
18 Introduction


18.1 Samplers

- FTP Request

- HTTP Request

- JDBC Request

- Java Request

- LDAP Request

- LDAP Extended Request

- Access Log Sampler

- BeanShell Sampler

- JSR223 Sampler

- TCP Sampler

- JMS Publisher

- JMS Subscriber

- JMS Point-to-Point

- JUnit Request

- Mail Reader Sampler

- Flow Control Action (was: Test Action)

- SMTP Sampler

- OS Process Sampler

- MongoDB Script (DEPRECATED)

- Bolt Request


18.2 Logic Controllers

- Simple Controller

- Loop Controller

- Once Only Controller

- Interleave Controller

- Random Controller

- Random Order Controller

- Throughput Controller

- Runtime Controller

- If Controller

- While Controller

- Switch Controller

- ForEach Controller

- Module Controller

- Include Controller

- Transaction Controller

- Recording Controller

- Critical Section Controller


18.3 Listeners

- Sample Result Save Configuration

- Graph Results

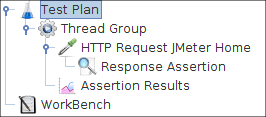

- Assertion Results

- View Results Tree

- Aggregate Report

- View Results in Table

- Simple Data Writer

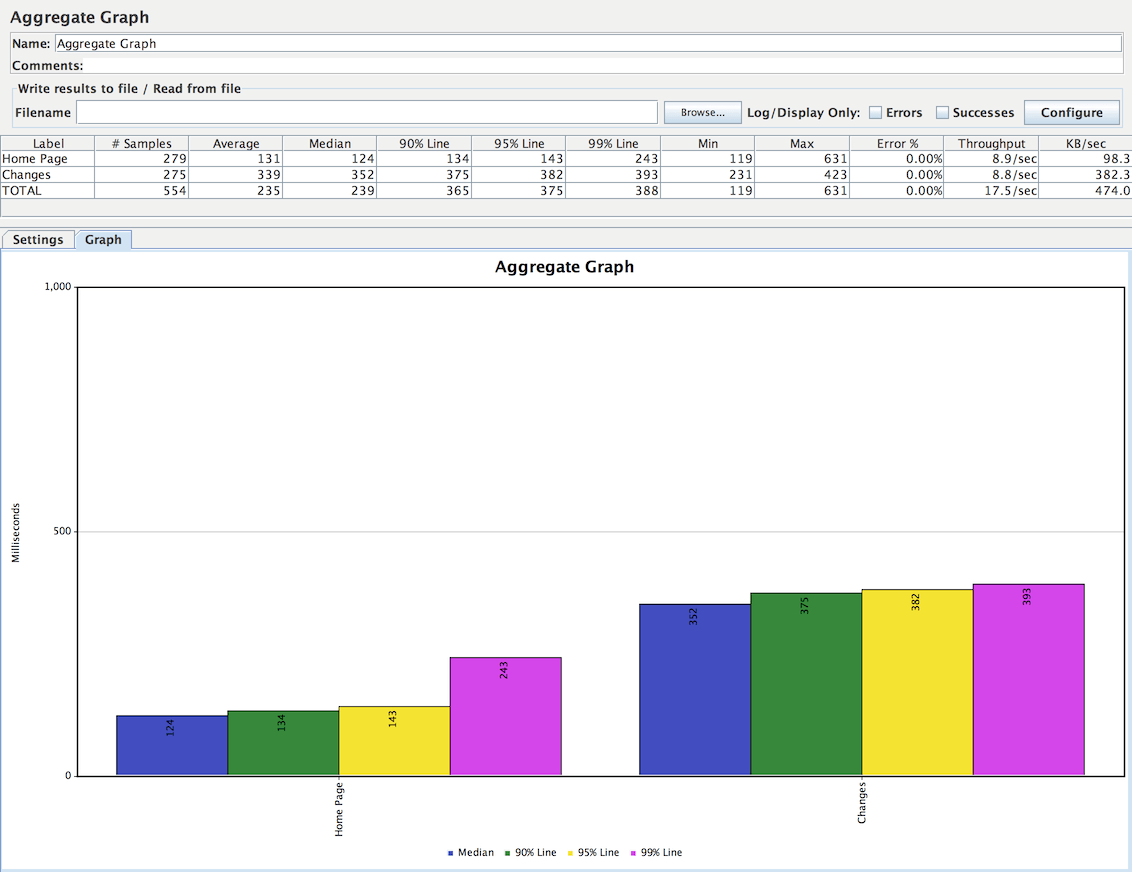

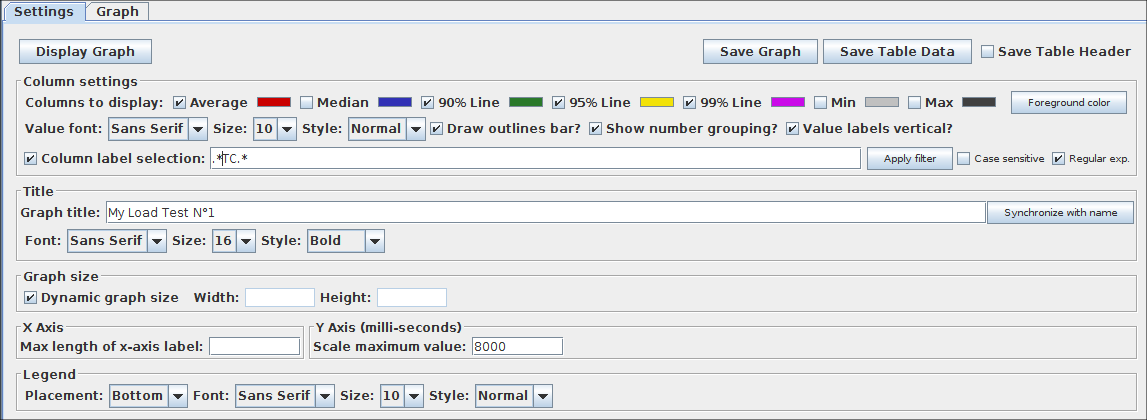

- Aggregate Graph

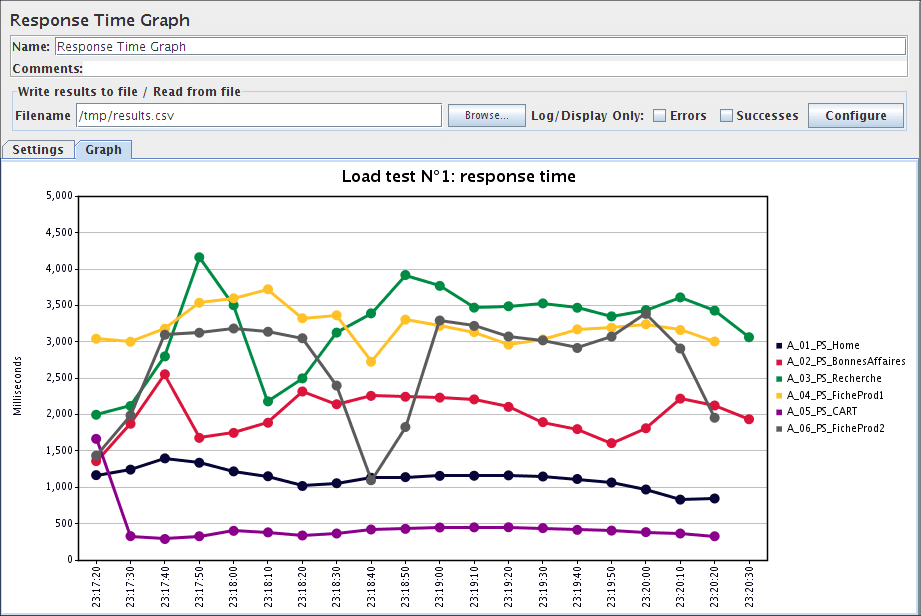

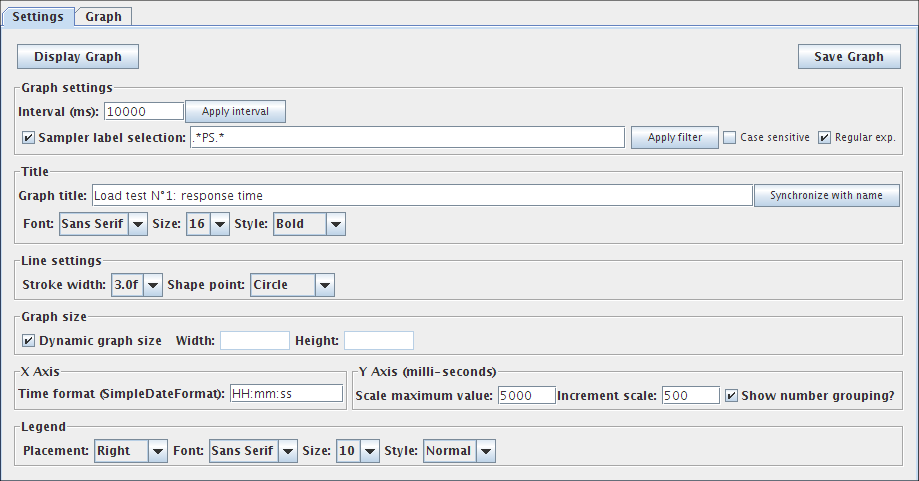

- Response Time Graph

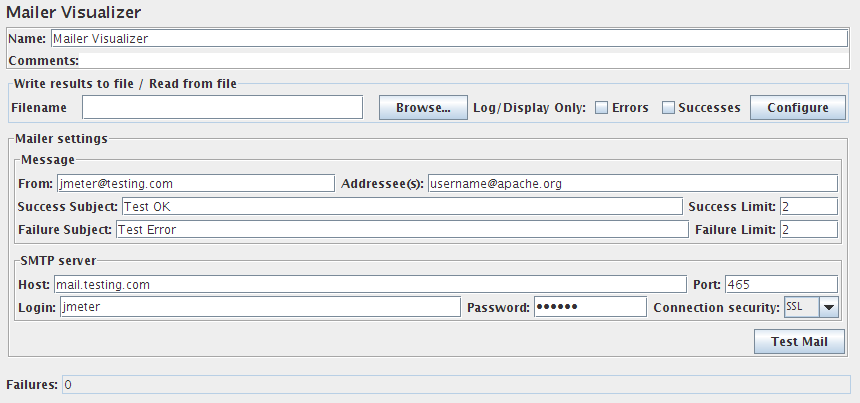

- Mailer Visualizer

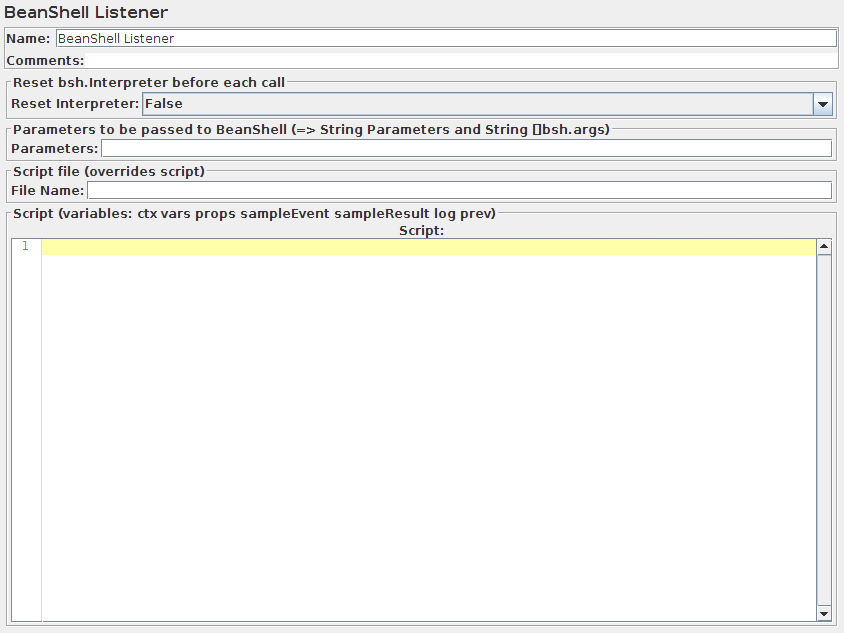

- BeanShell Listener

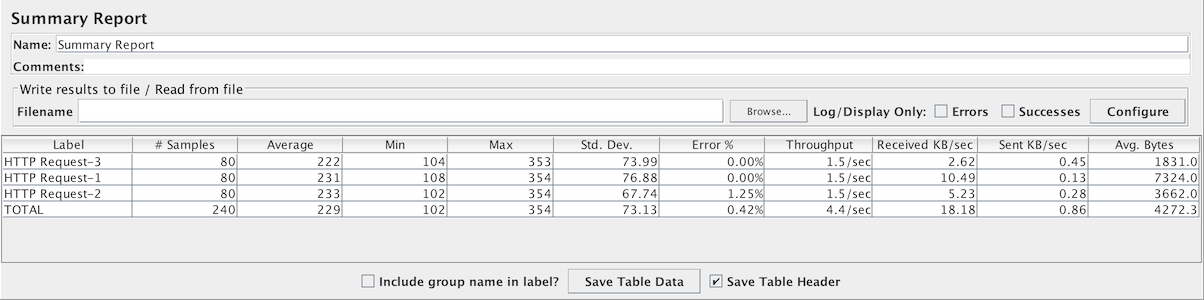

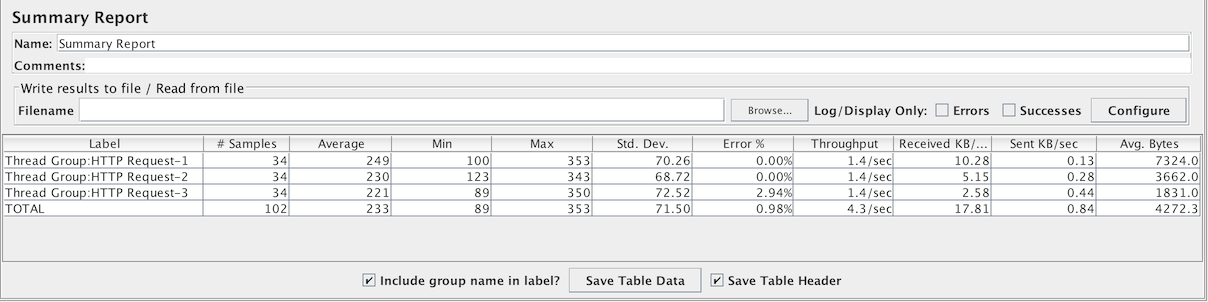

- Summary Report

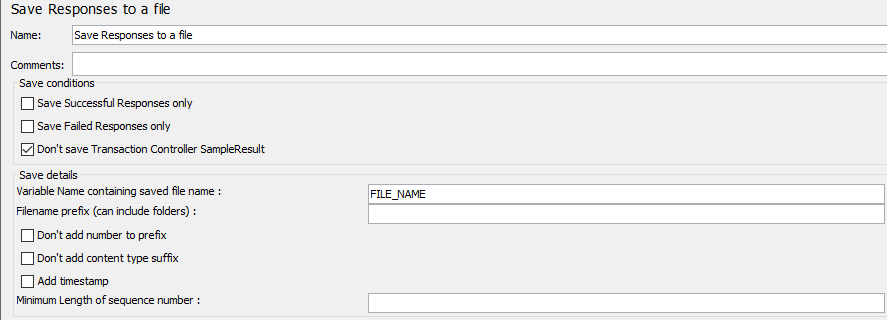

- Save Responses to a file

- JSR223 Listener

- Generate Summary Results

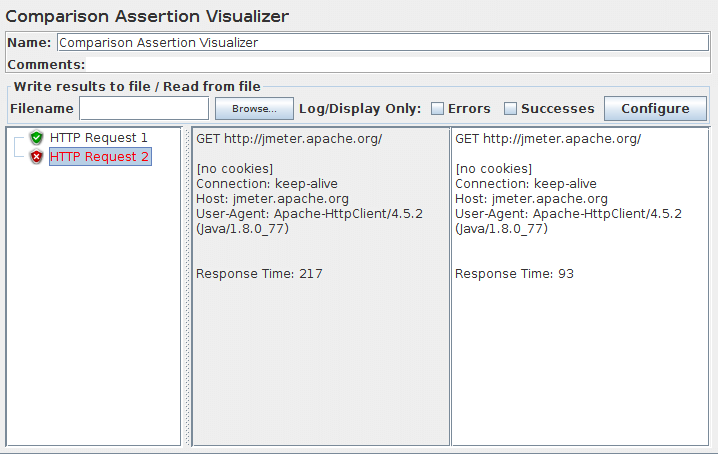

- Comparison Assertion Visualizer

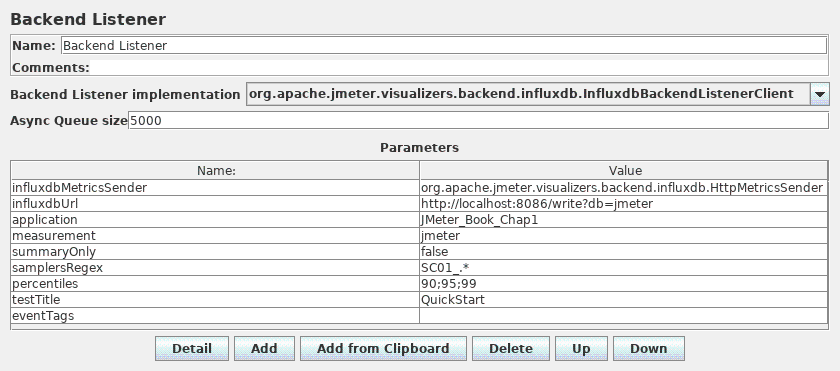

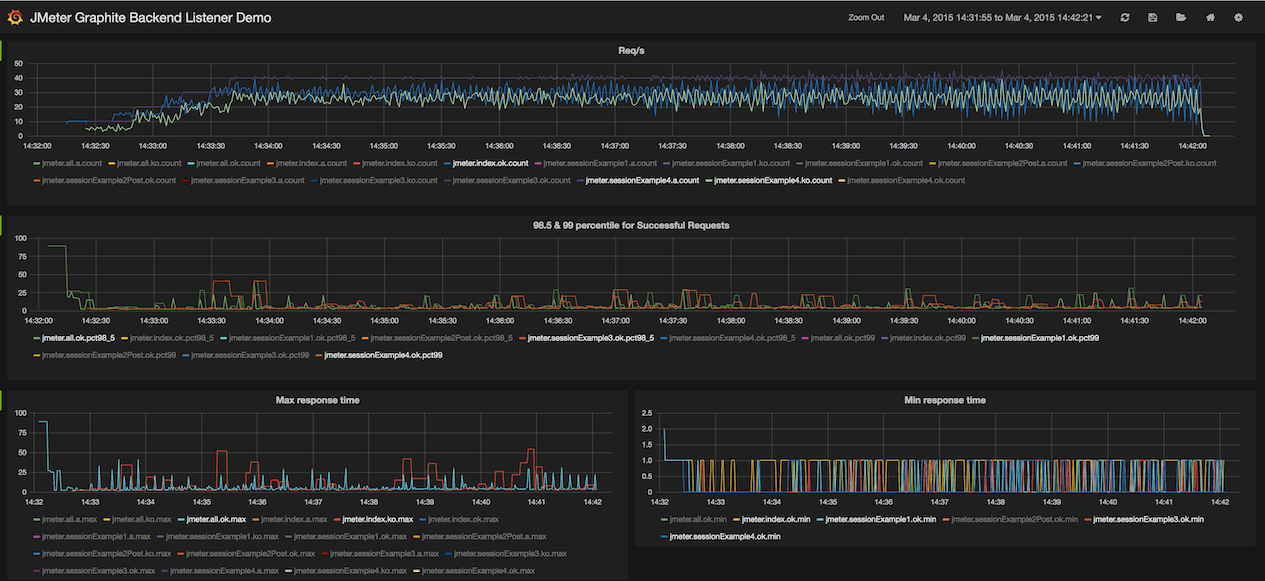

- Backend Listener


18.4 Configuration Elements

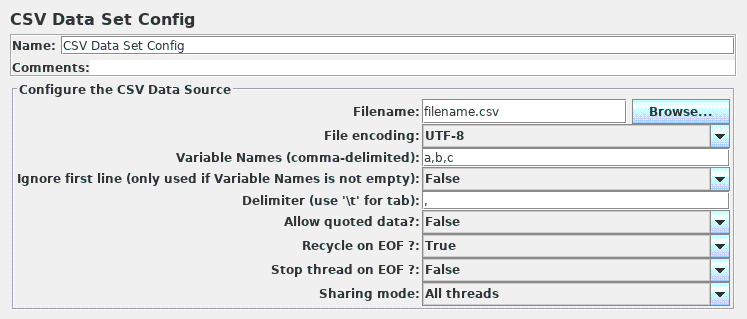

- CSV Data Set Config

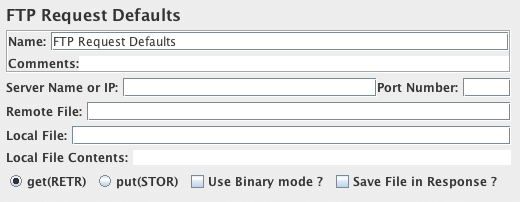

- FTP Request Defaults

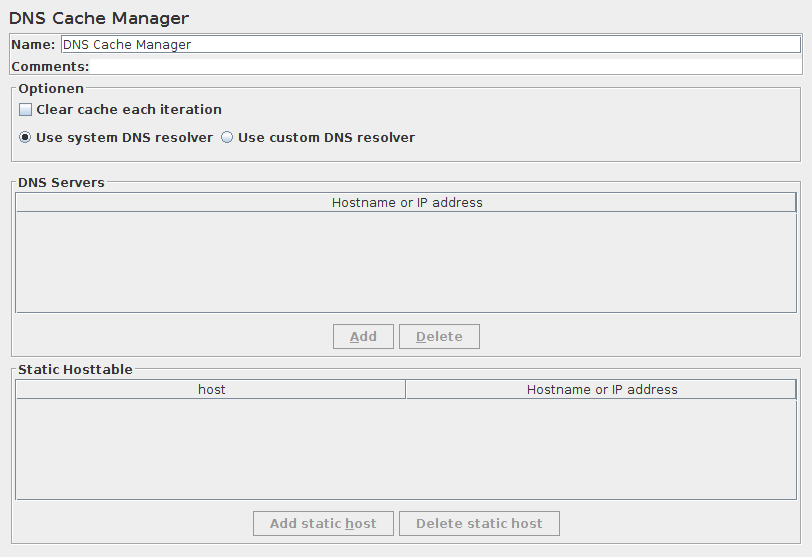

- DNS Cache Manager

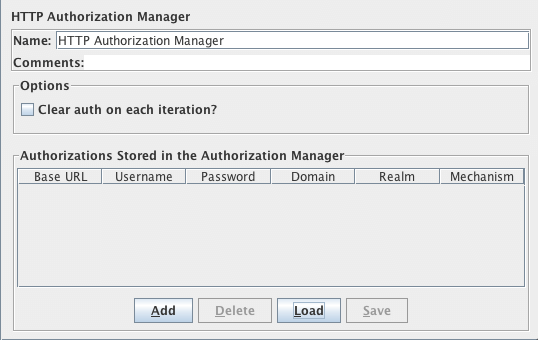

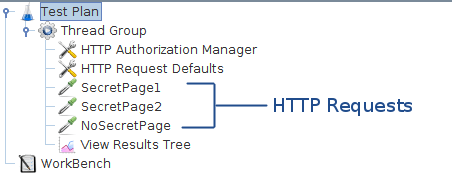

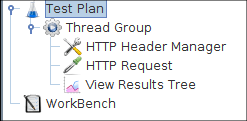

- HTTP Authorization Manager

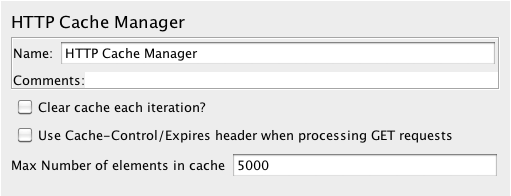

- HTTP Cache Manager

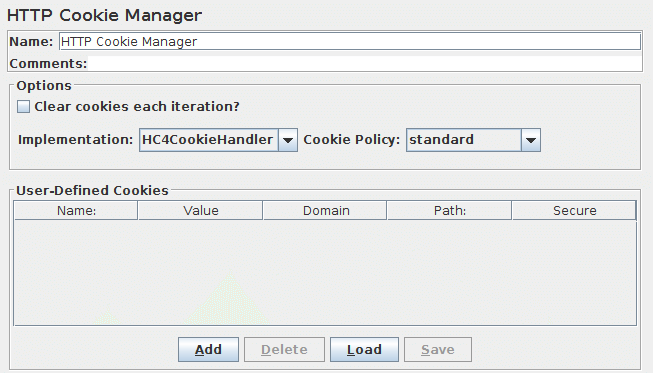

- HTTP Cookie Manager

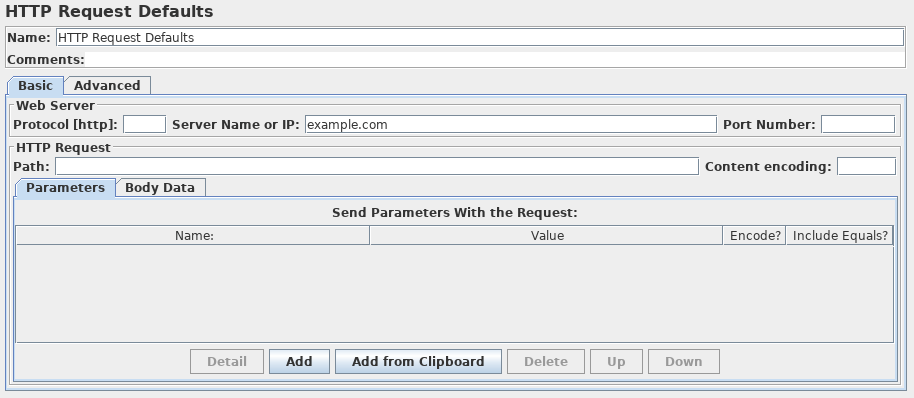

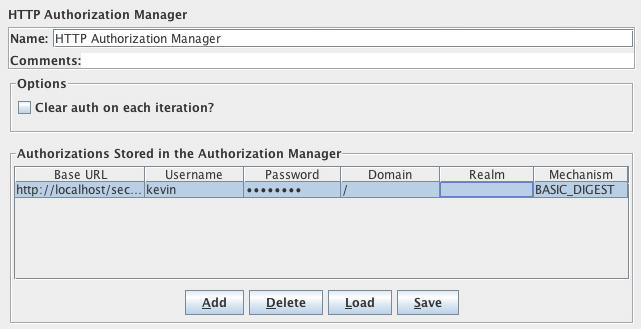

- HTTP Request Defaults

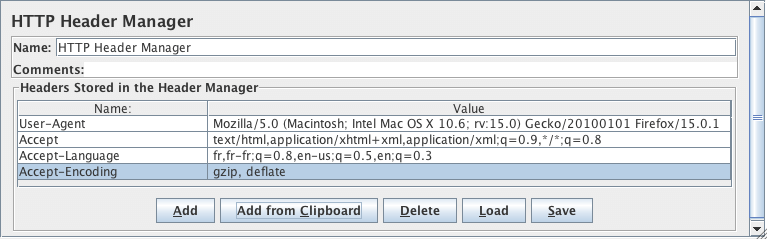

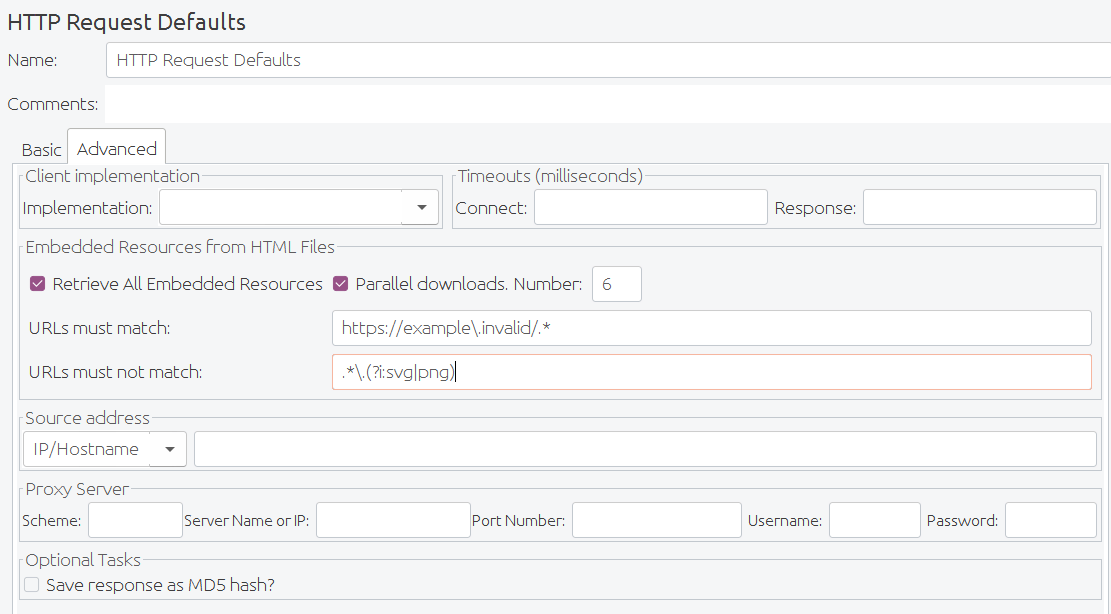

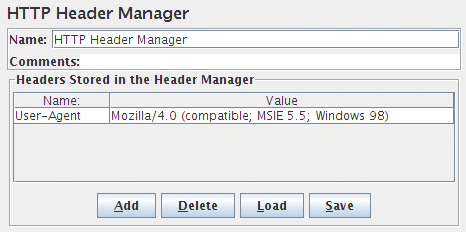

- HTTP Header Manager

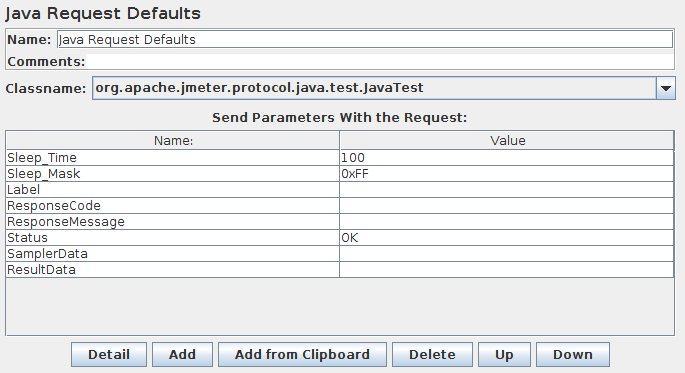

- Java Request Defaults

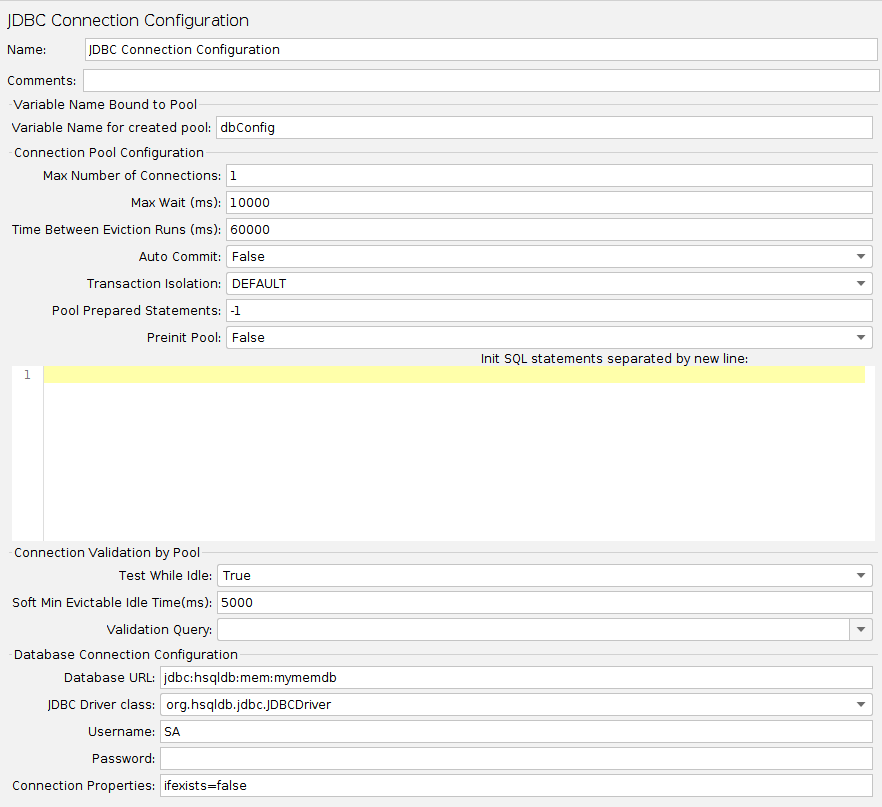

- JDBC Connection Configuration

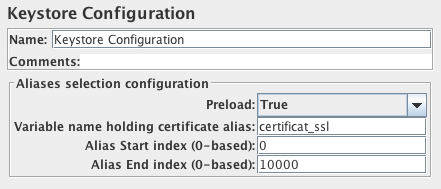

- Keystore Configuration

- Login Config Element

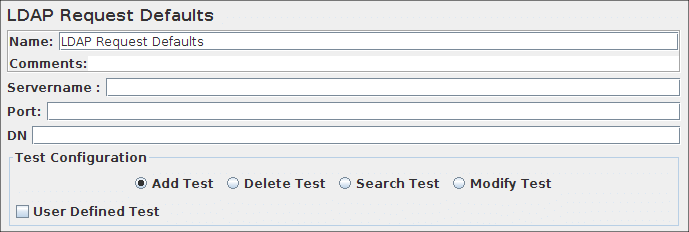

- LDAP Request Defaults

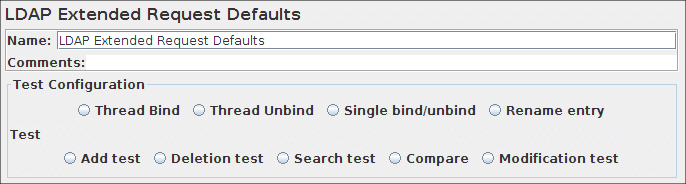

- LDAP Extended Request Defaults

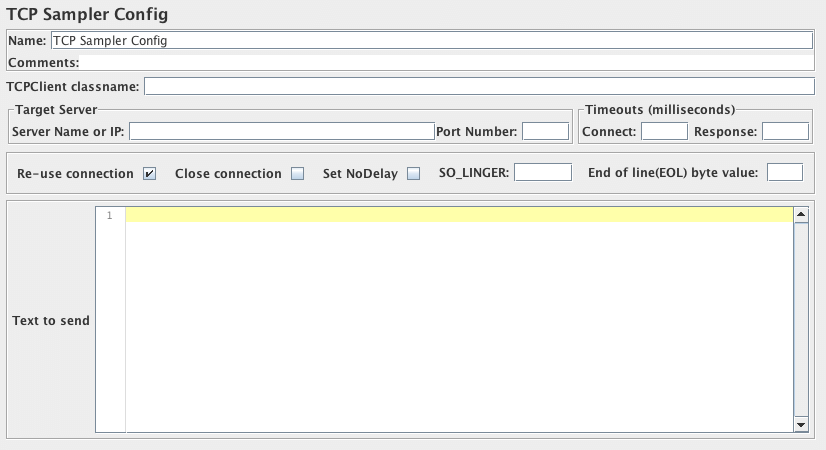

- TCP Sampler Config

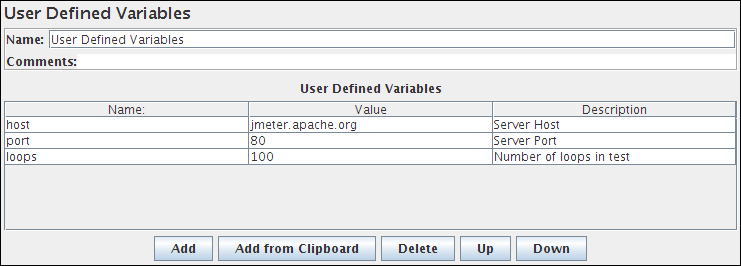

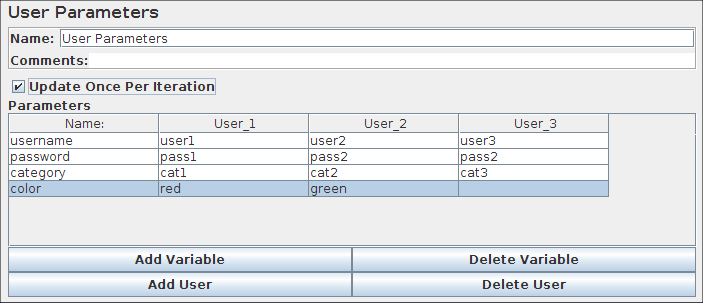

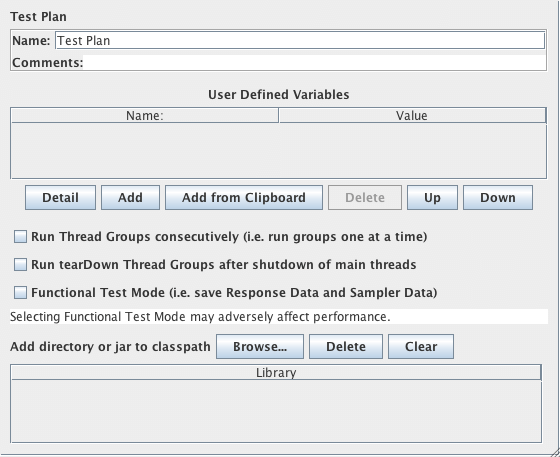

- User Defined Variables

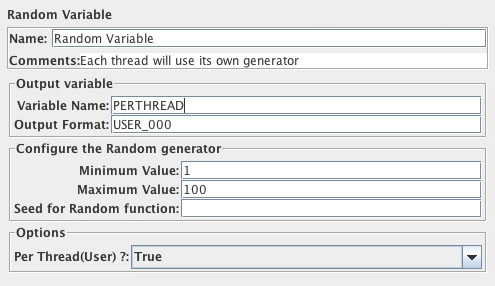

- Random Variable

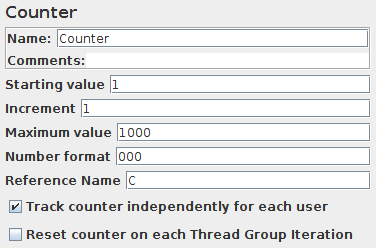

- Counter

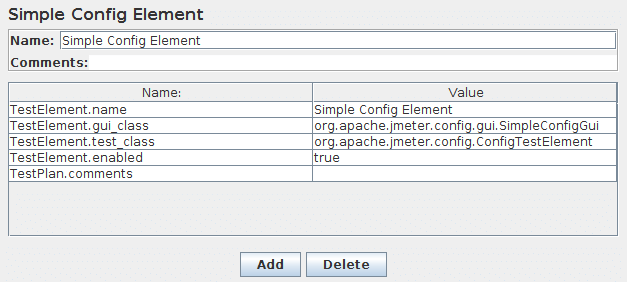

- Simple Config Element

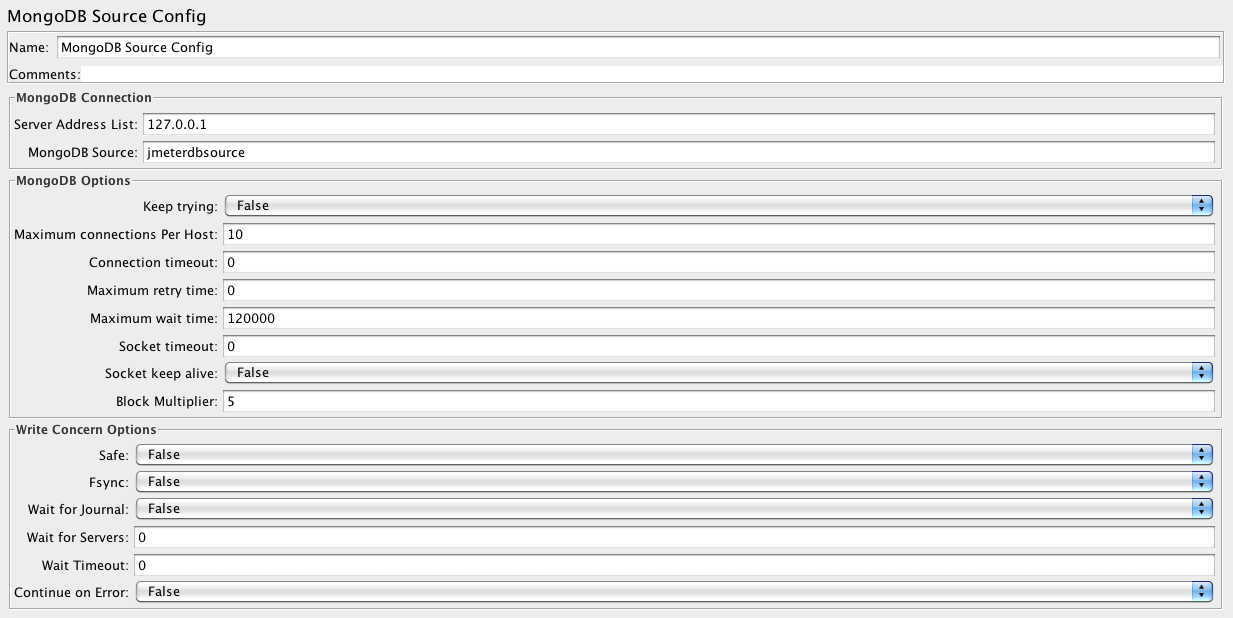

- MongoDB Source Config (DEPRECATED)

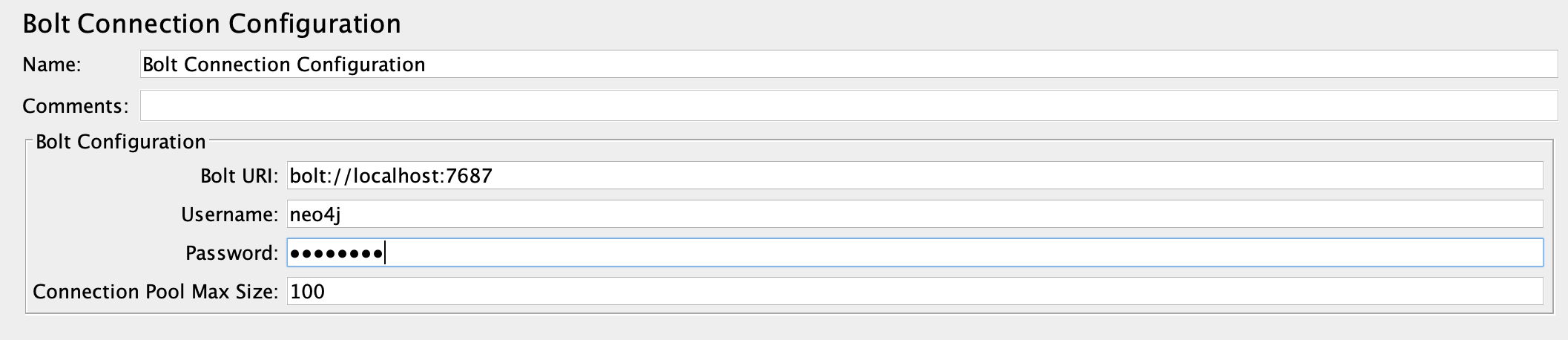

- Bolt Connection Configuration

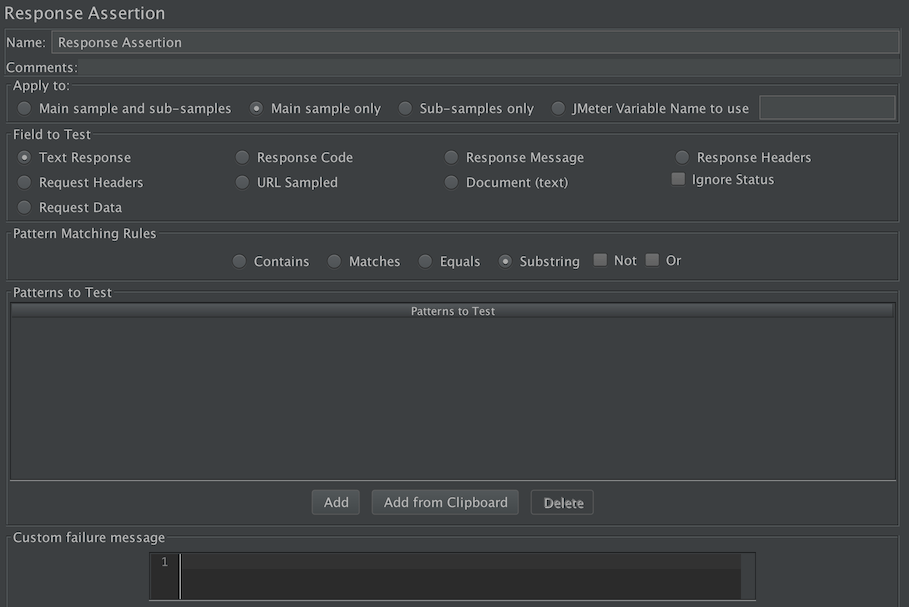

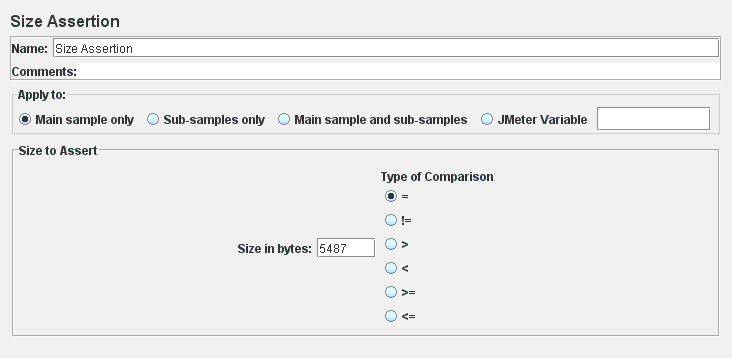

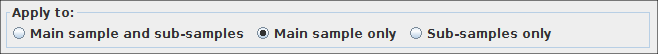

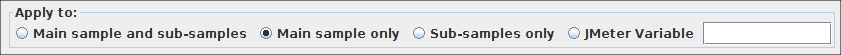

- 18.5 Assertions

- 18.6 Timers

- 18.7 Pre Processors

- 18.8 Post-Processors

- 18.9 Miscellaneous Features

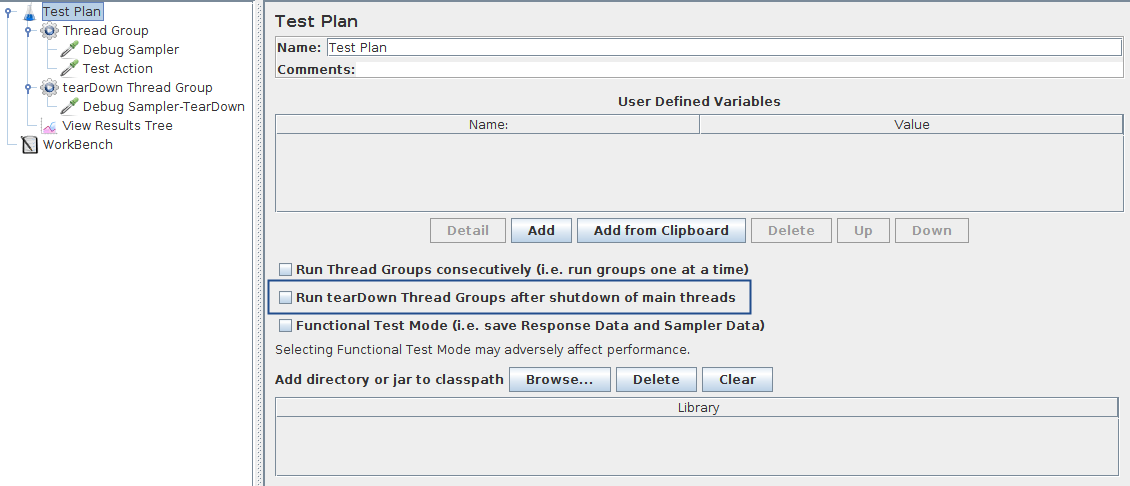

18 Introduction

18.1 Samplers

Samplers perform the actual work of JMeter. Each sampler (except Flow Control Action) generates one or more sample results. The sample results have various attributes (success/fail, elapsed time, data size etc.) and can be viewed in the various listeners.

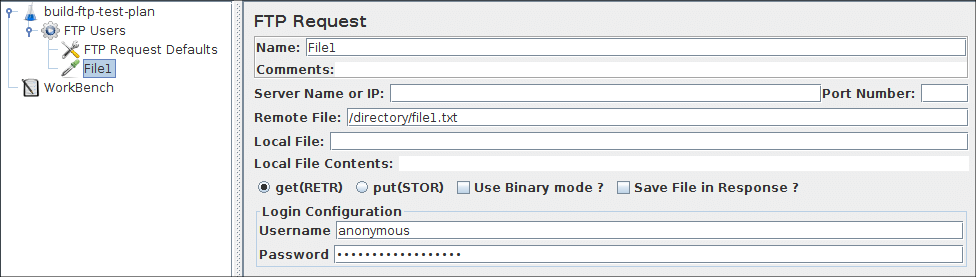

FTP Request

Latency is set to the time it takes to login.

Parameters

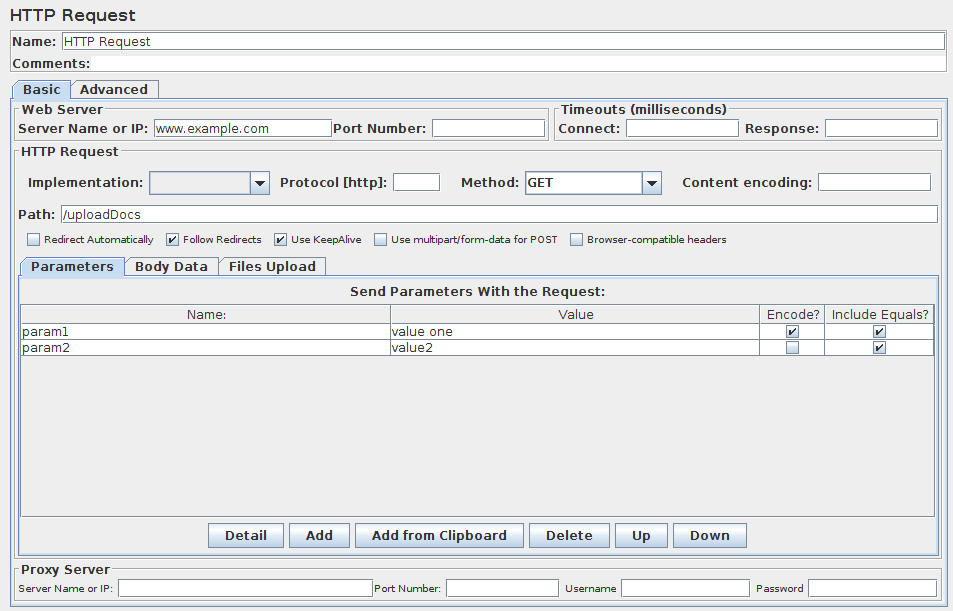

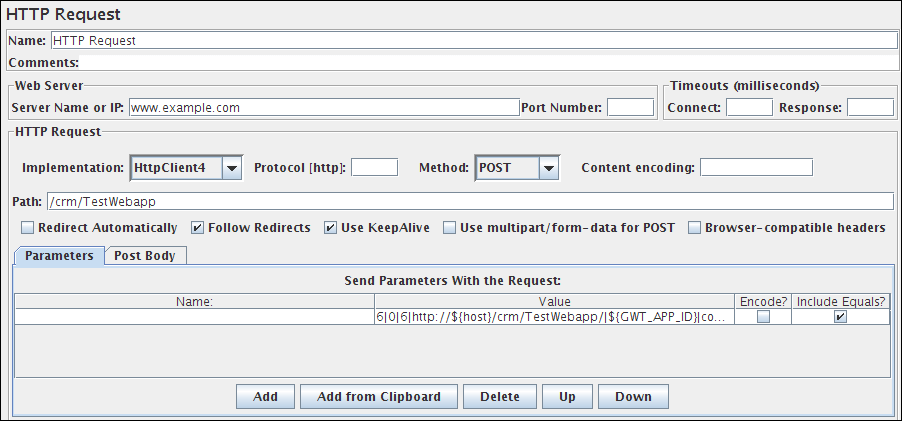

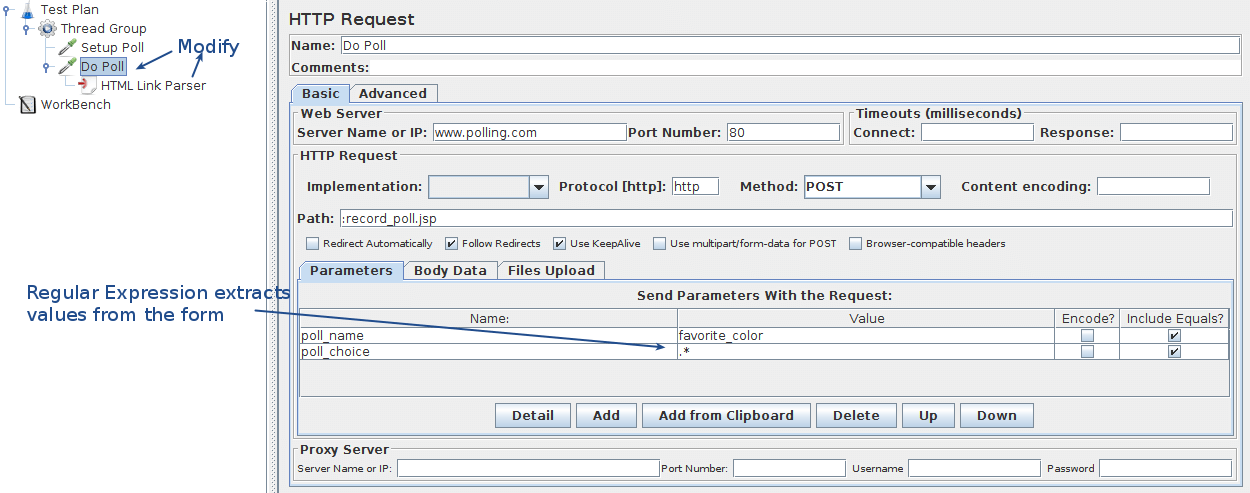

HTTP Request

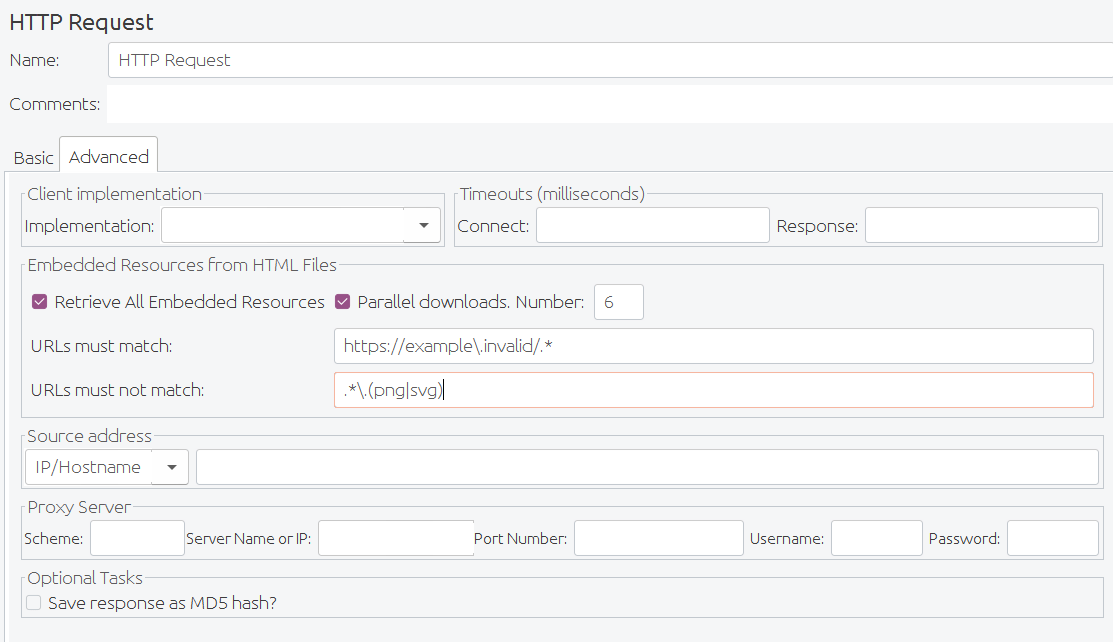

This sampler lets you send an HTTP/HTTPS request to a web server. It also lets you control whether or not JMeter parses HTML files for images and other embedded resources and sends HTTP requests to retrieve them. The following types of embedded resources are retrieved:

- images

- applets

- stylesheets (CSS) and resources referenced from those files

- external scripts

- frames, iframes

- background images (body, table, TD, TR)

- background sound

The default parser is org.apache.jmeter.protocol.http.parser.LagartoBasedHtmlParser. This can be changed by using the property "htmlparser.className" - see jmeter.properties for details.

If you are going to send multiple requests to the same web server, consider using an HTTP Request Defaults Configuration Element so you do not have to enter the same information for each HTTP Request.

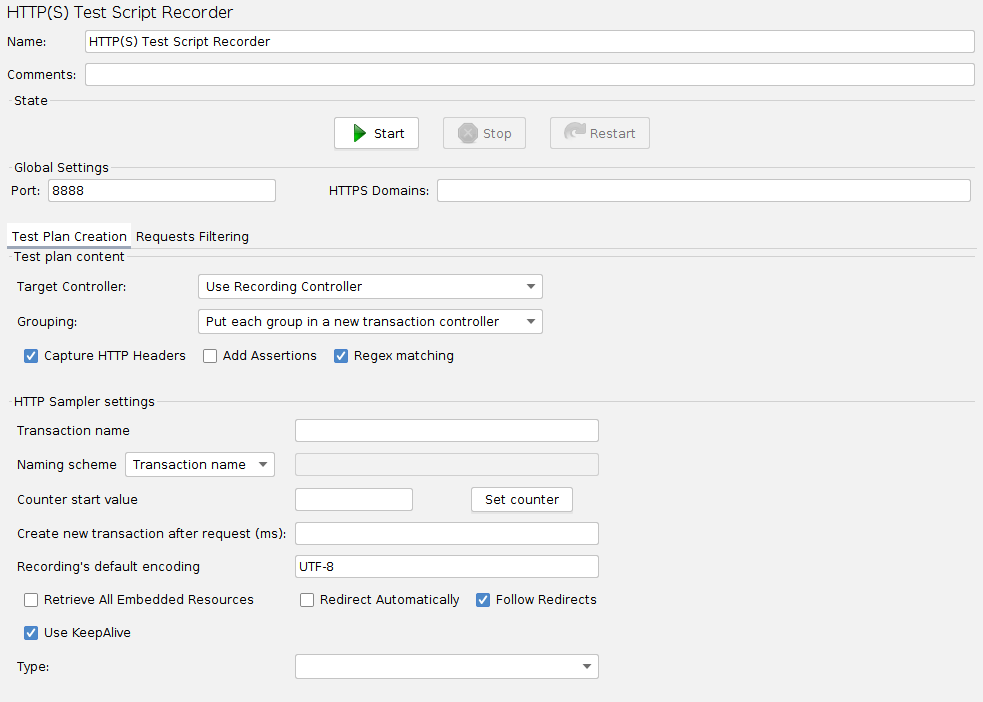

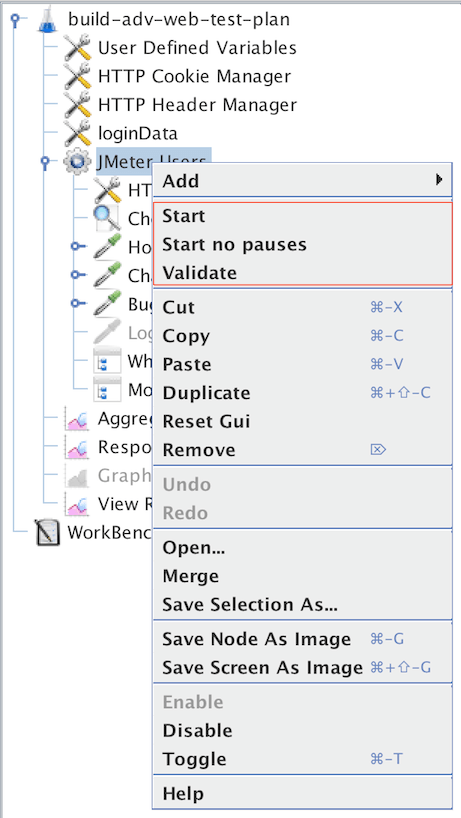

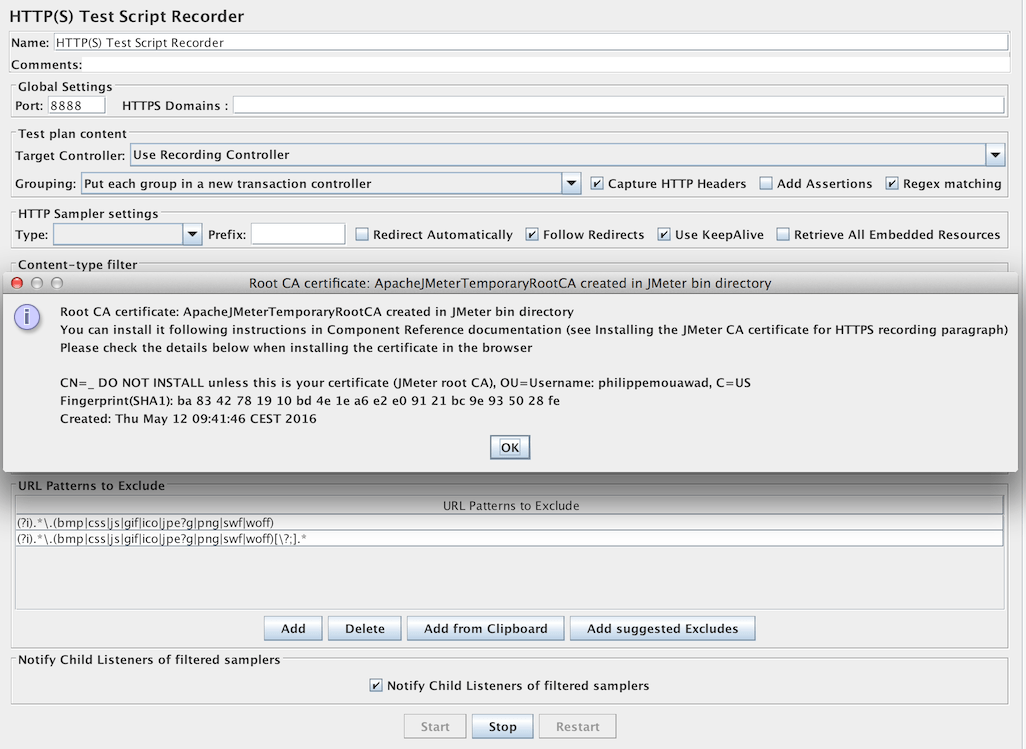

Or, instead of manually adding HTTP Requests, you may want to use JMeter's HTTP(S) Test Script Recorder to create them. This can save you time if you have a lot of HTTP requests or requests with many parameters.

There are three different test elements used to define the samplers:

- AJP/1.3 Sampler: uses the Tomcat mod_jk protocol (allows testing of Tomcat in AJP mode without needing Apache httpd). The AJP Sampler does not support multiple file upload; only the first file will be used.

- HTTP Request: this has an implementation drop-down box, which selects the HTTP protocol implementation to be used:

  - Java: uses the HTTP implementation provided by the JVM. This has some limitations in comparison with the HttpClient implementations; see below.

  - HTTPClient4: uses Apache HttpComponents HttpClient 4.x.

  - Blank Value: does not set the implementation on HTTP Samplers, so relies on HTTP Request Defaults if present, or on the jmeter.httpsampler property defined in jmeter.properties.

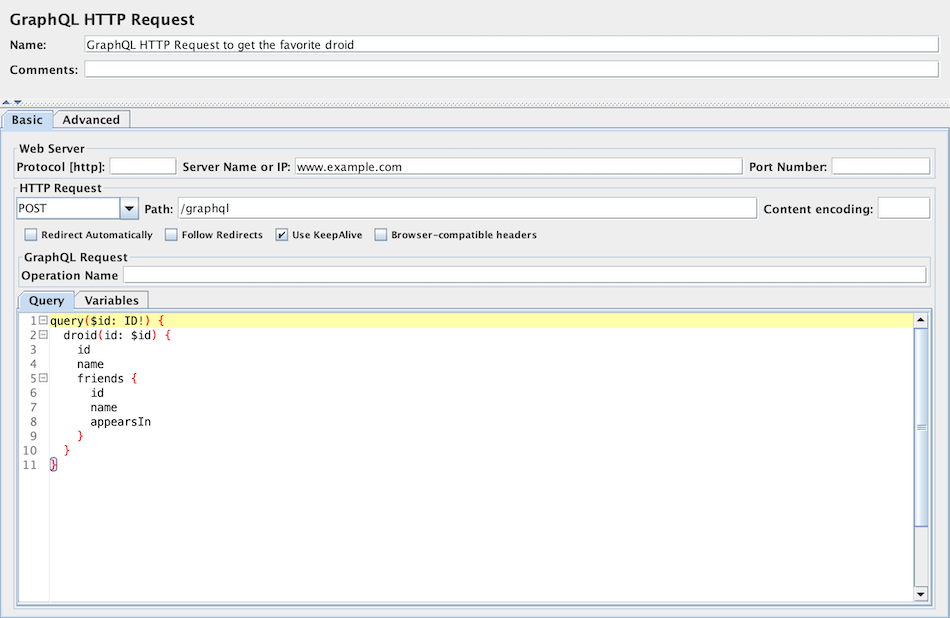

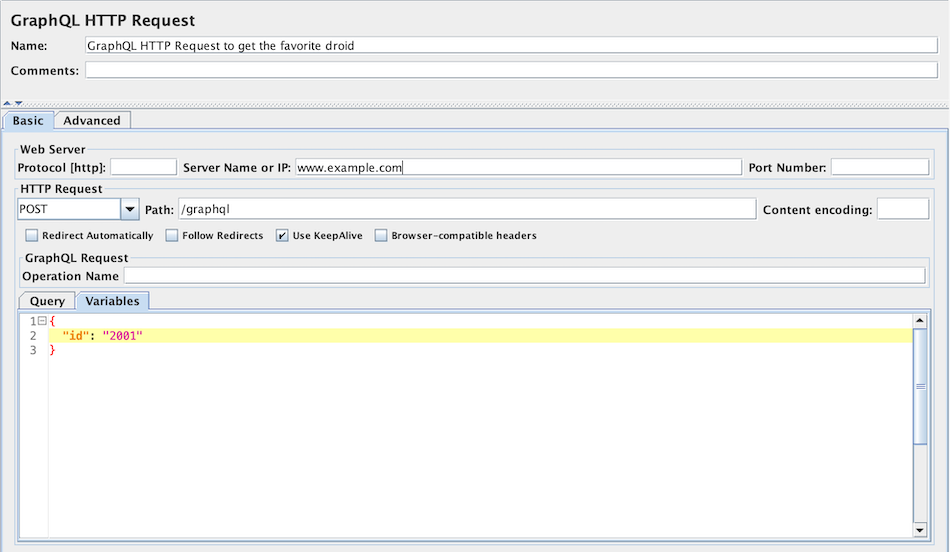

- GraphQL HTTP Request: this is a GUI variation of the HTTP Request that provides more convenient UI elements to view or edit the GraphQL Query, Variables and Operation Name, while converting them into HTTP Arguments automatically under the hood using the same sampler. This hides or customizes the following UI elements, as they are less convenient for, or irrelevant to, GraphQL over HTTP/HTTPS requests:

  - Method: only the POST and GET methods are available, conforming to the GraphQL over HTTP specification. POST is selected by default.

  - Parameters and Post Body tabs: you may view or edit parameter content through the Query, Variables and Operation Name UI elements instead.

  - File Upload tab: irrelevant to GraphQL queries.

  - Embedded Resources from HTML Files section in the Advanced tab: irrelevant in GraphQL JSON responses.

The Java HTTP implementation has some limitations:

- There is no control over how connections are re-used. When a connection is released by JMeter, it may or may not be re-used by the same thread.

- The API is best suited to single-threaded usage: various settings are defined via system properties, and therefore apply to all connections.

- No support for Kerberos authentication.

- It does not support client-based certificate testing with Keystore Config.

- Less control over the retry mechanism than the HttpClient implementation.

- It does not support virtual hosts.

- It supports only the following methods: GET, POST, HEAD, OPTIONS, PUT, DELETE and TRACE.

- Less control over DNS caching with the DNS Cache Manager than the HttpClient implementation.

If the request requires server or proxy login authorization (i.e. where a browser would create a pop-up dialog box), you will also have to add an HTTP Authorization Manager Configuration Element. For normal logins (i.e. where the user enters login information in a form), you will need to work out what the form submit button does, and create an HTTP request with the appropriate method (usually POST) and the appropriate parameters from the form definition. If the page uses HTTP, you can use the JMeter Proxy to capture the login sequence.

A separate SSL context is used for each thread. If you want to use a single SSL context (not the standard behaviour of browsers), set the JMeter property:

https.sessioncontext.shared=true

By default, since version 5.0, the SSL context is retained during a Thread Group iteration and reset for each test iteration. This is controlled by the following property; if in your test plan the same user iterates multiple times, then you should set it to false:

httpclient.reset_state_on_thread_group_iteration=true

If the server requires a specific SSL/TLS protocol level, set the JMeter property https.default.protocol, for example:

https.default.protocol=SSLv3

JMeter also allows one to enable additional protocols by changing the property https.socket.protocols.

If the request uses cookies, then you will also need an HTTP Cookie Manager. You can add either of these elements to the Thread Group or the HTTP Request. If you have more than one HTTP Request that needs authorizations or cookies, then add the elements to the Thread Group. That way, all HTTP Request controllers will share the same Authorization Manager and Cookie Manager elements.

If the request uses a technique called "URL Rewriting" to maintain sessions, then see section 6.1 Handling User Sessions With URL Rewriting for additional configuration steps.

Parameters

The server name or IP address is required, unless:

- it is provided by HTTP Request Defaults

- or a full URL including scheme, host and port (scheme://host:port) is set in the Path field

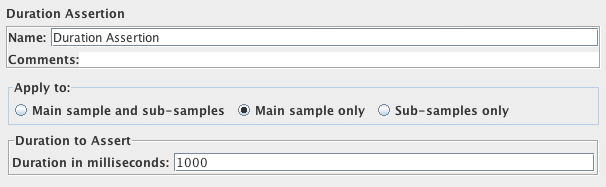

A Duration Assertion can be used to detect responses that take too long to complete.

More methods can be pre-defined for the HttpClient4 by using the JMeter property httpsampler.user_defined_methods.

If the log shows the message "Redirect requested but followRedirects is disabled", this can be ignored.

JMeter will collapse paths of the form '/../segment' in both absolute and relative redirect URLs. For example http://host/one/../two will be collapsed into http://host/two. If necessary, this behaviour can be suppressed by setting the JMeter property httpsampler.redirect.removeslashdotdot=false
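As an illustration of this kind of path collapsing, the standard java.net.URI.normalize() method removes "segment/.." pairs from a URL path. This is only an analogy under that assumption; JMeter's own redirect handling may be implemented differently, and RedirectPathDemo is a hypothetical name.

```java
import java.net.URI;

// Hypothetical helper: illustrates collapsing "/../segment" in a redirect URL.
// URI.normalize() removes "." segments and "segment/.." pairs from the path;
// this is not necessarily how JMeter does it internally.
class RedirectPathDemo {
    static String collapse(String url) {
        return URI.create(url).normalize().toString();
    }

    public static void main(String[] args) {
        System.out.println(collapse("http://host/one/../two")); // http://host/two
    }
}
```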

Additionally, you can specify whether each parameter should be URL encoded. If you are not sure what this means, it is probably best to select it. Encoding is usually required if your values contain characters such as the following:

- ASCII control characters

- Non-ASCII characters

- Reserved characters: URLs use some characters for special purposes in defining their syntax. When these characters are not used in their special role inside a URL, they need to be encoded, for example: '$', '&', '+', ',', '/', ':', ';', '=', '?', '@'

- Unsafe characters: some characters present the possibility of being misunderstood within URLs for various reasons. These characters should also always be encoded, for example: ' ', '<', '>', '#', '%', …
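A minimal sketch of parameter encoding, assuming form-style (application/x-www-form-urlencoded) encoding as performed by java.net.URLEncoder. JMeter's exact encoding rules may differ, for example in how a space is written. ParamEncodingDemo is a hypothetical name, and the Charset overload of URLEncoder.encode needs Java 10 or later.

```java
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;

// Illustrative only: percent-encodes a parameter value the way an HTML form
// would. Reserved, unsafe and non-ASCII characters are all escaped.
class ParamEncodingDemo {
    static String encode(String value) {
        return URLEncoder.encode(value, StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        // '&', '=', '/' are reserved; ' ' is unsafe (form encoding writes it as '+')
        System.out.println(encode("a b&c=d/e")); // a+b%26c%3Dd%2Fe
        // non-ASCII 'e acute' becomes its UTF-8 bytes, percent-encoded
        System.out.println(encode("\u00e9"));    // %C3%A9
    }
}
```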

When MIME Type is empty, JMeter will try to guess the MIME type of the given file.

If it is a POST, PUT or PATCH request and there is a single file whose 'Parameter name' attribute (below) is omitted, then the file is sent as the entire body of the request, i.e. no wrappers are added. This allows arbitrary bodies to be sent. See below for some further information on parameter handling.

To specify how the source address value is interpreted, select one of these types:

- Select IP/Hostname to use a specific IP address or a (local) hostname

- Select Device to pick the first available address for that interface, which may be either IPv4 or IPv6

- Select Device IPv4 to select the IPv4 address of the device name (like eth0, lo, em0, etc.)

- Select Device IPv6 to select the IPv6 address of the device name (like eth0, lo, em0, etc.)

This property is used to enable IP Spoofing. It overrides the default local IP address for this sample. The JMeter host must have multiple IP addresses (i.e. IP aliases, network interfaces, devices). The value can be a host name, IP address, or a network interface device such as "eth0" or "lo" or "wlan0".

If the property httpclient.localaddress is defined, that is used for all HttpClient requests.

The following parameters are available only for GraphQL HTTP Request:

Parameters

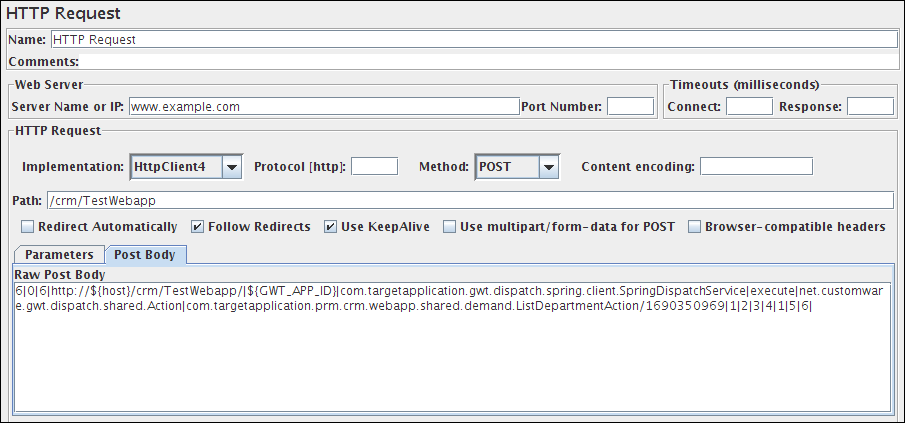

Parameter Handling:

For the POST and PUT methods, if there is no file to send, and the name(s) of the parameter(s) are omitted, then the body is created by concatenating all the value(s) of the parameters. Note that the values are concatenated without adding any end-of-line characters. These can be added by using the __char() function in the value fields. This allows arbitrary bodies to be sent. The values are encoded if the encoding flag is set. See also the MIME Type above for how you can control the content-type request header that is sent.

For other methods, if the name of the parameter is missing, then the parameter is ignored. This allows the use of optional parameters defined by variables.
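The two rules above can be modelled in a few lines of Java. This is a hypothetical sketch: UnnamedParamDemo and its Param class are illustrative names, not JMeter types, and form-style encoding via java.net.URLEncoder (Java 10+) is assumed when the encoding flag is set.

```java
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;
import java.util.List;
import java.util.stream.Collectors;

// Hypothetical model of the parameter-handling rules; not JMeter's API.
class UnnamedParamDemo {
    static final class Param {
        final String name;     // empty string means "no name"
        final String value;
        final boolean encode;  // the per-parameter encoding flag

        Param(String name, String value, boolean encode) {
            this.name = name;
            this.value = value;
            this.encode = encode;
        }
    }

    static String renderValue(Param p) {
        return p.encode ? URLEncoder.encode(p.value, StandardCharsets.UTF_8) : p.value;
    }

    // POST/PUT, no file, unnamed parameters: the values are concatenated
    // as-is, with no separators and no end-of-line characters.
    static String postBody(List<Param> params) {
        return params.stream().map(UnnamedParamDemo::renderValue).collect(Collectors.joining());
    }

    // Other methods: parameters without a name are simply ignored.
    static String query(List<Param> params) {
        return params.stream()
                .filter(p -> !p.name.isEmpty())
                .map(p -> p.name + "=" + renderValue(p))
                .collect(Collectors.joining("&"));
    }

    public static void main(String[] args) {
        List<Param> json = List.of(new Param("", "{\"id\":", false), new Param("", "23}", false));
        System.out.println(postBody(json)); // {"id":23}
        List<Param> get = List.of(new Param("q", "a b", true), new Param("", "unused", false));
        System.out.println(query(get));     // q=a+b
    }
}
```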

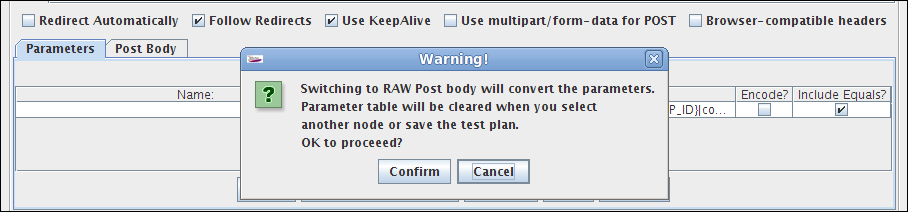

You have the option to switch to the Body Data tab when a request has only unnamed parameters (or no parameters at all). This option is useful in the following cases (amongst others):

- GWT RPC HTTP Request

- JSON REST HTTP Request

- XML REST HTTP Request

- SOAP HTTP Request

In Body Data mode, each line will be sent with CRLF appended, apart from the last line. To send a CRLF after the last line of data, just ensure that there is an empty line following it. (This cannot be seen, except by noting whether the cursor can be placed on the subsequent line.)
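A sketch of this line-ending rule, assuming the editor text uses "\n" as its line separator; BodyDataDemo is a hypothetical name and does not reflect JMeter's internal code.

```java
// Hypothetical helper: turns the Body Data editor text into the body that
// goes on the wire, following the CRLF rule described above.
class BodyDataDemo {
    static String toWireBody(String editorText) {
        // limit -1 keeps a trailing empty string, i.e. an empty last line,
        // so "a\nb\n" becomes ["a", "b", ""] and the result ends with CRLF.
        String[] lines = editorText.split("\n", -1);
        // CRLF goes between lines only, never after the last one.
        return String.join("\r\n", lines);
    }

    public static void main(String[] args) {
        System.out.println(toWireBody("line1\nline2").replace("\r\n", "<CRLF>"));   // line1<CRLF>line2
        System.out.println(toWireBody("line1\nline2\n").replace("\r\n", "<CRLF>")); // line1<CRLF>line2<CRLF>
    }
}
```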

Method Handling:

The GET, DELETE, POST, PUT and PATCH request methods work similarly, except that as of 3.1, only the POST method supports multipart requests or file upload.

The PUT and PATCH method body must be provided as one of the following:

- define the body as a file with empty Parameter name field; in which case the MIME Type is used as the Content-Type

- define the body as parameter value(s) with no name

- use the Body Data tab

The GET, DELETE and POST methods have an additional way of passing parameters by using the Parameters tab. GET, DELETE, PUT and PATCH require a Content-Type. If not using a file, attach a Header Manager to the sampler and define the Content-Type there.

JMeter scans responses for embedded resources. It uses the property HTTPResponse.parsers, which is a list of parser ids, e.g. htmlParser, cssParser and wmlParser. For each id found, JMeter checks two further properties:

- id.types - a list of content types

- id.className - the parser to be used to extract the embedded resources

See the jmeter.properties file for the details of the settings. If the HTTPResponse.parsers property is not set, JMeter reverts to the previous behaviour, i.e. only text/html responses will be scanned.

Emulating slow connections: HttpClient4 and Java Sampler support emulation of slow connections; see the following entries in jmeter.properties:

    # Define characters per second > 0 to emulate slow connections
    #httpclient.socket.http.cps=0
    #httpclient.socket.https.cps=0

However, the Java sampler only supports slow HTTPS connections.
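To pick a cps value, a back-of-the-envelope conversion from a link speed can help. This sketch assumes that one "character" is one byte (8 bits) and that throttling behaves like a steady rate; both are assumptions, not statements about JMeter's internals, and SlowConnectionDemo is a hypothetical name.

```java
// Hypothetical helper for choosing a cps (characters per second) value.
class SlowConnectionDemo {
    // e.g. a 56 kbit/s modem link -> 7000 characters per second
    static int cpsForBitsPerSecond(int bitsPerSecond) {
        return bitsPerSecond / 8;
    }

    // Lower bound on transfer time in milliseconds, rounded up.
    static long minTransferMillis(long bytes, int cps) {
        return (bytes * 1000 + cps - 1) / cps;
    }

    public static void main(String[] args) {
        int cps = cpsForBitsPerSecond(56_000);
        System.out.println("httpclient.socket.http.cps=" + cps);               // httpclient.socket.http.cps=7000
        System.out.println(minTransferMillis(8343, cps) + " ms for 8343 bytes"); // 1192 ms for 8343 bytes
    }
}
```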

Response size calculation

The HttpClient4 implementation does include the overhead in the response body size, so the value may be greater than the number of bytes in the response content.

Retry handling

By default retry has been set to 0 for both HttpClient4 and Java implementations, meaning no retry is attempted.

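For HttpClient4, the retry count can be overridden by setting the relevant JMeter property, for example:

httpclient4.retrycount=3

HttpClient4 will also retry requests that have already been sent if the following property is set to true:

httpclient4.request_sent_retry_enabled=true

For the Java implementation, the retry count can be overridden in the same way, for example:

http.java.sampler.retries=3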

Note: Certificates does not conform to algorithm constraints

You may encounter the following error: java.security.cert.CertificateException: Certificates does not conform to algorithm constraints

if you run an HTTPS request on a web site with an SSL certificate (itself or one of the SSL certificates in its chain of trust) with a signature algorithm using MD2 (like md2WithRSAEncryption), or with an SSL certificate with a key size lower than 1024 bits.

This error is related to increased security in Java 8.

To allow you to perform your HTTPS request, you can downgrade the security of your Java installation by editing the Java jdk.certpath.disabledAlgorithms property. Remove the MD2 value or the constraint on size, depending on your case.

This property is in this file (Java 8 layout; on Java 9 and later the file is JAVA_HOME/conf/security/java.security):

JAVA_HOME/jre/lib/security/java.security

See Bug 56357 for details.
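To check what the current Java runtime disables before editing java.security, the effective value can be read with java.security.Security.getProperty. The sketch below only inspects the value; the matching in mentions() is a simplification (it checks whether any comma-separated entry starts with the given token), and DisabledAlgorithmsDemo is a hypothetical name.

```java
import java.security.Security;

// Illustrative only: prints jdk.certpath.disabledAlgorithms and reports
// whether MD2 appears among its comma-separated entries.
class DisabledAlgorithmsDemo {
    static boolean mentions(String propertyValue, String token) {
        if (propertyValue == null) {
            return false; // property not defined in this runtime
        }
        for (String entry : propertyValue.split(",")) {
            // entries look like "MD2" or "RSA keySize < 1024"
            if (entry.trim().toUpperCase().startsWith(token.toUpperCase())) {
                return true;
            }
        }
        return false;
    }

    public static void main(String[] args) {
        String value = Security.getProperty("jdk.certpath.disabledAlgorithms");
        System.out.println("jdk.certpath.disabledAlgorithms = " + value);
        System.out.println("MD2 disabled: " + mentions(value, "MD2"));
    }
}
```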

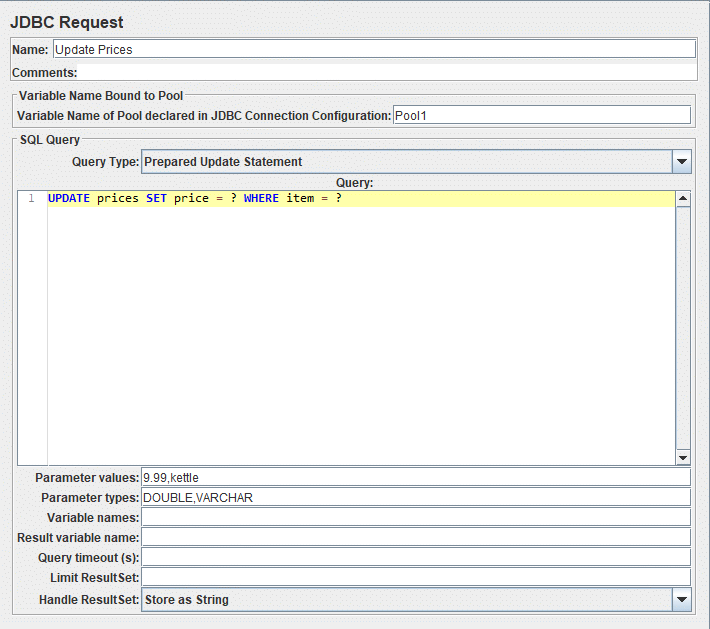

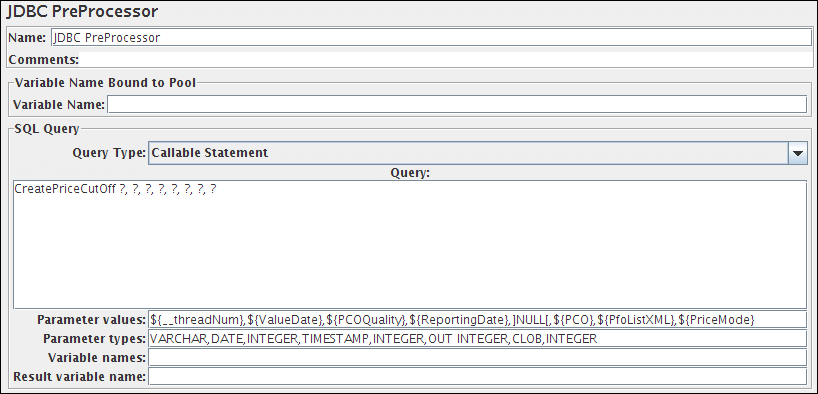

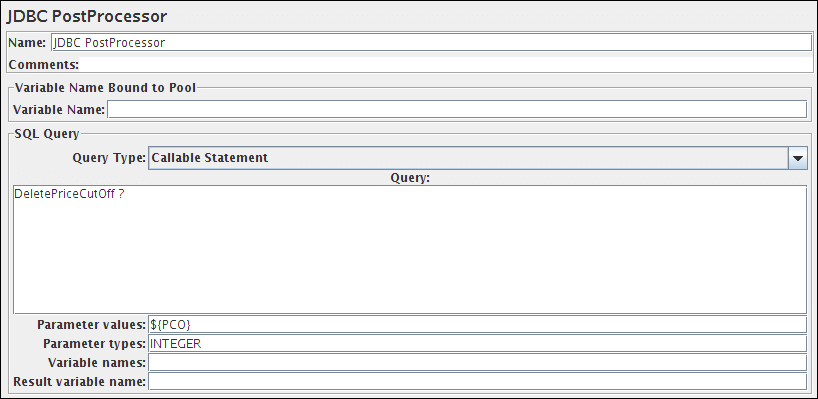

JDBC Request

This sampler lets you send a JDBC Request (an SQL query) to a database.

Before using this you need to set up a JDBC Connection Configuration element.

If the Variable Names list is provided, then for each row returned by a Select statement, the variables are set up with the value of the corresponding column (if a variable name is provided), and the count of rows is also set up. For example, if the Select statement returns 2 rows of 3 columns, and the variable list is A,,C, then the following variables will be set up:

- A_#=2 (number of rows)

- A_1=column 1, row 1

- A_2=column 1, row 2

- C_#=2 (number of rows)

- C_1=column 3, row 1

- C_2=column 3, row 2

If the Select statement returns zero rows, then the A_# and C_# variables would be set to 0, and no other variables would be set.

Old variables are cleared if necessary - e.g. if the first select retrieves six rows and a second select returns only three rows, the additional variables for rows four, five and six will be removed.
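The naming scheme described above can be sketched as follows. This is a hypothetical illustration (JdbcVarNamingDemo is not a JMeter class) that takes already-fetched rows as lists of strings instead of a real java.sql.ResultSet, and it does not model the clearing of old variables.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical sketch of the JDBC Request variable naming scheme.
class JdbcVarNamingDemo {
    static Map<String, String> toVariables(String variableNames, List<List<String>> rows) {
        Map<String, String> vars = new LinkedHashMap<>();
        // limit -1 keeps empty entries, so "A,,C" -> ["A", "", "C"]
        String[] names = variableNames.split(",", -1);
        for (int col = 0; col < names.length; col++) {
            String name = names[col].trim();
            if (name.isEmpty()) {
                continue; // no variable requested for this column
            }
            vars.put(name + "_#", String.valueOf(rows.size()));
            for (int row = 0; row < rows.size(); row++) {
                List<String> r = rows.get(row);
                if (col < r.size()) {
                    // variables are numbered from 1: NAME_1 holds the first row
                    vars.put(name + "_" + (row + 1), r.get(col));
                }
            }
        }
        return vars;
    }

    public static void main(String[] args) {
        List<List<String>> rows = List.of(
                List.of("a1", "b1", "c1"),
                List.of("a2", "b2", "c2"));
        System.out.println(toVariables("A,,C", rows));
        // {A_#=2, A_1=a1, A_2=a2, C_#=2, C_1=c1, C_2=c2}
    }
}
```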

Parameters

- Select Statement

- Update Statement - use this for Inserts and Deletes as well

- Callable Statement

- Prepared Select Statement

- Prepared Update Statement - use this for Inserts and Deletes as well

- Commit

- Rollback

- Autocommit(false)

- Autocommit(true)

- Edit - this should be a variable reference that evaluates to one of the above

Example SQL statements:

- select * from t_customers where id=23

- CALL SYSCS_UTIL.SYSCS_EXPORT_TABLE (null, ?, ?, null, null, null), with:

  - Parameter values: tablename,filename

  - Parameter types: VARCHAR,VARCHAR

The list must be enclosed in double-quotes if any of the values contain a comma or double-quote, and any embedded double-quotes must be doubled-up, for example:

"Dbl-Quote: "" and Comma: ,"

Parameter types are defined as fields in the class java.sql.Types; see, for example, the Javadoc for java.sql.Types.

If not specified, "IN" is assumed, i.e. "DATE" is the same as "IN DATE".

If the type is not one of the fields found in java.sql.Types, JMeter also accepts the corresponding integer number, e.g. since OracleTypes.CURSOR == -10, you can use "INOUT -10".

There must be as many types as there are placeholders in the statement.
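A hypothetical sketch of how the parameter value and type lists could be interpreted: a CSV-style splitter for the values, and a resolver that looks type names up as fields of java.sql.Types via reflection, falling back to an integer such as -10. JdbcParamListDemo and its methods are illustrative names, not JMeter's implementation.

```java
import java.lang.reflect.Field;
import java.sql.Types;
import java.util.ArrayList;
import java.util.List;

// Hypothetical helpers for the value-list quoting rule and the type-list rule.
class JdbcParamListDemo {
    // Splits on commas; a double-quoted value may contain commas, and ""
    // inside quotes stands for a single double-quote character.
    static List<String> parseValues(String list) {
        List<String> values = new ArrayList<>();
        StringBuilder current = new StringBuilder();
        boolean inQuotes = false;
        for (int i = 0; i < list.length(); i++) {
            char c = list.charAt(i);
            if (inQuotes) {
                if (c == '"' && i + 1 < list.length() && list.charAt(i + 1) == '"') {
                    current.append('"'); // doubled-up quote -> one literal quote
                    i++;
                } else if (c == '"') {
                    inQuotes = false;    // closing quote
                } else {
                    current.append(c);   // commas are literal inside quotes
                }
            } else if (c == '"') {
                inQuotes = true;
            } else if (c == ',') {
                values.add(current.toString());
                current.setLength(0);
            } else {
                current.append(c);
            }
        }
        values.add(current.toString());
        return values;
    }

    static final class TypeSpec {
        final String direction; // IN, OUT or INOUT
        final int sqlType;      // a java.sql.Types constant or a raw integer
        TypeSpec(String direction, int sqlType) {
            this.direction = direction;
            this.sqlType = sqlType;
        }
    }

    // Parses e.g. "VARCHAR", "OUT INTEGER" or "INOUT -10".
    static TypeSpec parseType(String spec) {
        String[] parts = spec.trim().split("\\s+");
        String direction = "IN"; // default when no direction is given
        String type = parts[0];
        if (parts.length > 1) {
            direction = parts[0].toUpperCase();
            type = parts[1];
        }
        return new TypeSpec(direction, resolve(type));
    }

    static int resolve(String type) {
        try {
            Field field = Types.class.getField(type.toUpperCase());
            return field.getInt(null); // static field, so no instance is needed
        } catch (NoSuchFieldException | IllegalAccessException e) {
            // Not a java.sql.Types name: accept a numeric code such as -10.
            // Throws NumberFormatException for anything else.
            return Integer.parseInt(type);
        }
    }

    public static void main(String[] args) {
        System.out.println(parseValues("tablename,filename")); // [tablename, filename]
        TypeSpec t = parseType("INOUT -10");
        System.out.println(t.direction + " " + t.sqlType);    // INOUT -10
    }
}
```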

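If a result variable name is set, the sampler stores an Object variable holding a list of row maps, each map having the column name as key and the column data as value. A value can then be read in a script, for example:

columnValue = vars.getObject("resultObject").get(0).get("Column Name");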
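In plain Java, the structure behind that call is a list with one map per row. The sketch below builds such a list by hand as a stand-in for vars.getObject("resultObject"); the column names and values are made-up sample data, and ResultObjectDemo is a hypothetical name.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Stand-in for the row-map list; in a JMeter script it would come from vars.
class ResultObjectDemo {
    static List<Map<String, Object>> sampleRows() {
        List<Map<String, Object>> rows = new ArrayList<>();
        Map<String, Object> first = new LinkedHashMap<>(); // one map per row
        first.put("id", 23);
        first.put("Column Name", "first value");           // column name -> data
        rows.add(first);
        return rows;
    }

    public static void main(String[] args) {
        List<Map<String, Object>> resultObject = sampleRows();
        // Same access pattern as the script line above: row 0, then column by name.
        Object columnValue = resultObject.get(0).get("Column Name");
        System.out.println(columnValue); // first value
    }
}
```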

- Store As String (default) - All variables on the Variable Names list are stored as strings; a ResultSet present on the list will not be iterated through. CLOBs will be converted to Strings. BLOBs will be converted to Strings as if they were a UTF-8 encoded byte array. Both CLOBs and BLOBs will be cut off after jdbcsampler.max_retain_result_size bytes.

- Store As Object - Variables of ResultSet type on the Variable Names list will be stored as Object and can be accessed in subsequent tests/scripts and iterated; the ResultSet will not be iterated through. CLOBs will be handled as if Store As String was selected. BLOBs will be stored as a byte array. Both CLOBs and BLOBs will be cut off after jdbcsampler.max_retain_result_size bytes.

- Count Records - Variables of ResultSet types will be iterated through showing the count of records as result. Variables will be stored as Strings. For BLOBs the size of the object will be stored.

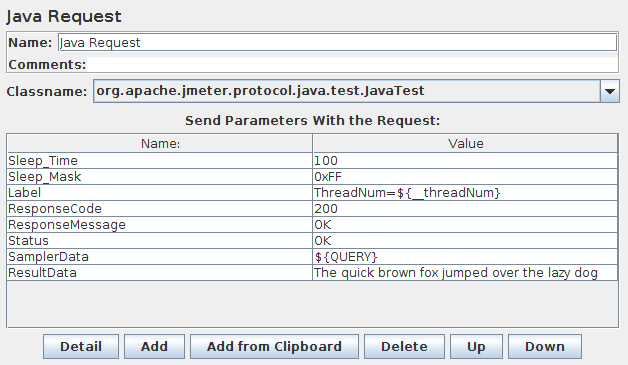

Java Request

This sampler lets you control a java class that implements the org.apache.jmeter.protocol.java.sampler.JavaSamplerClient interface. By writing your own implementation of this interface, you can use JMeter to harness multiple threads, input parameter control, and data collection.

The pull-down menu provides the list of all such implementations found by JMeter in its classpath. The parameters can then be specified in the table below - as defined by your implementation. Two simple examples (JavaTest and SleepTest) are provided.

The JavaTest example sampler can be useful for checking test plans, because it allows one to set values in almost all the fields. These can then be used by Assertions, etc. The fields allow variables to be used, so the values of these can readily be seen.

Parameters

The following parameters apply to the SleepTest and JavaTest implementations:

Parameters

The sleep time is calculated as follows:

totalSleepTime = SleepTime + (System.currentTimeMillis() % SleepMask)
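A direct transcription of the formula, with example values. SleepTimeDemo is a hypothetical name, and the guard for a non-positive mask is an added assumption (a zero mask would otherwise make the modulo throw ArithmeticException).

```java
// Hypothetical re-statement of the SleepTest formula; the modulo against the
// current time adds a varying offset of 0..SleepMask-1 milliseconds.
class SleepTimeDemo {
    static long totalSleepTime(long sleepTime, long sleepMask, long nowMillis) {
        if (sleepMask <= 0) {
            return sleepTime; // assumption: treat a non-positive mask as "no variation"
        }
        return sleepTime + (nowMillis % sleepMask);
    }

    public static void main(String[] args) {
        // Example values: 100 ms base plus 0..254 ms of variation (mask 255).
        long ms = totalSleepTime(100, 255, System.currentTimeMillis());
        System.out.println("would sleep " + ms + " ms");
    }
}
```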

The following parameters apply additionally to the JavaTest implementation:

Parameters

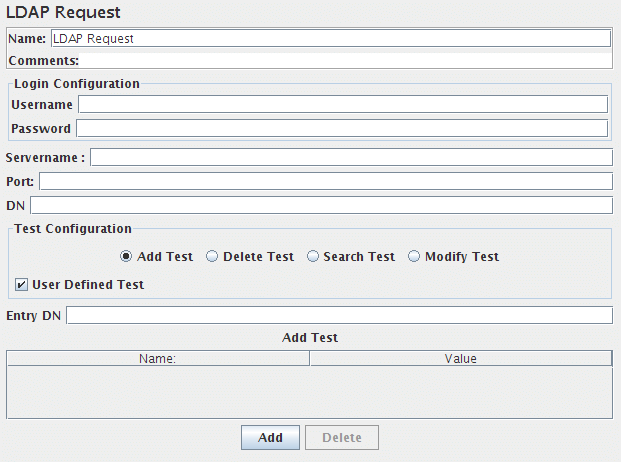

LDAP Request

If you are going to send multiple requests to the same LDAP server, consider using an LDAP Request Defaults Configuration Element so you do not have to enter the same information for each LDAP Request.

Similarly, the Login Config Element can be used to supply the login and password. There are two ways to create test cases for testing an LDAP Server.

- Inbuilt Test cases.

- User defined Test cases.

There are four test scenarios of testing LDAP. The tests are given below:

- Add Test

  - Inbuilt test: This will add a pre-defined entry in the LDAP Server and calculate the execution time. After execution of the test, the created entry will be deleted from the LDAP Server.

  - User defined test: This will add the entry in the LDAP Server. The user has to enter all the attributes in the table. The entries are collected from the table to add. The execution time is calculated. The created entry will not be deleted after the test.

- Modify Test

  - Inbuilt test: This will create a pre-defined entry first, then modify the created entry in the LDAP Server and calculate the execution time. After execution of the test, the created entry will be deleted from the LDAP Server.

  - User defined test: This will modify the entry in the LDAP Server. The user has to enter all the attributes in the table. The entries are collected from the table to modify. The execution time is calculated. The entry will not be deleted from the LDAP Server.

- Search Test

  - Inbuilt test: This will create the entry first, then search whether the attributes are available. It calculates the execution time of the search query. At the end of the execution, the created entry will be deleted from the LDAP Server.

  - User defined test: This will search for the user-defined entry (Search filter) in the Search base (again, defined by the user). The entries should be available in the LDAP Server. The execution time is calculated.

- Delete Test

  - Inbuilt test: This will create a pre-defined entry first, then delete it from the LDAP Server. The execution time is calculated.

  - User defined test: This will delete the user-defined entry in the LDAP Server. The entries should be available in the LDAP Server. The execution time is calculated.

Parameters

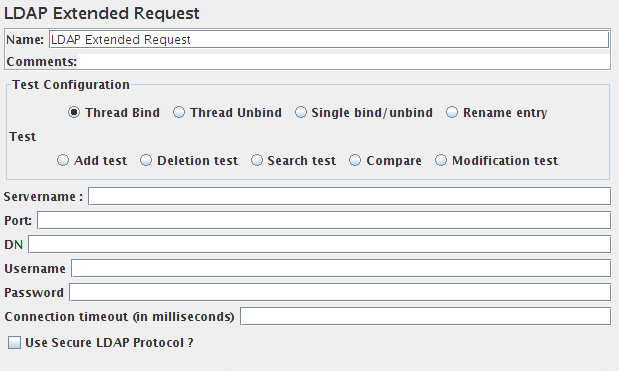

LDAP Extended Request

If you are going to send multiple requests to the same LDAP server, consider using an LDAP Extended Request Defaults Configuration Element so you do not have to enter the same information for each LDAP Request.

There are nine test operations defined. These operations are given below:

- Thread bind

Any LDAP request is part of an LDAP session, so the first thing that should be done is starting a session to the LDAP server. For starting this session a thread bind is used, which is equal to the LDAP "bind" operation. The user is requested to give a username (distinguished name) and password, which will be used to initiate a session. If no password, or the wrong password, is specified, an anonymous session is started. Take care: omitting the password will not fail this test, but a wrong password will. (N.B. the password is stored unencrypted in the test plan.)

Parameters

| Attribute | Description | Required |
|---|---|---|
| Name | Descriptive name for this sampler that is shown in the tree. | No |
| Servername | The name (or IP address) of the LDAP server. | Yes |
| Port | The port number that the LDAP server is listening to. If this is omitted, JMeter assumes the LDAP server is listening on the default port (389). | No |
| DN | The distinguished name of the base object that will be used for any subsequent operation. It can be used as a starting point for all operations. You cannot start any operation on a higher level than this DN! | No |
| Username | Full distinguished name of the user as which you want to bind. | No |
| Password | Password for the above user. If omitted, it will result in an anonymous bind. If it is incorrect, the sampler will return an error and revert to an anonymous bind. (N.B. this is stored unencrypted in the test plan.) | No |
| Connection timeout (in milliseconds) | Timeout for the connection; if exceeded, the connection will be aborted. | No |
| Use Secure LDAP Protocol | Use the ldaps:// scheme instead of ldap://. | No |
| Trust All Certificates | Trust all certificates; only used if Use Secure LDAP Protocol is checked. | No |

- Thread unbind

This is simply the operation to end a session. It is equal to the LDAP "unbind" operation.

Parameters

| Attribute | Description | Required |
|---|---|---|
| Name | Descriptive name for this sampler that is shown in the tree. | No |

- Single bind/unbind

This is a combination of the LDAP "bind" and "unbind" operations. It can be used for an authentication request/password check for any user. It will open a new session, just to check the validity of the user/password combination, and end the session again.

Parameters

| Attribute | Description | Required |
|---|---|---|
| Name | Descriptive name for this sampler that is shown in the tree. | No |
| Username | Full distinguished name of the user as which you want to bind. | Yes |
| Password | Password for the above user. If omitted, it will result in an anonymous bind. If it is incorrect, the sampler will return an error. (N.B. this is stored unencrypted in the test plan.) | No |

- Rename entry

This is the LDAP "moddn" operation. It can be used to rename an entry, but also for moving an entry or a complete subtree to a different place in the LDAP tree.

Parameters

| Attribute | Description | Required |
|---|---|---|
| Name | Descriptive name for this sampler that is shown in the tree. | No |
| Old entry name | The current distinguished name of the object you want to rename or move, relative to the given DN in the thread bind operation. | Yes |
| New distinguished name | The new distinguished name of the object you want to rename or move, relative to the given DN in the thread bind operation. | Yes |

- Add test

This is the LDAP "add" operation. It can be used to add any kind of object to the LDAP server.

Parameters

| Attribute | Description | Required |
|---|---|---|
| Name | Descriptive name for this sampler that is shown in the tree. | No |
| Entry DN | Distinguished name of the object you want to add, relative to the given DN in the thread bind operation. | Yes |
| Add test | A list of attributes and their values you want to use for the object. To add a multi-valued attribute, add the same attribute several times to the list, once with each of its values. | Yes |

- Delete test

This is the LDAP "delete" operation; it can be used to delete an object from the LDAP tree.

Parameters

| Attribute | Description | Required |
|---|---|---|
| Name | Descriptive name for this sampler that is shown in the tree. | No |
| Delete | Distinguished name of the object you want to delete, relative to the given DN in the thread bind operation. | Yes |

- Search test

This is the LDAP "search" operation, and will be used for defining searches.

Parameters

| Attribute | Description | Required |
|---|---|---|
| Name | Descriptive name for this sampler that is shown in the tree. | No |
| Search base | Distinguished name of the subtree you want your search to look in, relative to the given DN in the thread bind operation. | No |
| Search Filter | Search filter; must be specified in LDAP syntax. | Yes |
| Scope | Use 0 for a base object search, 1 for a one-level search and 2 for a subtree search. (Default=0) | No |
| Size Limit | Specify the maximum number of results you want back from the server (default=0, which means no limit). When the sampler hits the maximum number of results, it will fail with errorcode 4. | No |
| Time Limit | Specify the maximum amount of (CPU) time (in milliseconds) that the server can spend on your search. Take care: this does not say anything about the response time (default is 0, which means no limit). | No |
| Attributes | Specify the attributes you want to have returned, separated by a semicolon. An empty field will return all attributes. | No |
| Return object | Whether the object will be returned (true) or not (false). Default=false | No |
| Dereference aliases | If true, it will dereference aliases; if false, it will not follow them (default=false). | No |
| Parse the search results? | If true, the search results will be added to the response data. If false, a marker (whether results were found or not) will be added to the response data. | No |

- Modification test

This is the LDAP "modify" operation. It can be used to modify an object: to add, delete or replace values of an attribute.

Parameters

| Attribute | Description | Required |
|---|---|---|
| Name | Descriptive name for this sampler that is shown in the tree. | No |
| Entry name | Distinguished name of the object you want to modify, relative to the given DN in the thread bind operation. | Yes |
| Modification test | The attribute-value-opCode triples. The opCode can be any valid LDAP operationCode (add, delete, remove or replace). If you don't specify a value with a delete operation, all values of the given attribute will be deleted. If you do specify a value in a delete operation, only the given value will be deleted. If this value is non-existent, the sampler will fail the test. | Yes |

- Compare

This is the LDAP "compare" operation. It can be used to compare the value of a given attribute with some already known value. In practice this is mostly used to check whether a given person is a member of some group. In such a case you can compare the DN of the user, as a given value, with the values in the attribute "member" of an object of the type groupOfNames. If the compare operation fails, this test fails with errorcode 49.

Parameters

| Attribute | Description | Required |
|---|---|---|
| Name | Descriptive name for this sampler that is shown in the tree. | No |
| Entry DN | The current distinguished name of the object of which you want to compare an attribute, relative to the given DN in the thread bind operation. | Yes |
| Compare filter | In the form "attribute=value". | Yes |
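Assuming a JNDI-based client, the Search test and Modification test fields above map naturally onto the standard javax.naming.directory types. The sketch below only builds those objects (no server connection is made); LdapParamsDemo and its helper names are hypothetical, and mapping both "delete" and "remove" to REMOVE_ATTRIBUTE is an assumption.

```java
import javax.naming.directory.BasicAttribute;
import javax.naming.directory.DirContext;
import javax.naming.directory.ModificationItem;
import javax.naming.directory.SearchControls;

// Sketch: Search test fields -> SearchControls, Modification test triples
// -> ModificationItem. Builds objects only; nothing is sent to a server.
class LdapParamsDemo {
    // Scope 0/1/2 matches SearchControls.OBJECT_SCOPE / ONELEVEL_SCOPE /
    // SUBTREE_SCOPE. An empty attribute field (null array) returns all attributes.
    static SearchControls searchControls(int scope, long sizeLimit, int timeLimitMs,
                                         String attributes, boolean returnObject,
                                         boolean derefAliases) {
        String[] attrs = attributes.isEmpty() ? null : attributes.split(";");
        return new SearchControls(scope, sizeLimit, timeLimitMs, attrs, returnObject, derefAliases);
    }

    // An attribute without a value removes every value on delete; with a
    // value, only that value is removed.
    static ModificationItem modification(String attribute, String value, String opCode) {
        BasicAttribute attr = value.isEmpty()
                ? new BasicAttribute(attribute)
                : new BasicAttribute(attribute, value);
        int op;
        switch (opCode.toLowerCase()) {
            case "add":
                op = DirContext.ADD_ATTRIBUTE;
                break;
            case "replace":
                op = DirContext.REPLACE_ATTRIBUTE;
                break;
            case "delete":
            case "remove":
                op = DirContext.REMOVE_ATTRIBUTE;
                break;
            default:
                throw new IllegalArgumentException("unknown opCode: " + opCode);
        }
        return new ModificationItem(op, attr);
    }

    public static void main(String[] args) {
        SearchControls sc = searchControls(2, 10, 0, "cn;mail", false, false);
        System.out.println(sc.getSearchScope() == SearchControls.SUBTREE_SCOPE); // true
        ModificationItem mi = modification("member", "", "delete");
        System.out.println(mi.getModificationOp() == DirContext.REMOVE_ATTRIBUTE); // true
    }
}
```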

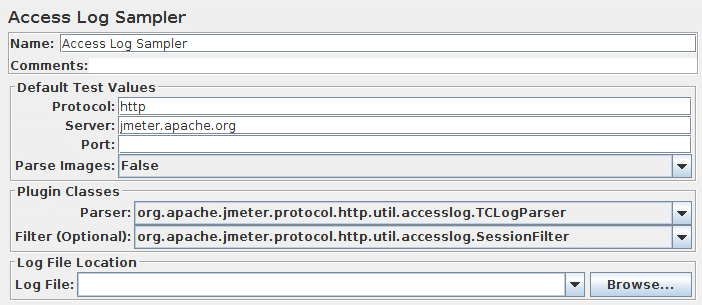

Access Log Sampler

AccessLogSampler was designed to read access logs and generate HTTP requests. For those not familiar with the access log, it is the log the web server maintains of every request it accepted. This means every image, CSS file, JavaScript file, HTML file, …

Tomcat uses the common format for access logs. This means any web server that uses the common log format can use the AccessLogSampler. Servers that use the common log format include: Tomcat, Resin, WebLogic, and SunOne. Common log format looks like this:

127.0.0.1 - - [21/Oct/2003:05:37:21 -0500] "GET /index.jsp?%2Findex.jsp= HTTP/1.1" 200 8343
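A minimal sketch of how a line like the one above can be split into its fields with a regular expression. CommonLogLineDemo is a hypothetical helper, not JMeter's TCLogParser, and the pattern assumes a well-formed line with a three-field request string.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Illustrative parser for one Common Log Format line:
// host ident authuser [date] "method path protocol" status bytes
class CommonLogLineDemo {
    private static final Pattern CLF = Pattern.compile(
            "^(\\S+) (\\S+) (\\S+) \\[([^\\]]+)\\] \"(\\S+) (\\S+) (\\S+)\" (\\d{3}) (\\d+|-)$");

    static final class Entry {
        final String host;
        final String method;
        final String path;
        final int status;

        Entry(String host, String method, String path, int status) {
            this.host = host;
            this.method = method;
            this.path = path;
            this.status = status;
        }
    }

    // Returns null when the line is not in Common Log Format.
    static Entry parse(String line) {
        Matcher m = CLF.matcher(line);
        if (!m.matches()) {
            return null;
        }
        return new Entry(m.group(1), m.group(5), m.group(6), Integer.parseInt(m.group(8)));
    }

    public static void main(String[] args) {
        Entry e = parse("127.0.0.1 - - [21/Oct/2003:05:37:21 -0500] "
                + "\"GET /index.jsp?%2Findex.jsp= HTTP/1.1\" 200 8343");
        // Entries whose status is not 200 could be skipped by checking e.status.
        System.out.println(e.method + " " + e.path + " -> " + e.status);
        // GET /index.jsp?%2Findex.jsp= -> 200
    }
}
```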

For the future, it might be nice to filter out entries that do not have a response code of 200. Extending the sampler should be fairly simple. There are two interfaces you have to implement:

- org.apache.jmeter.protocol.http.util.accesslog.LogParser

- org.apache.jmeter.protocol.http.util.accesslog.Generator

The current implementation of AccessLogSampler uses the generator to create a new HTTPSampler. The servername, port and get images are set by AccessLogSampler. Next, the parser is called with integer 1, telling it to parse one entry. After that, HTTPSampler.sample() is called to make the request.

    samp = (HTTPSampler) GENERATOR.generateRequest();
    samp.setDomain(this.getDomain());
    samp.setPort(this.getPort());
    samp.setImageParser(this.isImageParser());
    PARSER.parse(1);
    res = samp.sample();
    res.setSampleLabel(samp.toString());

The required methods in LogParser are:

- setGenerator(Generator)

- parse(int)

Classes implementing Generator interface should provide concrete implementation for all the methods. For an example of how to implement either interface, refer to StandardGenerator and TCLogParser.

(Beta Code)

Parameters

The TCLogParser processes the access log independently for each thread. The SharedTCLogParser and OrderPreservingLogParser share access to the file, i.e. each thread gets the next entry in the log.

The SessionFilter is intended to handle Cookies across threads. It does not filter out any entries, but modifies the cookie manager so that the cookies for a given IP are processed by a single thread at a time. If two threads try to process samples from the same client IP address, then one will be forced to wait until the other has completed.

The LogFilter is intended to allow access log entries to be filtered by filename and regex, as well as allowing for the replacement of file extensions. However, it is not currently possible to configure this via the GUI, so it cannot really be used.

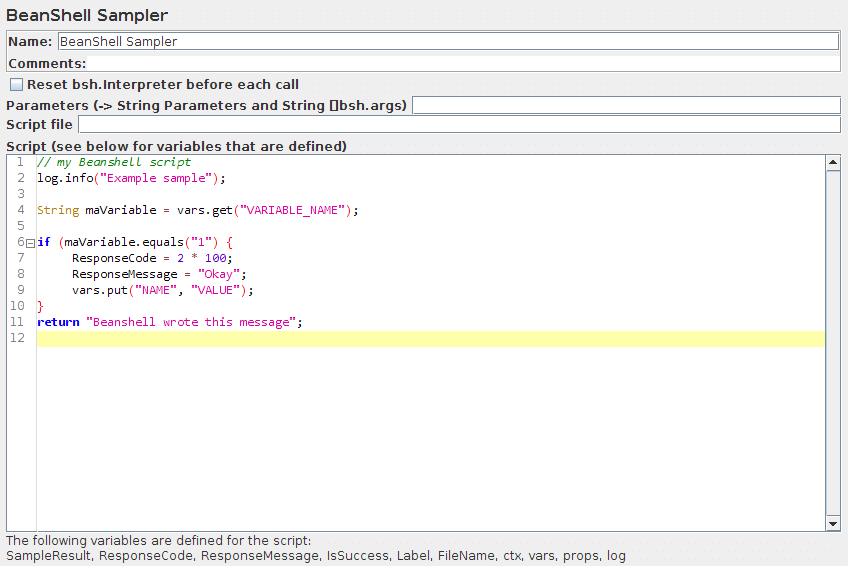

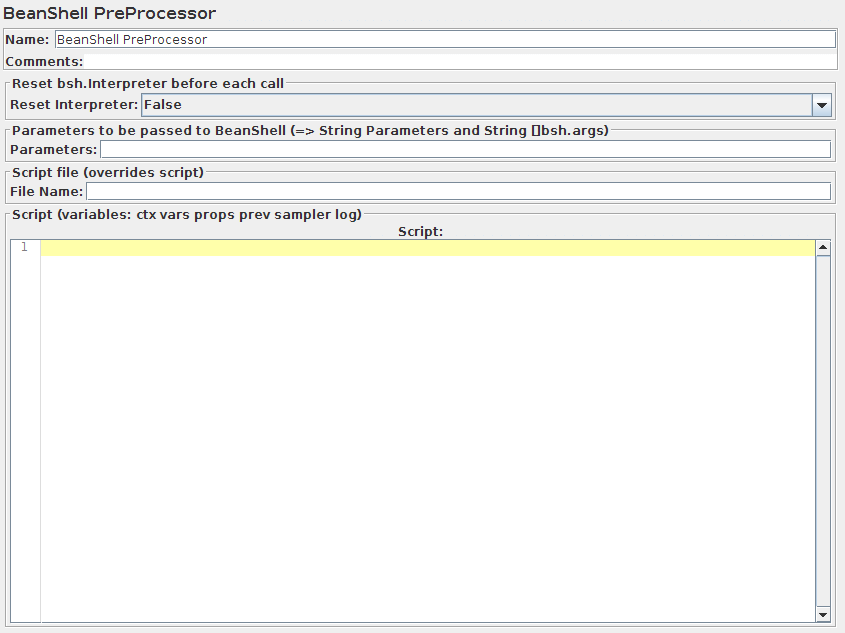

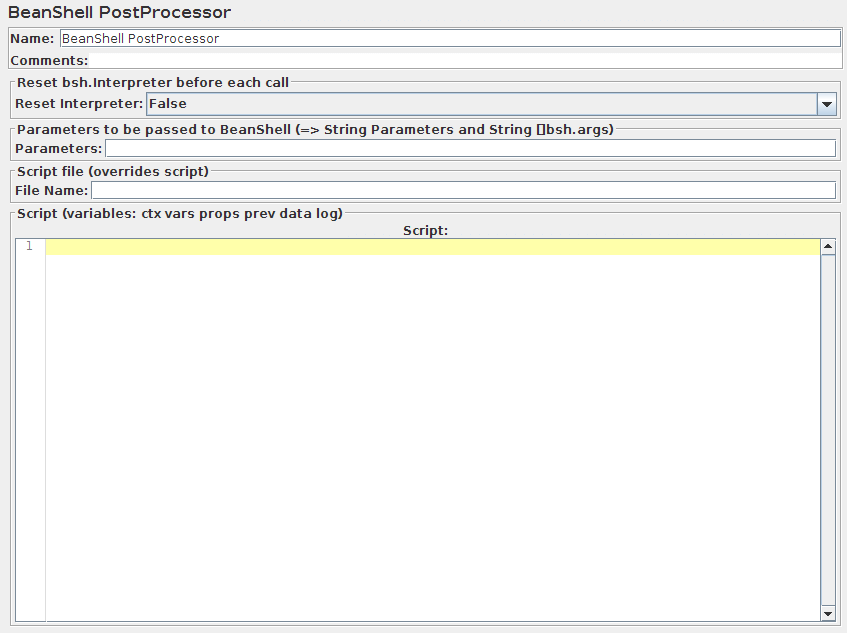

BeanShell Sampler¶

This sampler allows you to write a sampler using the BeanShell scripting language.

For full details on using BeanShell, please see the BeanShell website.

The test element supports the ThreadListener and TestListener interface methods. These must be defined in the initialisation file. See the file BeanShellListeners.bshrc for example definitions.

The BeanShell sampler also supports the Interruptible interface. The interrupt() method can be defined in the script or the init file.

Parameters ¶

- Parameters

- String containing the parameters as a single variable

- bsh.args

- String array containing parameters, split on white-space

If the property "beanshell.sampler.init" is defined, it is passed to the Interpreter as the name of a sourced file. This can be used to define common methods and variables. There is a sample init file in the bin directory: BeanShellSampler.bshrc.

If a script file is supplied, it will be used; otherwise the script text will be used.

BeanShell does not currently support Java 5 syntax such as generics and the enhanced for loop.

Before invoking the script, some variables are set up in the BeanShell interpreter:

The contents of the Parameters field are put into the variable "Parameters". The string is also split into separate tokens using a single space as the separator, and the resulting list is stored in the String array bsh.args.

The full list of BeanShell variables that is set up is as follows:

- log - the Logger

- Label - the Sampler label

- FileName - the file name, if any

- Parameters - text from the Parameters field

- bsh.args - the parameters, split as described above

- SampleResult - pointer to the current SampleResult

- ResponseCode defaults to 200

- ResponseMessage defaults to "OK"

- IsSuccess defaults to true

- ctx - JMeterContext

- vars - JMeterVariables - e.g. vars.get("VAR1"); vars.put("VAR2","value"); vars.remove("VAR3"); vars.putObject("OBJ1",new Object());

- props - JMeterProperties (class java.util.Properties) - e.g. props.get("START.HMS"); props.put("PROP1","1234");

When the script completes, control is returned to the Sampler, and it copies the contents of the following script variables into the corresponding variables in the SampleResult:

- ResponseCode - for example 200

- ResponseMessage - for example "OK"

- IsSuccess - true or false

The SampleResult ResponseData is set from the return value of the script. If the script returns null, it can set the response directly, by using the method SampleResult.setResponseData(data), where data is either a String or a byte array. The data type defaults to "text", but can be set to binary by using the method SampleResult.setDataType(SampleResult.BINARY).

The SampleResult variable gives the script full access to all the fields and methods in the SampleResult. For example, the script has access to the methods setStopThread(boolean) and setStopTest(boolean). Here is a simple (not very useful!) example script:

```java
if (bsh.args[0].equalsIgnoreCase("StopThread")) {
    log.info("Stop Thread detected!");
    SampleResult.setStopThread(true);
}
return "Data from sample with Label " + Label;

// or, instead of returning the data, set it directly and return null:
// SampleResult.setResponseData("My data");
// return null;
```

Another example: ensure that the property beanshell.sampler.init=BeanShellSampler.bshrc is defined in jmeter.properties. The following script will then show the values of all the variables in the ResponseData field:

return getVariables();

For details on the methods available for the various classes (JMeterVariables, SampleResult etc.) please check the Javadoc or the source code. Beware however that misuse of any methods can cause subtle faults that may be difficult to find.

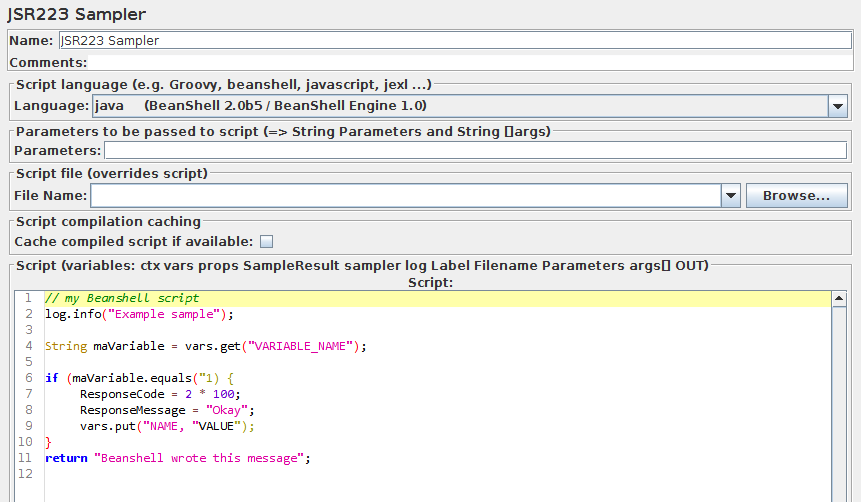

JSR223 Sampler¶

The JSR223 Sampler allows JSR223 script code to be used to perform a sample or some computation required to create/update variables.

If you do not want this sampler to produce a reported SampleResult, the script can call:

SampleResult.setIgnore();

This call will have the following impact:

- SampleResult will not be delivered to SampleListeners like View Results Tree, Summariser ...

- SampleResult will not be evaluated by Assertions or PostProcessors

- SampleResult will still be used to compute the last sample status (${JMeterThread.last_sample_ok}) and the ThreadGroup "Action to be taken after a Sampler error" (since JMeter 5.4)

The JSR223 test elements have a feature (compilation) that can significantly increase performance. To benefit from this feature:

- Use Script files instead of inlining them. JMeter will then compile and cache them if this feature is available on the ScriptEngine.

- Or use Script Text and check the Cache compiled script if available property.

When using this feature, ensure your script code does not use JMeter variables or JMeter function calls directly, as caching would only cache the first replacement. Instead, use script parameters.

To benefit from caching and compilation, the language engine used for scripting must implement the JSR223 Compilable interface (Groovy is one of these; java, beanshell and javascript are not).

When using Groovy as the scripting language without checking Cache compiled script if available (caching is recommended), you should set the JVM property -Dgroovy.use.classvalue=true because of a Groovy memory leak as of version 2.4.6, see:

The size of the compiled-script cache is set by the following JMeter property:

jsr223.compiled_scripts_cache_size=100
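Why ${...} references in cached script text are a problem can be shown with a toy model. The sketch below is not JMeter's cache: the class CompiledScriptCache, its key strings and the stand-in "compile" step are hypothetical, and it assumes the cache is keyed per test element. It compiles a script only the first time its key is seen and keeps at most a fixed number of entries (least recently used first out), playing the role of the size limit above.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.function.Supplier;

/** Toy model of a bounded compiled-script cache; not JMeter's implementation. */
final class CompiledScriptCache {
    private final Map<String, Supplier<String>> cache;

    CompiledScriptCache(int maxSize) {
        // access-ordered map that evicts the least recently used entry beyond maxSize
        this.cache = new LinkedHashMap<String, Supplier<String>>(16, 0.75f, true) {
            @Override
            protected boolean removeEldestEntry(Map.Entry<String, Supplier<String>> eldest) {
                return size() > maxSize;
            }
        };
    }

    /** "Compiles" the script text only the first time the key is seen. */
    Supplier<String> get(String key, String scriptText) {
        return cache.computeIfAbsent(key, k -> compile(scriptText));
    }

    int size() {
        return cache.size();
    }

    // Stand-in for real compilation: the "compiled script" just returns its own text.
    private static Supplier<String> compile(String scriptText) {
        return () -> scriptText;
    }
}
```

If a variable value is substituted into the text before compilation ("Hello Alice"), a later run of the same element with a new value ("Hello Bob") still gets the cached "Hello Alice" script. Reading the value inside the script, for example with vars.get(...), or passing it as a script parameter keeps the script text constant, so the cached version stays correct.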

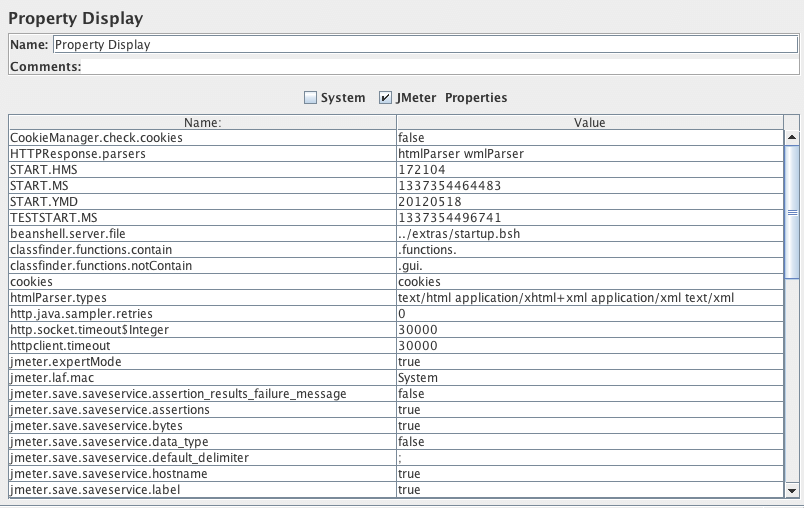


Parameters ¶

Note that some languages, such as Velocity, may use a different syntax for JSR223 variables, e.g. for Velocity:

$log.debug("Hello " + $vars.get("a"));

If a script file is supplied, it will be used; otherwise the script text will be used.

Before invoking the script, some variables are set up. Note that these are JSR223 variables - i.e. they can be used directly in the script.

- log - the Logger

- Label - the Sampler label

- FileName - the file name, if any

- Parameters - text from the Parameters field

- args - the Parameters text, split into a String array on spaces

- SampleResult - pointer to the current SampleResult

- sampler - (Sampler) - pointer to current Sampler

- ctx - JMeterContext

- vars - JMeterVariables - e.g. vars.get("VAR1"); vars.put("VAR2","value"); vars.remove("VAR3"); vars.putObject("OBJ1",new Object());

- props - JMeterProperties (class java.util.Properties) - e.g. props.get("START.HMS"); props.put("PROP1","1234");

- OUT - System.out - e.g. OUT.println("message")

The SampleResult ResponseData is set from the return value of the script. If the script returns null, it can set the response directly, by using the method SampleResult.setResponseData(data), where data is either a String or a byte array. The data type defaults to "text", but can be set to binary by using the method SampleResult.setDataType(SampleResult.BINARY).

The SampleResult variable gives the script full access to all the fields and methods in the SampleResult. For example, the script has access to the methods setStopThread(boolean) and setStopTest(boolean).

Unlike the BeanShell Sampler, the JSR223 Sampler does not set the ResponseCode, ResponseMessage and sample status via script variables. Currently the only way to change these is via the SampleResult methods:

- SampleResult.setSuccessful(true/false)

- SampleResult.setResponseCode("code")

- SampleResult.setResponseMessage("message")

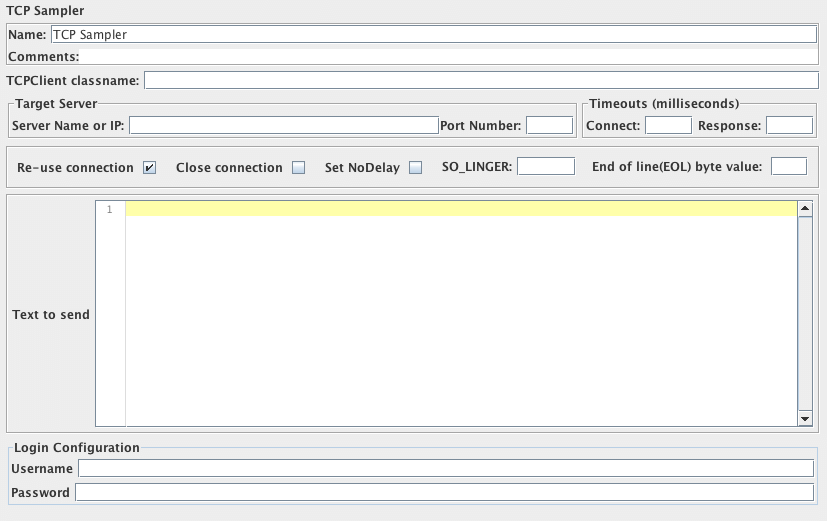

TCP Sampler¶

The TCP Sampler opens a TCP/IP connection to the specified server. It then sends the text, and waits for a response.

If "Re-use connection" is selected, connections are shared between Samplers in the same thread, provided that the exact same host name string and port are used. Different host/port combinations will use different connections, as will different threads. If both "Re-use connection" and "Close connection" are selected, the socket will be closed after running the sampler, and another socket will be created for the next sampler. You may want to close a socket at the end of each thread loop.

If an error is detected - or "Re-use connection" is not selected - the socket is closed. Another socket will be reopened on the next sample.

The following properties can be used to control its operation:

- tcp.status.prefix

- text that precedes a status number

- tcp.status.suffix

- text that follows a status number

- tcp.status.properties

- name of property file to convert status codes to messages

- tcp.handler

- Name of TCP Handler class (default TCPClientImpl) - only used if not specified on the GUI

Users can provide their own implementation. The class must extend org.apache.jmeter.protocol.tcp.sampler.TCPClient.

The following implementations are currently provided.

- TCPClientImpl

- BinaryTCPClientImpl

- LengthPrefixedBinaryTCPClientImpl

- TCPClientImpl

- This implementation is fairly basic. When reading the response, it reads until the end-of-line byte, if this is defined by setting the property tcp.eolByte, otherwise until the end of the input stream. You can control the charset encoding by setting tcp.charset, which defaults to the platform default encoding.

- BinaryTCPClientImpl

- This implementation converts the GUI input, which must be a hex-encoded string, into binary, and performs the reverse when reading the response. When reading the response, it reads until the end of message byte, if this is defined by setting the property tcp.BinaryTCPClient.eomByte, otherwise until the end of the input stream.

- LengthPrefixedBinaryTCPClientImpl

- This implementation extends BinaryTCPClientImpl by prefixing the binary message data with a binary length prefix. The length prefix defaults to 2 bytes; this can be changed by setting the property tcp.binarylength.prefix.length.

- Timeout handling

- If the timeout is set, the read will be terminated when this expires. So if you are using an eolByte/eomByte, make sure the timeout is sufficiently long, otherwise the read will be terminated early.

- Response handling

- If tcp.status.prefix is defined, then the response message is searched for the text following that prefix, up to the suffix. If any such text is found, it is used to set the response code. The response message is then fetched from the properties file (if provided).

Response codes in the range "400"-"499" and "500"-"599" are currently regarded as failures; all others are successful. [This needs to be made configurable!]

Usage of pre- and suffix¶

For example, if the prefix = "[" and the suffix = "]", then the following response:

[J28] XI123,23,GBP,CR

would have the response code J28.
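The prefix/suffix lookup and the failure ranges described above can be sketched in a few lines of plain Java. The class TcpStatus is hypothetical, not the TCP Sampler's code; treating the extracted code numerically (so non-numeric codes such as J28 count as success) is an assumption.

```java
/** Hypothetical sketch of response-code extraction via a status prefix and suffix. */
final class TcpStatus {
    /** Returns the text between the first prefix and the following suffix, or null if absent. */
    static String extractCode(String response, String prefix, String suffix) {
        int start = response.indexOf(prefix);
        if (start < 0) {
            return null;
        }
        start += prefix.length();
        int end = response.indexOf(suffix, start);
        return end < 0 ? null : response.substring(start, end);
    }

    /** Codes 400-499 and 500-599 count as failures; anything else (including non-numeric codes) succeeds. */
    static boolean isFailure(String code) {
        if (code == null) {
            return false;
        }
        try {
            int n = Integer.parseInt(code.trim());
            return n >= 400 && n <= 599;
        } catch (NumberFormatException e) {
            return false;
        }
    }
}
```

With prefix "[" and suffix "]", extractCode("[J28] XI123,23,GBP,CR", "[", "]") returns "J28", which isFailure treats as a success.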

Sockets are disconnected at the end of a test run.
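The framing performed by BinaryTCPClientImpl and LengthPrefixedBinaryTCPClientImpl, as described above, amounts to decoding the hex string from the GUI into bytes and then prepending the payload length. The sketch below is illustrative only: the class LengthPrefixedHex is hypothetical, and the big-endian (most significant byte first) order of the length prefix is an assumption, not taken from the JMeter source.

```java
/** Hypothetical sketch: hex text to bytes, then a big-endian length prefix. */
final class LengthPrefixedHex {
    /** Decodes a hex string such as "0A0B0C" into bytes. */
    static byte[] hexToBytes(String hex) {
        if (hex.length() % 2 != 0) {
            throw new IllegalArgumentException("odd-length hex string");
        }
        byte[] out = new byte[hex.length() / 2];
        for (int i = 0; i < out.length; i++) {
            int hi = Character.digit(hex.charAt(2 * i), 16);
            int lo = Character.digit(hex.charAt(2 * i + 1), 16);
            if (hi < 0 || lo < 0) {
                throw new IllegalArgumentException("not a hex digit near index " + 2 * i);
            }
            out[i] = (byte) ((hi << 4) | lo);
        }
        return out;
    }

    /** Prepends the payload length using prefixLength bytes (1 to 4). */
    static byte[] frame(String hex, int prefixLength) {
        if (prefixLength < 1 || prefixLength > 4) {
            throw new IllegalArgumentException("prefix length must be 1 to 4 bytes");
        }
        byte[] payload = hexToBytes(hex);
        if (prefixLength < 4 && payload.length >= (1L << (8 * prefixLength))) {
            throw new IllegalArgumentException("payload too long for the length prefix");
        }
        byte[] framed = new byte[prefixLength + payload.length];
        int len = payload.length;
        for (int i = prefixLength - 1; i >= 0; i--) { // least significant byte goes last
            framed[i] = (byte) (len & 0xFF);
            len >>>= 8;
        }
        System.arraycopy(payload, 0, framed, prefixLength, payload.length);
        return framed;
    }
}
```

With the default 2-byte prefix, "0A0B0C" becomes the five bytes 00 03 0A 0B 0C.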

Parameters ¶

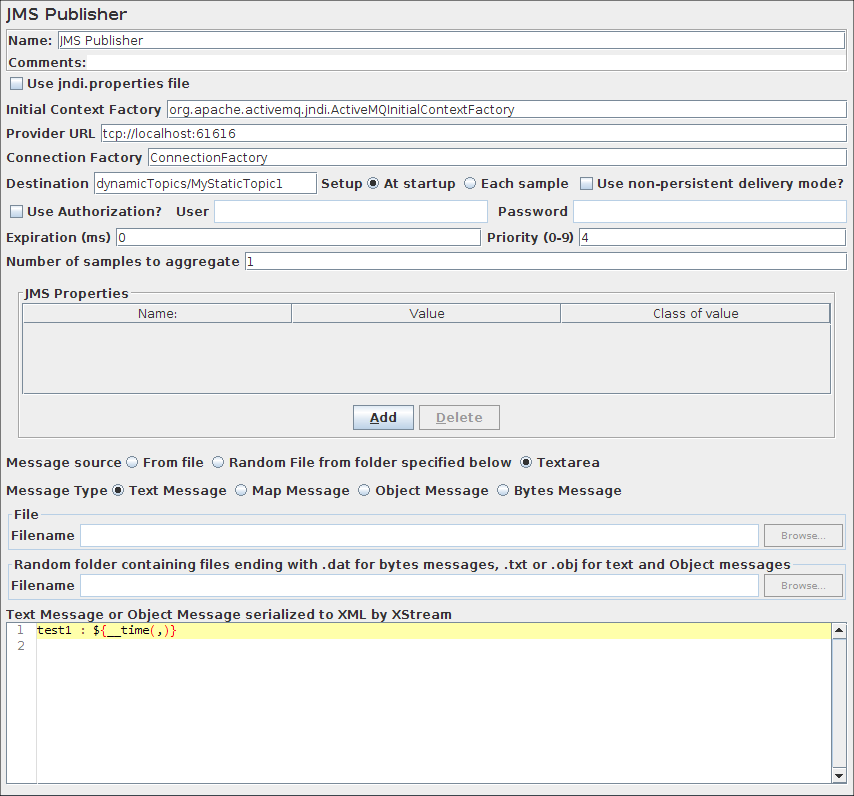

JMS Publisher¶

JMS Publisher will publish messages to a given destination (topic/queue). For those not familiar with JMS, it is the J2EE specification for messaging. There are numerous JMS servers on the market and several open source options.

Parameters ¶

- From File

- means the referenced file will be read and reused by all samples. Since JMeter 3.0, the file is reloaded if its name changes.

- Random File from folder specified below

- means a random file will be selected from folder specified below, this folder must contain either files with extension .dat for Bytes Messages, or files with extension .txt or .obj for Object or Text messages

- Text area

- The Message to use either for Text or Object message

- RAW:

- Variables in the file are not processed, and the file is loaded with the default system charset.

- DEFAULT:

- Load the file with the default system encoding, except for XML, which relies on the XML prolog. If the file contains variables, they will be processed.

- Standard charsets:

- The specified encoding (valid or not) is used for reading the file and processing variables

For the MapMessage type, JMeter reads the source as lines of text. Each line must have 3 fields, delimited by commas. The fields are:

- Name of entry

- Object class name, e.g. "String" (assumes java.lang package if not specified)

- Object string value

For example:

```
name,String,Example
size,Integer,1234
```
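The three-field line format above maps naturally onto a name-to-object map. The following plain-Java sketch (hypothetical class MapMessageLines, not JMeter's code) resolves a bare class name against java.lang and converts the value through the class's static valueOf(String) method; that conversion mechanism is an assumption chosen for illustration.

```java
import java.lang.reflect.Method;
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical parser for "name,Class,value" lines. */
final class MapMessageLines {
    static Map<String, Object> parse(String text) {
        Map<String, Object> entries = new LinkedHashMap<>();
        for (String line : text.split("\\R")) {
            if (line.trim().isEmpty()) {
                continue;
            }
            String[] f = line.split(",", 3); // limit 3: any further commas stay in the value
            if (f.length != 3) {
                throw new IllegalArgumentException("expected 3 fields: " + line);
            }
            entries.put(f[0].trim(), convert(f[1].trim(), f[2].trim()));
        }
        return entries;
    }

    /** Converts the value text to the named class; bare names are assumed to be in java.lang. */
    static Object convert(String className, String value) {
        String fqcn = className.contains(".") ? className : "java.lang." + className;
        try {
            Class<?> cls = Class.forName(fqcn);
            if (cls == String.class) {
                return value;
            }
            Method valueOf = cls.getMethod("valueOf", String.class);
            return valueOf.invoke(null, value);
        } catch (ReflectiveOperationException e) {
            throw new IllegalArgumentException("cannot convert '" + value + "' to " + fqcn, e);
        }
    }
}
```

For the two example lines, the map holds name → "Example" (a String) and size → 1234 (an Integer).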

For the Object message type, proceed as follows:

- Put the JAR that contains your object and its dependencies in the jmeter_home/lib/ folder

- Serialize your object as XML using XStream

- Either put result in a file suffixed with .txt or .obj or put XML content directly in Text Area

The following table shows some values which may be useful when configuring JMS:

| Apache ActiveMQ | Value(s) | Comment |
|---|---|---|
| Context Factory | org.apache.activemq.jndi.ActiveMQInitialContextFactory | |
| Provider URL | vm://localhost | |
| Provider URL | vm:(broker:(vm://localhost)?persistent=false) | Disable persistence |
| Queue Reference | dynamicQueues/QUEUENAME | Dynamically define the QUEUENAME to JNDI |
| Topic Reference | dynamicTopics/TOPICNAME | Dynamically define the TOPICNAME to JNDI |

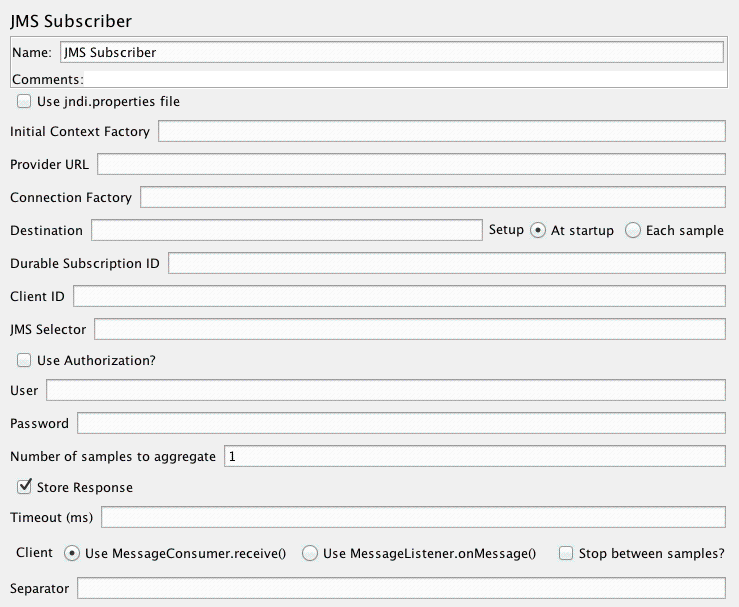

JMS Subscriber¶

JMS Subscriber will subscribe to messages in a given destination (topic or queue). For those not familiar with JMS, it is the J2EE specification for messaging. There are numerous JMS servers on the market and several open source options.

Parameters ¶

- MessageConsumer.receive()

- calls receive() for every requested message. Retains the connection between samples, but does not fetch messages unless the sampler is active. This is best suited to Queue subscriptions.

- MessageListener.onMessage()

- establishes a Listener that stores all incoming messages on a queue. The listener remains active after the sampler completes. This is best suited to Topic subscriptions.

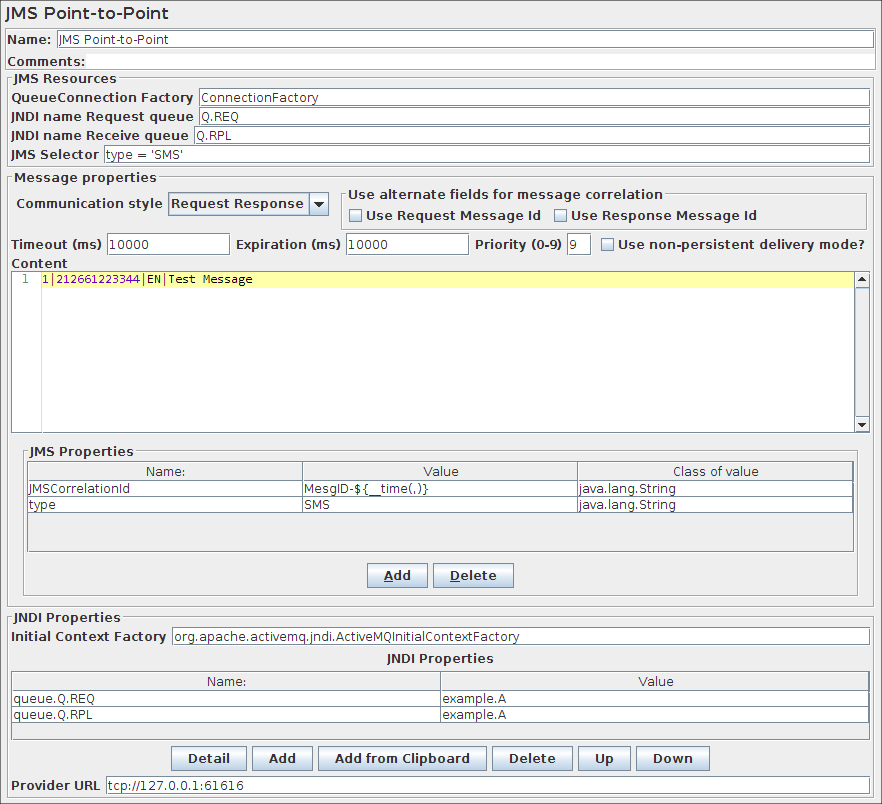

JMS Point-to-Point¶

This sampler sends and optionally receives JMS Messages through point-to-point connections (queues). It is different from pub/sub messages and is generally used for handling transactions.

request_only will typically be used to put load on a JMS System.

request_reply will be used when you want to test response time of a JMS service that processes messages sent to the Request Queue as this mode will wait for the response on the Reply queue sent by this service.

browse returns the current queue depth, i.e. the number of messages on the queue.

read reads a message from the queue (if any).

clear clears the queue, i.e. remove all messages from the queue.

JMeter uses the properties java.naming.security.[principal|credentials] - if present - when creating the Queue Connection. If this behaviour is not desired, set the JMeter property JMSSampler.useSecurity.properties=false.

Parameters ¶

- Request Only

- will only send messages and will not monitor replies. As such it can be used to put load on a system.

- Request Response

- will send messages and monitor the replies it receives. Behaviour depends on the value of the JNDI Name Reply Queue. If JNDI Name Reply Queue has a value, this queue is used to monitor the results. Matching of request and reply is done with the message id of the request and the correlation id of the reply. If the JNDI Name Reply Queue is empty, then temporary queues will be used for the communication between the requestor and the server. This is very different from the fixed reply queue. With temporary queues the sending thread will block until the reply message has been received. With Request Response mode, you need to have a Server that listens to messages sent to Request Queue and sends replies to queue referenced by message.getJMSReplyTo().

- Read

- will read a message from an outgoing queue which has no listeners attached. This can be convenient for testing purposes. This method can be used if you need to handle queues without a binding file (in case the jmeter-jms-skip-jndi library is used), which only works with the JMS Point-to-Point sampler. In case binding files are used, one can also use the JMS Subscriber Sampler for reading from a queue.

- Browse

- will determine the current queue depth without removing messages from the queue, returning the number of messages on the queue.

- Clear

- will clear the queue, i.e. remove all messages from the queue.

- Use Request Message Id

- if selected, the request JMSMessageID will be used, otherwise the request JMSCorrelationID will be used. In the latter case the correlation id must be specified in the request.

- Use Response Message Id

- if selected, the response JMSMessageID will be used, otherwise the response JMSCorrelationID will be used.

- JMS Correlation ID Pattern

- i.e. match request and response on their correlation Ids => deselect both checkboxes, and provide a correlation id.

- JMS Message ID Pattern

- i.e. match request message id with response correlation id => select "Use Request Message Id" only.
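Both matching patterns above can be modeled with a map from the expected ID to the pending request. This is a plain-Java sketch with hypothetical classes (ReplyMatcher and Msg) standing in for JMS messages; it does not use the javax.jms API, and it assumes "Use Response Message Id" is unchecked, so replies are always matched on their correlation ID.

```java
import java.util.HashMap;
import java.util.Map;

/** Hypothetical in-memory model of matching replies to pending requests. */
final class ReplyMatcher {
    static final class Msg {
        final String messageId;
        final String correlationId;
        final String body;

        Msg(String messageId, String correlationId, String body) {
            this.messageId = messageId;
            this.correlationId = correlationId;
            this.body = body;
        }
    }

    private final boolean useRequestMessageId;
    private final Map<String, Msg> pending = new HashMap<>();

    /** true models the JMS Message ID Pattern, false the JMS Correlation ID Pattern. */
    ReplyMatcher(boolean useRequestMessageId) {
        this.useRequestMessageId = useRequestMessageId;
    }

    /** Registers a sent request under the ID its reply is expected to carry. */
    void sent(Msg request) {
        String key = useRequestMessageId ? request.messageId : request.correlationId;
        pending.put(key, request);
    }

    /** Returns (and forgets) the request this reply answers, or null if none is pending. */
    Msg received(Msg reply) {
        return pending.remove(reply.correlationId);
    }
}
```

A reply whose correlation ID equals the request's message ID ("ID:42") is matched; a reply carrying any other correlation ID is not.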

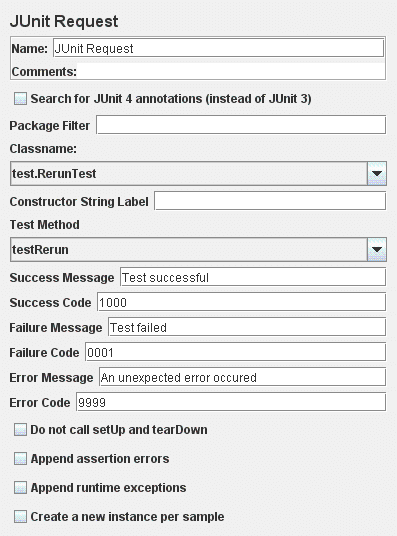

JUnit Request¶

The JUnit Request sampler works like the Java Request sampler, with some differences:

- Rather than use JMeter's test interface, it scans the jar files for classes extending JUnit's TestCase class. That includes any class or subclass.

- JUnit test jar files should be placed in jmeter/lib/junit instead of /lib directory. You can also use the "user.classpath" property to specify where to look for TestCase classes.

- JUnit sampler does not use name/value pairs for configuration like the Java Request. The sampler assumes setUp and tearDown will configure the test correctly.

- The sampler measures the elapsed time only for the test method and does not include setUp and tearDown.

- Each time the test method is called, JMeter will pass the result to the listeners.

- Support for oneTimeSetUp and oneTimeTearDown is done as a method. Since JMeter is multi-threaded, we cannot call oneTimeSetUp/oneTimeTearDown the same way Maven does it.

- The sampler reports unexpected exceptions as errors. There are some important differences between standard JUnit test runners and JMeter's implementation. Rather than make a new instance of the class for each test, JMeter creates 1 instance per sampler and reuses it. This can be changed with checkbox "Create a new instance per sample".

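The test class needs either an empty constructor or a String constructor, for example:

Empty Constructor:

```java
public class myTestCase {
    public myTestCase() {}
}
```

String Constructor:

```java
import junit.framework.TestCase;

public class myTestCase extends TestCase {
    public myTestCase(String text) {
        super(text); // pass the test name on to TestCase
    }
}
```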

General Guidelines

If you use setUp and tearDown, make sure the methods are declared public. If you do not, the test may not run properly.

Here are some general guidelines for writing JUnit tests so they work well with JMeter. Since JMeter runs multi-threaded, it is important to keep certain things in mind.

- Write the setUp and tearDown methods so they are thread safe. This generally means avoid using static members.

- Make the test methods discrete units of work and not long sequences of actions. By keeping the test method to a discrete operation, it makes it easier to combine test methods to create new test plans.

- Avoid making test methods depend on each other. Since JMeter allows arbitrary sequencing of test methods, the runtime behavior is different from the default JUnit behavior.

- If a test method is configurable, be careful about where the properties are stored. Reading the properties from the Jar file is recommended.

- Each sampler creates an instance of the test class, so write your test so that one-time setup and cleanup happen in oneTimeSetUp and oneTimeTearDown.

Parameters ¶

The following JUnit4 annotations are recognised:

- @Test

- used to find test methods and classes. The "expected" and "timeout" attributes are supported.

- @Before

- treated the same as setUp() in JUnit3

- @After

- treated the same as tearDown() in JUnit3

- @BeforeClass, @AfterClass

- treated as test methods so they can be run independently as required

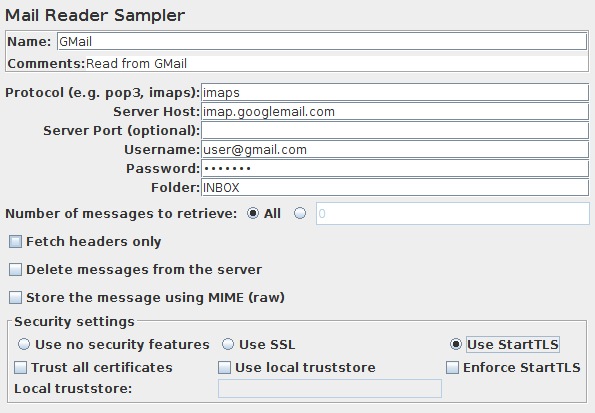

Mail Reader Sampler¶

The Mail Reader Sampler can read (and optionally delete) mail messages using POP3(S) or IMAP(S) protocols.

Parameters ¶

Failing that, against the directory containing the test script (JMX file).

Messages are stored as subsamples of the main sampler. Multipart message parts are stored as subsamples of the message.

Special handling for "file" protocol:

The file JavaMail provider can be used to read raw messages from files.

The server field is used to specify the path to the parent of the folder.

Individual message files should be stored with the name n.msg, where n is the message number.

Alternatively, the server field can be the name of a file which contains a single message.

The current implementation is quite basic, and is mainly intended for debugging purposes.

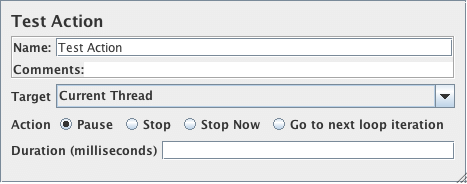

Flow Control Action (was: Test Action ) ¶

This sampler can also be useful in conjunction with the Transaction Controller, as it allows pauses to be included without needing to generate a sample. For variable delays, set the pause time to zero, and add a Timer as a child.

The "Stop" action stops the thread or test after completing any samples that are in progress. The "Stop Now" action stops the test without waiting for samples to complete; it will interrupt any active samples. If some threads fail to stop within the 5 second time-limit, a message will be displayed in GUI mode. You can try using the Stop command to see if this will stop the threads, but if not, you should exit JMeter. In CLI mode, JMeter will exit if some threads fail to stop within the 5 second time limit.

Parameters ¶

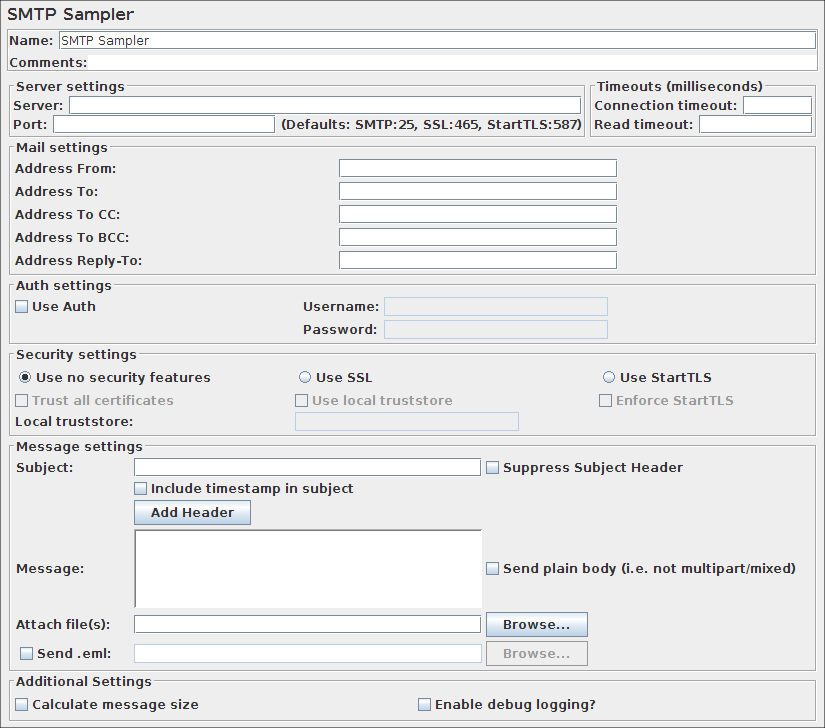

SMTP Sampler¶

The SMTP Sampler can send mail messages using SMTP/SMTPS protocol.

It is possible to set security protocols for the connection (SSL and TLS), as well as user authentication.

If a security protocol is used, the server certificate will be verified.

Two alternatives to handle this verification are available:

- Trust all certificates

- This will ignore certificate chain verification

- Use a local truststore

- With this option the certificate chain will be validated against the local truststore file.

Parameters ¶

Failing that, against the directory containing the test script (JMX file).

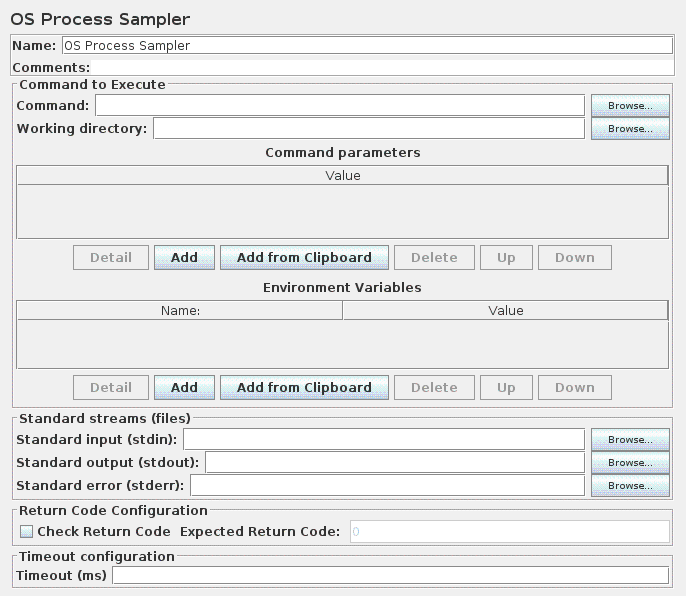

OS Process Sampler¶

The OS Process Sampler is a sampler that can be used to execute commands on the local machine.

It should allow execution of any command that can be run from the command line.

Validation of the return code can be enabled, and the expected return code can be specified.

Note that OS shells generally provide command-line parsing. This varies between OSes, but generally the shell will split parameters on white-space. Some shells expand wild-card file names; some don't. The quoting mechanism also varies between OSes. The sampler deliberately does not do any parsing or quote handling. The command and its parameters must be provided in the form expected by the executable. This means that the sampler settings will not be portable between OSes.

Many OSes have some built-in commands which are not provided as separate executables. For example the Windows DIR command is part of the command interpreter (CMD.EXE). These built-ins cannot be run as independent programs, but have to be provided as arguments to the appropriate command interpreter.

For example, the Windows command-line: DIR C:\TEMP needs to be specified as follows:

- Command:

- CMD

- Param 1:

- /C

- Param 2:

- DIR

- Param 3:

- C:\TEMP
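Java's java.lang.ProcessBuilder follows the same principle: it does not invoke a shell, so each list element reaches the program as its own argument and there is no wild-card expansion or lookup of shell built-ins. The sketch below, with a hypothetical helper class OsCommand (how JMeter itself launches the process is not described here), builds the argument list for the DIR example above.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

/** Hypothetical helper: command plus parameters, passed on as-is without shell parsing. */
final class OsCommand {
    static List<String> argv(String command, String... params) {
        List<String> argv = new ArrayList<>();
        argv.add(command);
        argv.addAll(Arrays.asList(params)); // no splitting, quoting or globbing
        return argv;
    }

    static ProcessBuilder builder(String command, String... params) {
        return new ProcessBuilder(argv(command, params));
    }
}
```

On Windows, OsCommand.builder("CMD", "/C", "DIR", "C:\\TEMP").inheritIO().start() would run the listing, because DIR is handed to CMD.EXE rather than started as a program of its own.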

Parameters ¶

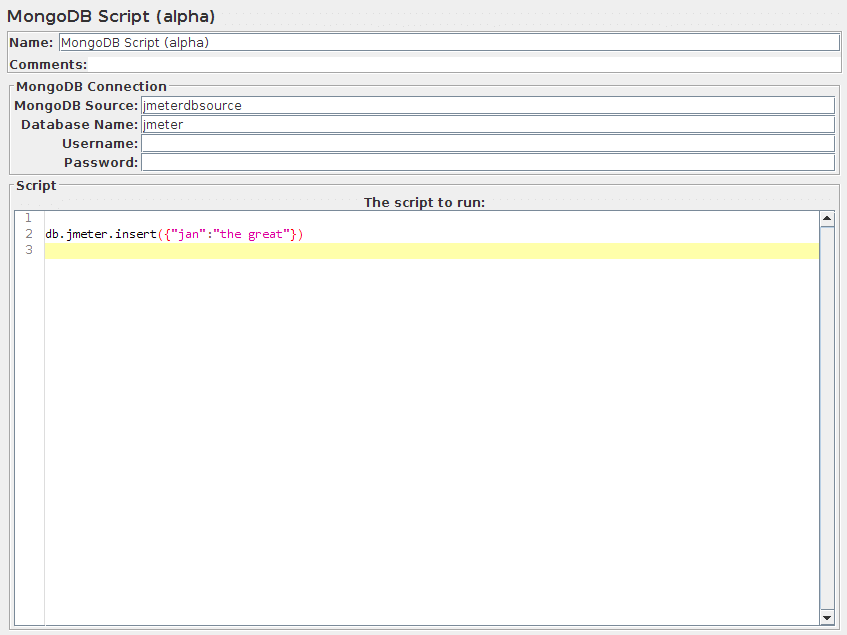

MongoDB Script (DEPRECATED)¶

This sampler lets you send a Request to a MongoDB.

Before using this, you need to set up a MongoDB Source Config Configuration element.

Parameters ¶

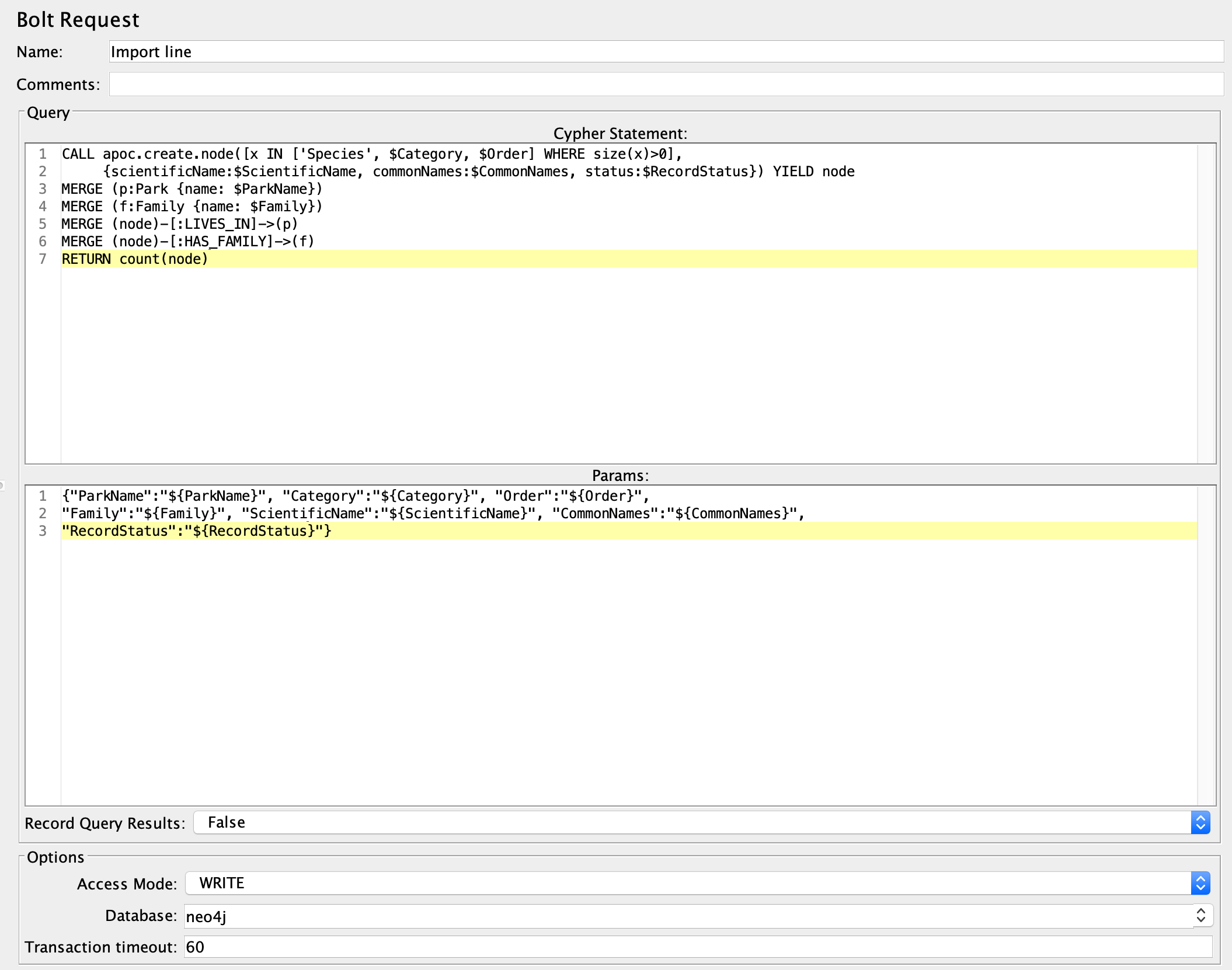

Bolt Request¶

This sampler allows you to run Cypher queries through the Bolt protocol.

Before using this, you need to set up a Bolt Connection Configuration element.

Every request uses a connection acquired from the pool and returns it to the pool when the sampler completes. The connection pool size defaults to 100 and is configurable.

The measured response time corresponds to the "full" query execution, including both the time to execute the cypher query AND the time to consume the results sent back by the database.

Parameters ¶

18.2 Logic Controllers¶

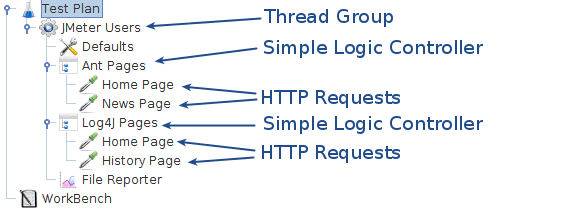

Logic Controllers determine the order in which Samplers are processed.

Simple Controller¶

The Simple Logic Controller lets you organize your Samplers and other Logic Controllers. Unlike other Logic Controllers, this controller provides no functionality beyond that of a storage device.

Parameters ¶

Download this example (see Figure 6). In this example, we created a Test Plan that sends two Ant HTTP requests and two Log4J HTTP requests. We grouped the Ant and Log4J requests by placing them inside Simple Logic Controllers. Remember, the Simple Logic Controller has no effect on how JMeter processes the controller(s) you add to it. So, in this example, JMeter sends the requests in the following order: Ant Home Page, Ant News Page, Log4J Home Page, Log4J History Page.

Note, the File Reporter is configured to store the results in a file named "simple-test.dat" in the current directory.

Loop Controller¶

If you add Generative or Logic Controllers to a Loop Controller, JMeter will loop through them a certain number of times, in addition to the loop value you specified for the Thread Group. For example, if you add one HTTP Request to a Loop Controller with a loop count of two, and configure the Thread Group loop count to three, JMeter will send a total of 2 * 3 = 6 HTTP Requests.

Parameters ¶

The value -1 is equivalent to checking the Forever toggle.

Special Case: The Loop Controller embedded in the Thread Group element behaves slightly differently. Unless set to forever, it stops the test after the given number of iterations have been done.

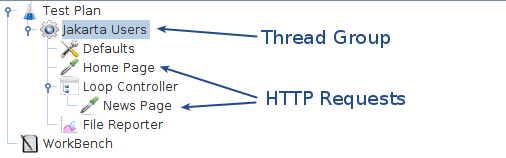

Download this example (see Figure 4). In this example, we created a Test Plan that sends a particular HTTP Request only once and sends another HTTP Request five times.

We configured the Thread Group for a single thread and a loop count value of one. Instead of letting the Thread Group control the looping, we used a Loop Controller. You can see that we added one HTTP Request to the Thread Group and another HTTP Request to a Loop Controller. We configured the Loop Controller with a loop count value of five.

JMeter will send the requests in the following order: Home Page, News Page, News Page, News Page, News Page, and News Page.
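The request order above, and the 2 * 3 = 6 arithmetic from the start of this section, follow from nesting the Loop Controller inside the Thread Group's own loop. This small simulation (hypothetical class LoopExample, modeling one thread and using the request names from the example) makes the nesting explicit.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical simulation of one thread running the Loop Controller example. */
final class LoopExample {
    static List<String> run(int threadGroupLoops, int loopControllerCount) {
        List<String> sent = new ArrayList<>();
        for (int tg = 0; tg < threadGroupLoops; tg++) {
            sent.add("Home Page");                  // direct child of the Thread Group
            for (int i = 0; i < loopControllerCount; i++) {
                sent.add("News Page");              // child of the Loop Controller
            }
        }
        return sent;
    }
}
```

run(1, 5) yields Home Page followed by five News Page requests; with a Thread Group loop count of 3 and a Loop Controller count of 2, News Page is sent 6 times.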

Once Only Controller¶

The Once Only Logic Controller tells JMeter to process the controller(s) inside it only once per Thread, and pass over any requests under it during further iterations through the test plan.

The Once Only Controller always executes during the first iteration of any looping parent controller. Thus, if the Once Only Controller is placed under a Loop Controller specified to loop 5 times, then the Once Only Controller will execute only on the first iteration through the Loop Controller (i.e. every 5 times).

Note this means the Once Only Controller will still behave as previously expected if put under a Thread Group (runs only once per test per Thread), but now the user has more flexibility in the use of the Once Only Controller.

For testing that requires a login, consider placing the login request in this controller, since each thread only needs to log in once to establish a session.

Parameters ¶

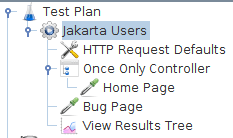

Download this example (see Figure 5). In this example, we created a Test Plan that has two threads that send HTTP requests. Each thread sends one request to the Home Page, followed by three requests to the Bug Page. Although we configured the Thread Group to iterate three times, each JMeter thread only sends one request to the Home Page because this request lives inside a Once Only Controller.

Each JMeter thread will send the requests in the following order: Home Page, Bug Page, Bug Page, Bug Page.
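Because the Once Only Controller's parent here is the Thread Group, it runs only on the first Thread Group iteration. This per-thread simulation (hypothetical class OnceOnlyExample, using the request names from Figure 5) reproduces the order above.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical simulation of one thread running the Once Only Controller example. */
final class OnceOnlyExample {
    static List<String> run(int threadGroupIterations) {
        List<String> sent = new ArrayList<>();
        for (int i = 0; i < threadGroupIterations; i++) {
            if (i == 0) {
                sent.add("Home Page"); // inside the Once Only Controller: first iteration only
            }
            sent.add("Bug Page");      // runs on every iteration
        }
        return sent;
    }
}
```

run(3) returns Home Page, Bug Page, Bug Page, Bug Page, and each of the two threads keeps its own first-iteration state.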

Note, the File Reporter is configured to store the results in a file named "loop-test.dat" in the current directory.

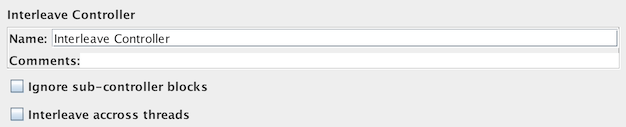

Interleave Controller¶

If you add Generative or Logic Controllers to an Interleave Controller, JMeter will alternate among each of the other controllers for each loop iteration.

Parameters ¶

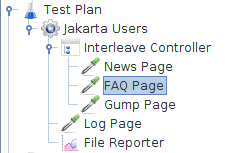

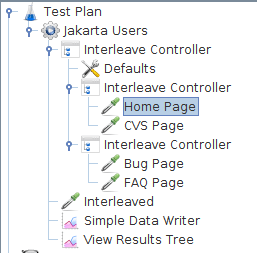

Download this example (see Figure 1). In this example, we configured the Thread Group to have two threads and a loop count of five, for a total of ten requests per thread. See the table below for the sequence JMeter sends the HTTP Requests.

| Loop Iteration | Each JMeter Thread Sends These HTTP Requests |
|---|---|
| 1 | News Page |
| 1 | Log Page |
| 2 | FAQ Page |
| 2 | Log Page |
| 3 | Gump Page |
| 3 | Log Page |
| 4 | Because there are no more requests in the controller, JMeter starts over and sends the first HTTP Request, which is the News Page. |
| 4 | Log Page |
| 5 | FAQ Page |
| 5 | Log Page |
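The sequence in this table can be reproduced with a small simulation. The sketch (hypothetical class InterleaveExample, one thread) assumes, as the table implies, an Interleave Controller holding News, FAQ and Gump Page, followed by a Log Page request that runs on every iteration; the controller's position persists across iterations and wraps around.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical simulation of one thread running the first Interleave Controller example. */
final class InterleaveExample {
    static List<String> run(int loops) {
        List<String> interleaved = List.of("News Page", "FAQ Page", "Gump Page");
        List<String> sent = new ArrayList<>();
        int next = 0; // the controller's position, kept between iterations
        for (int i = 0; i < loops; i++) {
            sent.add(interleaved.get(next));        // one child per iteration
            next = (next + 1) % interleaved.size(); // wrap around after the last child
            sent.add("Log Page");                   // runs on every iteration
        }
        return sent;
    }
}
```

run(5) returns the ten requests listed in the table, in the same order.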

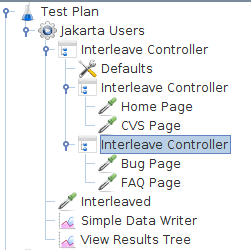

Download another example (see Figure 2). In this example, we configured the Thread Group to have a single thread and a loop count of eight. Notice that the Test Plan has an outer Interleave Controller with two Interleave Controllers inside of it.

The outer Interleave Controller alternates between the two inner ones. Then, each inner Interleave Controller alternates between each of the HTTP Requests. Each JMeter thread will send the requests in the following order: Home Page, Interleaved, Bug Page, Interleaved, CVS Page, Interleaved, and FAQ Page, Interleaved.

Note, the File Reporter is configured to store the results in a file named "interleave-test2.dat" in the current directory.

If the two interleave controllers under the main interleave controller were instead simple controllers, then the order would be: Home Page, CVS Page, Interleaved, Bug Page, FAQ Page, Interleaved.

However, if "ignore sub-controller blocks" was checked on the main interleave controller, then the order would be: Home Page, Interleaved, Bug Page, Interleaved, CVS Page, Interleaved, and FAQ Page, Interleaved.

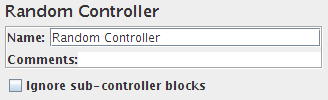

Random Controller¶

The Random Logic Controller acts similarly to the Interleave Controller, except that instead of going in order through its sub-controllers and samplers, it picks one at random at each pass.

Parameters ¶

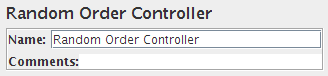

Random Order Controller¶

The Random Order Controller is much like a Simple Controller in that it will execute each child element at most once, but the order of execution of the nodes will be random.
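The difference between the Random Controller and the Random Order Controller can be sketched in plain Java: the first picks one child per pass, the second runs every child once per pass in shuffled order. The class RandomControllers is hypothetical and is not how JMeter implements either controller.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Random;

/** Hypothetical sketch of the two random controllers' selection rules. */
final class RandomControllers {
    /** Random Controller: one child, picked at random, on each pass. */
    static <T> T pickOne(List<T> children, Random rnd) {
        return children.get(rnd.nextInt(children.size()));
    }

    /** Random Order Controller: every child exactly once per pass, in random order. */
    static <T> List<T> executionOrder(List<T> children, Random rnd) {
        List<T> order = new ArrayList<>(children);
        Collections.shuffle(order, rnd);
        return order;
    }
}
```

Whatever the seed, executionOrder returns a permutation of the children, while pickOne returns a single child.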

Parameters ¶

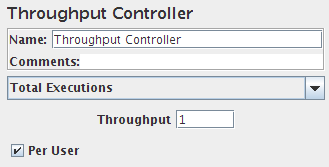

Throughput Controller¶

The Throughput Controller allows the user to control how often it is executed. There are two modes:

- percent execution

- total executions

- Percent executions

- causes the controller to execute a certain percentage of the iterations through the test plan.

- Total executions

- causes the controller to stop executing after a certain number of executions have occurred.
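The two modes can be illustrated with a deterministic sketch. In percent mode it runs its children only while the number of executions stays at or below the given percentage of the passes so far; in total mode it runs until the maximum is reached. The class ThroughputSketch and this spreading rule are assumptions for illustration, not JMeter's exact algorithm.

```java
/** Hypothetical sketch of percent and total execution modes. */
final class ThroughputSketch {
    private final boolean percentMode;
    private final double limit; // a percentage (0-100) or a maximum number of executions
    private int passes;
    private int executions;

    ThroughputSketch(boolean percentMode, double limit) {
        this.percentMode = percentMode;
        this.limit = limit;
    }

    /** Called on each pass through the controller; returns true if its children should run. */
    boolean shouldExecute() {
        passes++;
        boolean run = percentMode
                // run only if executions stay at or below limit% of the passes so far
                ? (executions + 1) * 100.0 <= passes * limit
                // stop for good once the total has been reached
                : executions < limit;
        if (run) {
            executions++;
        }
        return run;
    }
}
```

At 25 percent over 100 passes this runs the children 25 times (on every fourth pass); in total mode with a limit of 3 it runs them on the first three passes only.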

Parameters ¶

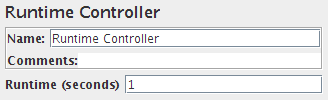

Runtime Controller¶

The Runtime Controller controls how long its children will run. The controller runs its children until the configured Runtime (in seconds) is exceeded.

Parameters ¶

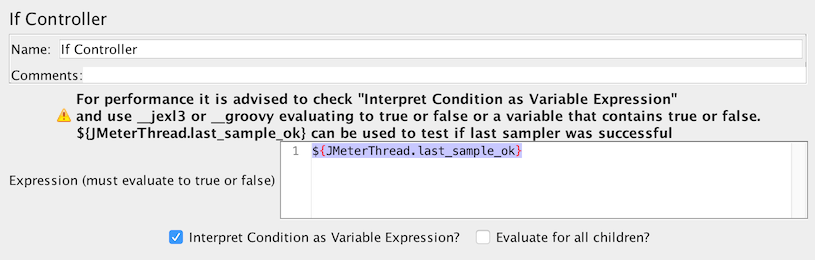

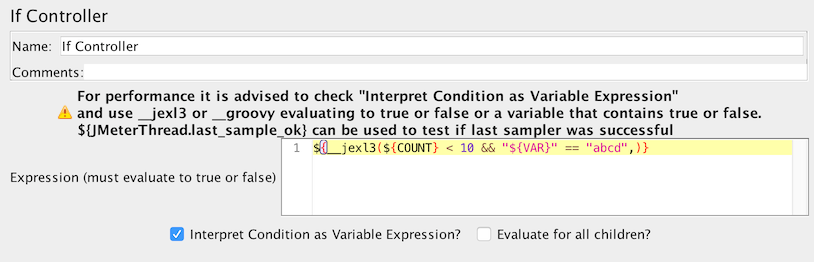

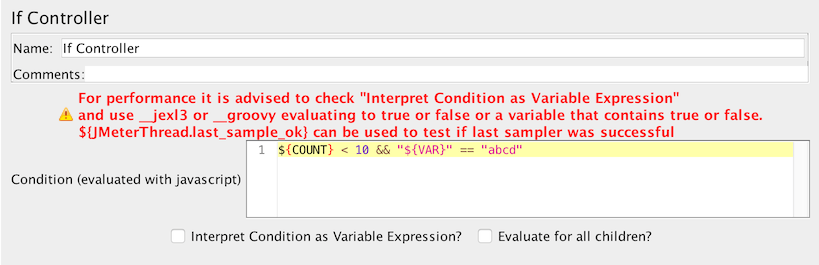

If Controller¶

The If Controller allows the user to control whether the test elements below it (its children) are run or not.

By default, the condition is evaluated only once on initial entry, but you have the option to have it evaluated for every runnable element contained in the controller.

The best option (the default) is to check Interpret Condition as Variable Expression?; then, in the condition field, you have 2 options:

- Option 1: Use a variable that contains true or false

- Option 2: Use a function (${__jexl3()} is advised) to evaluate an expression that must return true or false

If Controller using expression

To test whether a variable named myVar is undefined (or null), use the expression:

"${myVar}" == "\${myVar}"

Or use:

"${myVar}" != "\${myVar}"

to test if a variable is defined and is not null.
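The first expression works because JMeter leaves a reference to an undefined variable unchanged, and the backslash stops `\${myVar}` from being substituted at all. Both sides therefore produce the same text only when myVar is undefined. The sketch below models just that substitution step. The regular expression and the function names are hypothetical simplifications, and function calls such as `__jexl3` are not handled.

```python
import re

# Hypothetical, simplified model of JMeter variable substitution:
#   ${name} -> the variable's value, or left unchanged if it is undefined
#   \$      -> a literal "$" that is never substituted
_REF = re.compile(r"\\\$|\$\{([\w.]+)\}")


def substitute(text, variables):
    def repl(match):
        if match.group(0) == "\\$":
            return "$"  # escaped dollar: emit it literally
        # Undefined variable: keep the original "${name}" text.
        return variables.get(match.group(1), match.group(0))
    return _REF.sub(repl, text)


def is_undefined(name, variables):
    """Mirror the comparison "${myVar}" == "\\${myVar}"."""
    left = substitute("${%s}" % name, variables)
    right = substitute("\\${%s}" % name, variables)
    return left == right


print(is_undefined("myVar", {}))                 # True
print(is_undefined("myVar", {"myVar": "abcd"}))  # False
```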

Parameters ¶

Examples:

- ${COUNT} < 10

- "${VAR}" == "abcd"

When using __groovy, take care not to use variable references (${...}) inside the script string. If the referenced variable changes, the script text changes too and cannot be cached. Instead, get the variable using vars.get("myVar"), as in the Groovy examples below.

- ${__groovy(vars.get("myVar") != "Invalid" )} (Groovy check myVar is not equal to Invalid)

- ${__groovy(vars.get("myInt").toInteger() <=4 )} (Groovy check myInt is less than or equal to 4)

- ${__groovy(vars.get("myMissing") != null )} (Groovy check that the myMissing variable is set)

- ${__jexl3(${COUNT} < 10)}

- ${RESULT}

- ${JMeterThread.last_sample_ok} (check if the last sample succeeded)

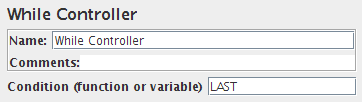

While Controller¶

The While Controller runs its children until the condition is "false".

Possible condition values:

- blank - exit loop when last sample in loop fails

- LAST - exit loop when last sample in loop fails. If the last sample just before the loop failed, don't enter loop.

- Otherwise - exit (or don't enter) the loop when the condition is equal to the string "false"

For example:

- ${VAR} - where VAR is set to false by some other test element

- ${__jexl3(${C}==10)}

- ${__jexl3("${VAR2}"=="abcd")}

- ${__P(property)} - where property is set to "false" somewhere else
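The condition rules above can be summarised as a single decision function. This is a hypothetical sketch that follows the text literally. `condition` stands for the condition text after JMeter has replaced variables and functions, and it is compared with the exact string "false".

```python
def while_should_run(condition, last_sample_ok, first_entry):
    """Hypothetical model: should the While Controller (re)enter its loop?

    condition:      condition text after variables/functions are replaced
    last_sample_ok: did the most recent sample succeed? On first entry this
                    is the sample just before the loop.
    first_entry:    True when deciding whether to enter the loop at all
    """
    if condition == "":
        # Blank: always enter; afterwards, exit when the last sample failed.
        return True if first_entry else last_sample_ok
    if condition == "LAST":
        # LAST: as blank, but a failure just before the loop also
        # prevents the loop from being entered.
        return last_sample_ok
    # Any other value: exit (or don't enter) when it is the string "false".
    return condition != "false"


print(while_should_run("", last_sample_ok=False, first_entry=True))       # True
print(while_should_run("LAST", last_sample_ok=False, first_entry=True))   # False
print(while_should_run("false", last_sample_ok=True, first_entry=False))  # False
```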

Parameters ¶

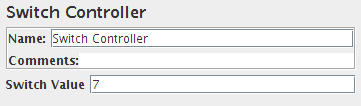

Switch Controller¶

The Switch Controller acts like the Interleave Controller in that it runs one of the subordinate elements on each iteration, but rather than run them in sequence, the controller runs the element defined by the switch value.

If the switch value is out of range, it will run the zeroth element, which therefore acts as the default for the numeric case. It also runs the zeroth element if the value is the empty string.

If the value is non-numeric (and non-empty), then the Switch Controller looks for the element with the same name (case is significant). If none of the names match, then the element named "default" (case not significant) is selected. If there is no default, then no element is selected, and the controller will not run anything.
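The selection rules can be written out as a short function. This is a hypothetical sketch that follows the text above. Treating any string that parses as an integer (including a negative one) as numeric is an assumption.

```python
def switch_select(switch_value, child_names):
    """Hypothetical model: index of the child the Switch Controller runs, or None."""
    if not child_names:
        return None
    if switch_value == "":
        return 0                              # empty string: zeroth element
    try:
        index = int(switch_value)
    except ValueError:
        index = None
    if index is not None:
        # Numeric: out-of-range values fall back to the zeroth element.
        return index if 0 <= index < len(child_names) else 0
    for i, name in enumerate(child_names):    # exact, case-sensitive name match
        if name == switch_value:
            return i
    for i, name in enumerate(child_names):    # otherwise "default", any case
        if name.lower() == "default":
            return i
    return None                               # no match and no default


children = ["Zero", "Login", "default", "Search"]
print(switch_select("1", children))       # 1
print(switch_select("9", children))       # 0
print(switch_select("search", children))  # 2 (no exact match, so "default")
```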

Parameters ¶

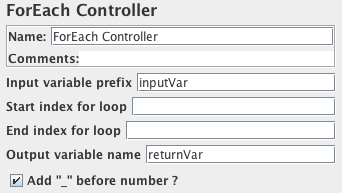

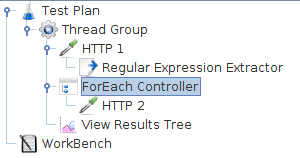

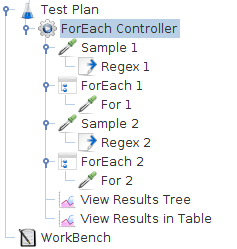

ForEach Controller¶

A ForEach controller loops through the values of a set of related variables. When you add samplers (or controllers) to a ForEach controller, every sample (or controller) is executed one or more times, where during every loop the variable has a new value. The input should consist of several variables, each extended with an underscore and a number. Each such variable must have a value. So for example when the input variable has the name inputVar, the following variables should have been defined:

- inputVar_1 = wendy

- inputVar_2 = charles

- inputVar_3 = peter

- inputVar_4 = john

Note: the "_" separator is now optional.

When the return variable is given as "returnVar", the collection of samplers and controllers under the ForEach controller will be executed 4 consecutive times, with the return variable taking each of the above values in turn, so that they can be used in the samplers.

It is especially suited for running with the regular expression post-processor. This can "create" the necessary input variables out of the result data of a previous request. By omitting the "_" separator, the ForEach Controller can be used to loop through the groups by using the input variable refName_g, and can also loop through all the groups in all the matches by using an input variable of the form refName_${C}_g, where C is a counter variable.
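The sketch below models how the input variables are walked. It assumes iteration stops at the first variable name that is not defined, which is why every variable in the sequence needs a value. The function `foreach_values` is hypothetical.

```python
def foreach_values(variables, input_var, separator="_"):
    """Hypothetical model: yield <input_var><separator>1, ...2, ... in order.

    Iteration stops at the first name that is not defined.
    """
    n = 1
    while f"{input_var}{separator}{n}" in variables:
        yield variables[f"{input_var}{separator}{n}"]
        n += 1


variables = {"inputVar_1": "wendy", "inputVar_2": "charles",
             "inputVar_3": "peter", "inputVar_4": "john"}
for value in foreach_values(variables, "inputVar"):
    variables["returnVar"] = value  # samplers in the loop would read ${returnVar}
    print("loop body sees returnVar =", variables["returnVar"])

# With no separator, group variables such as refName_g1, refName_g2 can be walked.
print(list(foreach_values({"refName_g1": "x", "refName_g2": "y"}, "refName_g", separator="")))
```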

Parameters ¶

Download this example (see Figure 7). In this example, we created a Test Plan that sends a particular HTTP Request only once and sends another HTTP Request to every link that can be found on the page.

We configured the Thread Group for a single thread and a loop count value of one. You can see that we added one HTTP Request to the Thread Group and another HTTP Request to the ForEach Controller.

After the first HTTP request, a Regular Expression Extractor is added, which extracts all the HTML links from the returned page and puts them in the inputVar variables.

In the ForEach loop, an HTTP sampler is added which requests all the links that were extracted from the first returned HTML page.

Here is another example you can download. It has two Regular Expression Extractors and two ForEach Controllers. The first regular expression matches, but the second does not, so the second ForEach Controller runs no samples.

The Thread Group has a single thread and a loop count of two.

Sample 1 uses the JavaTest Sampler to return the string "a b c d".

The Regex Extractor uses the expression (\w)\s which matches a letter followed by a space, and returns the letter (not the space). Any matches are prefixed with the string "inputVar".

The ForEach Controller extracts all variables with the prefix "inputVar_", and executes its sample, passing the value in the variable "returnVar". In this case it will set the variable to the values "a", "b" and "c" in turn.

The For 1 Sampler is another Java Sampler which uses the return variable "returnVar" as part of the sample Label and as the sampler Data.

Sample 2, Regex 2 and For 2 are almost identical, except that the Regex has been changed to "(\w)\sx", which clearly won't match. Thus the For 2 Sampler will not be run.
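The matching in this example can be checked with Python's `re` module. The sketch assumes the extractor is set to keep every match (group 1) as inputVar_1, inputVar_2 and so on. Both helper functions are hypothetical stand-ins for the Regular Expression Extractor and the ForEach Controller.

```python
import re


def extract_matches(pattern, text, ref_name):
    """Hypothetical extractor: store group 1 of every match as ref_name_1, ref_name_2, ..."""
    return {f"{ref_name}_{n}": m.group(1)
            for n, m in enumerate(re.finditer(pattern, text), start=1)}


def foreach(variables, input_var):
    """Hypothetical ForEach: walk input_var_1, input_var_2, ... until one is missing."""
    n = 1
    while f"{input_var}_{n}" in variables:
        yield variables[f"{input_var}_{n}"]
        n += 1


sample_1 = "a b c d"  # what Sample 1 returns
regex_1 = extract_matches(r"(\w)\s", sample_1, "inputVar")
print(list(foreach(regex_1, "inputVar")))  # ['a', 'b', 'c'] ("d" has no trailing space)

regex_2 = extract_matches(r"(\w)\sx", sample_1, "inputVar")
print(list(foreach(regex_2, "inputVar")))  # [] so the For 2 sampler never runs
```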

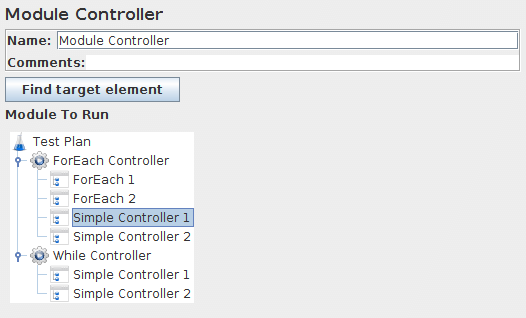

Module Controller¶

The Module Controller provides a mechanism for substituting test plan fragments into the current test plan at run-time.

A test plan fragment consists of a Controller and all the test elements (samplers etc.) contained in it. The fragment can be located in any Thread Group. If the fragment is located in a Thread Group, then its Controller can be disabled to prevent the fragment being run except by the Module Controller. Or you can store the fragments in a dummy Thread Group, and disable the entire Thread Group.

There can be multiple fragments, each with a different series of samplers under them. The Module Controller can then be used to switch easily between these test cases simply by choosing the appropriate controller in its drop-down box. This makes it convenient to run many alternative test plans quickly.

A fragment name is made up of the Controller name and all its parent names. For example:

Test Plan / Protocol: JDBC / Control / Interleave Controller (Module1)

Any fragments used by the Module Controller must have a unique name, as the name is used to find the target controller when a test plan is reloaded. For this reason it is best to ensure that the Controller name is changed from the default - as shown in the example above - otherwise a duplicate may be accidentally created when new elements are added to the test plan.
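The sketch below shows why unchanged default names cause trouble. It builds full names for a small hypothetical test plan tree and reports any name that occurs more than once. The tuple-based tree and both functions are illustrative assumptions. The " / " separator simply mirrors the example name above.

```python
from collections import Counter


def fragment_names(node, parents=()):
    """Yield the full name of every element in a (name, children) tree."""
    name, children = node
    path = parents + (name,)
    yield " / ".join(path)
    for child in children:
        yield from fragment_names(child, path)


def duplicate_names(tree):
    """Full names that occur more than once, i.e. ambiguous Module Controller targets."""
    counts = Counter(fragment_names(tree))
    return sorted(name for name, n in counts.items() if n > 1)


plan = ("Test Plan", [
    ("Protocol: JDBC", [
        ("Control", [
            ("Interleave Controller (Module1)", []),
            ("Simple Controller", []),  # default name left unchanged...
            ("Simple Controller", []),  # ...and again: now ambiguous
        ]),
    ]),
])
print(duplicate_names(plan))
```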

Parameters ¶

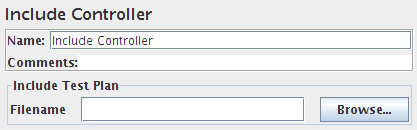

Include Controller¶

The include controller is designed to use an external JMX file. To use it, create a Test Fragment underneath the Test Plan and add any desired samplers, controllers etc. below it. Then save the Test Plan. The file is now ready to be included as part of other Test Plans.

For convenience, a Thread Group can also be added in the external JMX file for debugging purposes. A Module Controller can be used to reference the Test Fragment. The Thread Group will be ignored during the include process.

If the test uses a Cookie Manager or User Defined Variables, these should be placed in the top-level test plan, not the included file, otherwise they are not guaranteed to work.

If the property includecontroller.prefix is defined, its contents are used to prefix the pathname.

If the file cannot be found at the location given by prefix+Filename, then the controller attempts to open the Filename relative to the JMX launch directory.
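The lookup order described above can be sketched as follows. The function is hypothetical. `prefix` stands for the value of includecontroller.prefix, and `base_dir` stands for the JMX launch directory. The prefix is joined to the filename by plain string concatenation, as "prefix+Filename" suggests, so a directory prefix needs its trailing separator.

```python
import os
import tempfile


def resolve_include(filename, prefix="", base_dir="."):
    """Hypothetical model of the Include Controller's file lookup.

    1. Try prefix + filename (string concatenation).
    2. Otherwise try filename relative to base_dir.
    Returns the first path that names an existing file, or None.
    """
    candidate = prefix + filename
    if os.path.isfile(candidate):
        return candidate
    fallback = os.path.join(base_dir, filename)
    if os.path.isfile(fallback):
        return fallback
    return None


with tempfile.TemporaryDirectory() as base:
    open(os.path.join(base, "fragment.jmx"), "w").close()  # empty stand-in file
    # The prefix points nowhere useful, so the launch-directory fallback is used.
    print(resolve_include("fragment.jmx", prefix="/no/such/dir/", base_dir=base))
```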

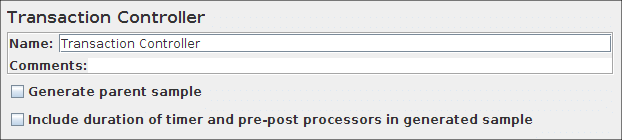

Transaction Controller¶

The Transaction Controller generates an additional sample which measures the overall time taken to perform the nested test elements.

There are two modes of operation:

- additional sample is added after the nested samples

- additional sample is added as a parent of the nested samples

The generated sample time includes the times of all the nested samplers. By default (since 2.11), it excludes timers and the processing time of pre- and post-processors, unless the checkbox "Include duration of timer and pre-post processors in generated sample" is checked. Depending on the clock resolution, the generated time may be slightly longer than the sum of the individual samplers plus timers. This happens because the clock might tick after the controller records the start time but before the first sample starts. The same can happen at the end.

The generated sample is only regarded as successful if all its sub-samples are successful.

In parent mode, the individual samples can still be seen in the Tree View Listener, but no longer appear as separate entries in other Listeners. Also, the sub-samples do not appear in CSV log files, but they can be saved to XML files.
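The rules for the generated sample can be sketched under simplifying assumptions. The `Sample` class and the `transaction_sample` function are hypothetical. The time is computed as a sum of nested times rather than measured on a real clock, so the clock-resolution effect described above does not appear. Timer plus pre/post-processor time is passed in as a single number.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Sample:
    label: str
    elapsed_ms: int
    success: bool
    sub_samples: List["Sample"] = field(default_factory=list)


def transaction_sample(label, nested, timer_and_processor_ms=0, include_timers=False):
    """Hypothetical model of the extra sample a Transaction Controller generates."""
    # Time: the nested samplers' times; timers and pre/post-processors are
    # added only when the "Include duration of timer..." option is checked.
    elapsed = sum(s.elapsed_ms for s in nested)
    if include_timers:
        elapsed += timer_and_processor_ms
    # Success: only if every nested sample succeeded.
    success = all(s.success for s in nested)
    # In parent mode the nested samples hang off the generated sample.
    return Sample(label, elapsed, success, sub_samples=list(nested))


nested = [Sample("Login", 120, True), Sample("Search", 340, False)]
tx = transaction_sample("Checkout", nested, timer_and_processor_ms=500)
print(tx.elapsed_ms, tx.success)  # 460 False
```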

Parameters ¶

Recording Controller¶

The Recording Controller is a placeholder indicating where the proxy server should record samples to. During a test run it has no effect, similar to the Simple Controller. But during recording using the HTTP(S) Test Script Recorder, all recorded samples will by default be saved under the Recording Controller.

Parameters ¶

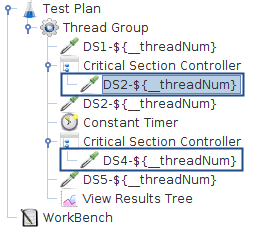

Critical Section Controller¶

The Critical Section Controller ensures that its child elements (samplers, controllers, etc.) are executed by only one thread at a time, because a named lock is taken before the controller's children are executed.

The figure below shows an example of using the Critical Section Controller. In it, two Critical Section Controllers ensure that:

- DS2-${__threadNum} is executed only by one thread at a time

- DS4-${__threadNum} is executed only by one thread at a time

Parameters ¶

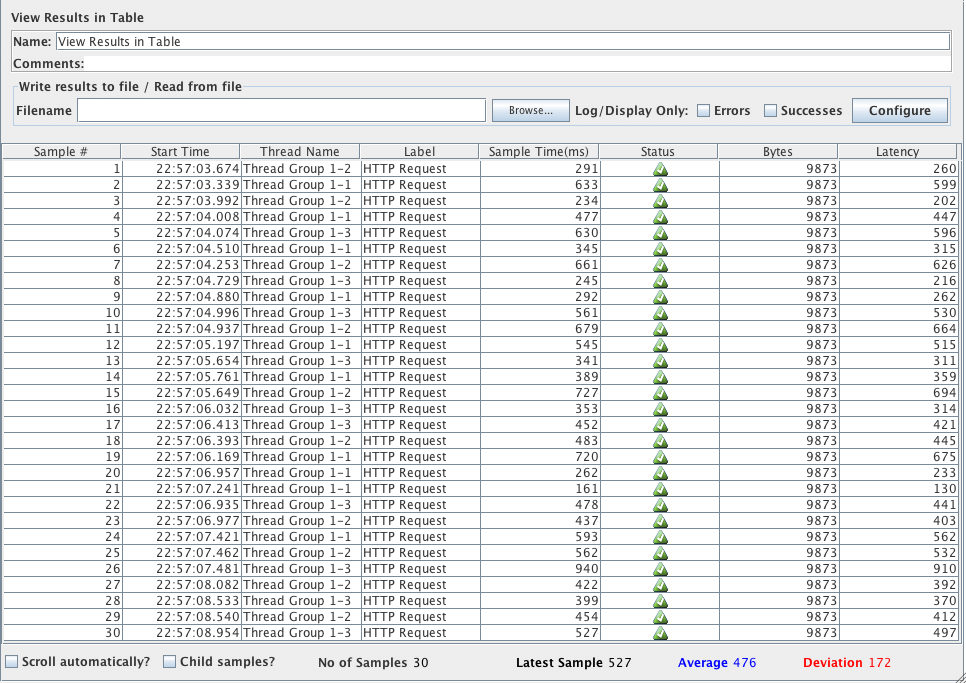

18.3 Listeners¶

Most of the listeners perform several roles in addition to "listening" to the test results. They also provide means to view, save, and read saved test results.

Note that Listeners are processed at the end of the scope in which they are found.

The saving and reading of test results is generic. The various listeners have a panel whereby one can specify the file to which the results will be written (or read from). By default, the results are stored as XML files, typically with a ".jtl" extension. Storing as CSV is the most efficient option, but is less detailed than XML (the other available option).

Listeners do not process sample data in CLI mode, but the raw data will be saved if an output file has been configured. In order to analyse the data generated by a CLI run, you need to load the file into the appropriate Listener.

If you want to clear any current data before loading a new file, use the menu item Run → Clear or Run → Clear All before loading the file.

Results can be read from XML or CSV format files. When reading from CSV results files, the header (if present) is used to determine which fields are present. In order to interpret a header-less CSV file correctly, the appropriate properties must be set in jmeter.properties.
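The sketch below illustrates header-driven reading. It aggregates a CSV results file with `csv.DictReader`, which takes the field names from the header row. The column names used (`timeStamp`, `elapsed`, `label`, `responseCode`, `success`) follow JMeter's usual CSV header. The summary function itself is hypothetical and is not how any particular Listener is implemented.

```python
import csv
import io
from collections import defaultdict


def summarize_jtl(csv_text):
    """Hypothetical summary: count, average elapsed time and errors per label."""
    totals = defaultdict(lambda: [0, 0, 0])  # label -> [count, elapsed sum, errors]
    for row in csv.DictReader(io.StringIO(csv_text)):  # the header names the fields
        stats = totals[row["label"]]
        stats[0] += 1
        stats[1] += int(row["elapsed"])
        if row["success"] != "true":
            stats[2] += 1
    return {label: {"count": n, "avg_ms": total / n, "errors": err}
            for label, (n, total, err) in totals.items()}


jtl = """timeStamp,elapsed,label,responseCode,success
1700000000000,120,Home Page,200,true
1700000000500,80,Home Page,200,true
1700000001000,300,Bug Page,500,false
"""
print(summarize_jtl(jtl))
```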

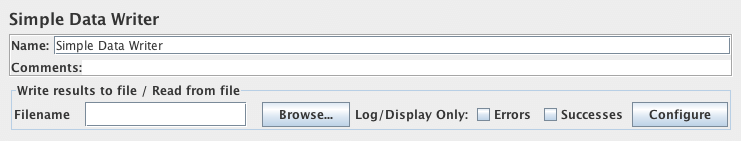

Listeners can use a lot of memory if there are a lot of samples. Most of the listeners currently keep a copy of every sample in their scope, apart from:

- Simple Data Writer

- BeanShell/JSR223 Listener

- Mailer Visualizer

- Summary Report

The following Listeners no longer need to keep copies of every single sample. Instead, samples with the same elapsed time are aggregated. Less memory is now needed, especially if most samples only take a second or two at most.

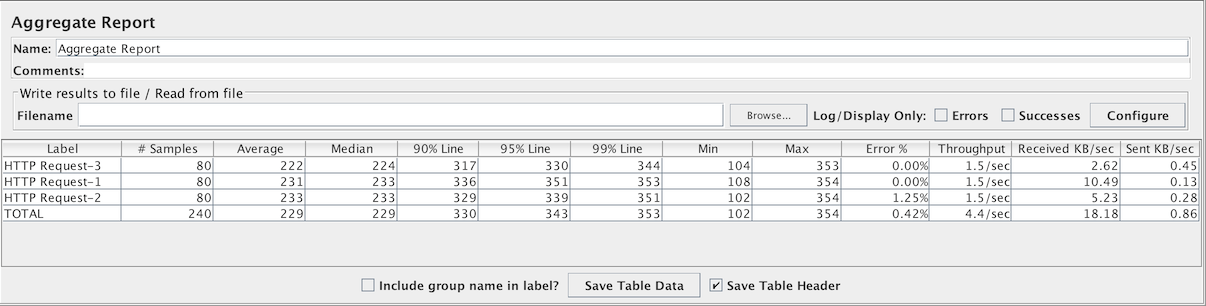

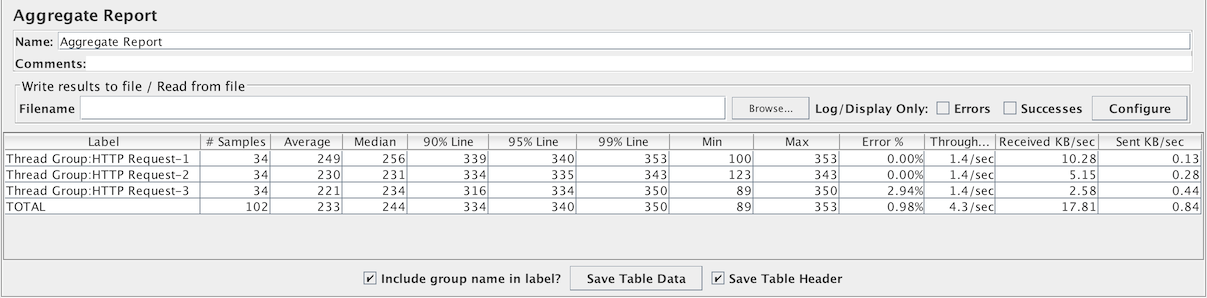

- Aggregate Report

- Aggregate Graph
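The memory saving comes from storing one counter per distinct elapsed time rather than one entry per sample. The sketch below shows that idea. The class is hypothetical. The nearest-rank percentile it uses is an assumption, not necessarily the method the Aggregate Report applies.

```python
import math
from collections import Counter


class ElapsedHistogram:
    """Hypothetical aggregate: one counter per distinct elapsed time."""

    def __init__(self):
        self.counts = Counter()  # elapsed_ms -> number of samples
        self.total = 0

    def add(self, elapsed_ms):
        self.counts[elapsed_ms] += 1
        self.total += 1

    def percentile(self, pct):
        """Nearest-rank percentile computed from the counts alone."""
        if self.total == 0:
            raise ValueError("no samples recorded")
        rank = max(1, math.ceil(pct * self.total / 100))
        seen = 0
        for elapsed in sorted(self.counts):
            seen += self.counts[elapsed]
            if seen >= rank:
                return elapsed


h = ElapsedHistogram()
for t in [100, 100, 100, 200, 200, 900]:
    h.add(t)
print(len(h.counts), h.total)              # 3 6: three entries kept for six samples
print(h.percentile(50), h.percentile(90))  # 100 900
```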

To minimise the amount of memory needed, use the Simple Data Writer, and use the CSV format.

For full details on setting up the default items to be saved see the Listener Default Configuration documentation. For details of the contents of the output files, see the CSV log format or the XML log format.

The figure below shows an example of the result file configuration panel.

Parameters

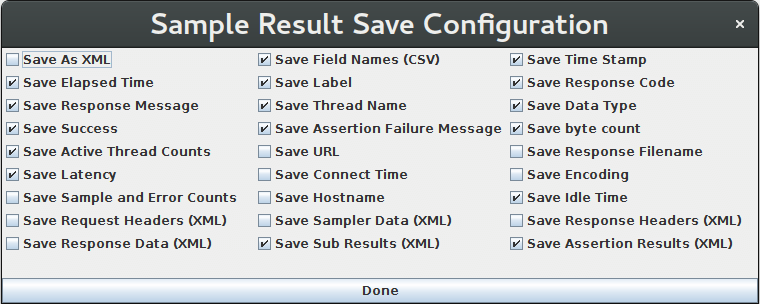

Sample Result Save Configuration¶

Listeners can be configured to save different items to the result log files (JTL) by using the Config popup as shown below. The defaults are defined as described in the Listener Default Configuration documentation. Items with (CSV) after the name only apply to the CSV format; items with (XML) only apply to XML format. CSV format cannot currently be used to save any items that include line-breaks.

Note that cookies, method and the query string are saved as part of the "Sampler Data" option.

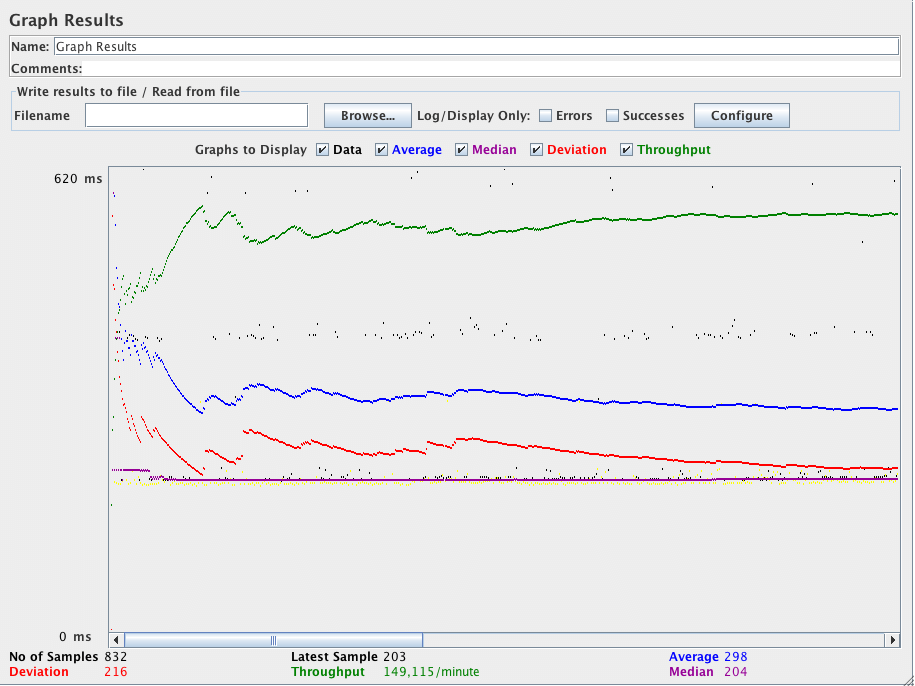

Graph Results¶

The Graph Results listener generates a simple graph that plots all sample times. Along the bottom of the graph, the current sample (black), the current average of all samples (blue), the current standard deviation (red), and the current throughput rate (green) are displayed. Times are shown in milliseconds.

The throughput number represents the actual number of requests per minute the server handled. This calculation includes any delays you added to your test and JMeter's own internal processing time. The advantage of calculating it this way is that the number represents something real: your server in fact handled that many requests per minute. You can then increase the number of threads and/or decrease the delays to discover your server's maximum throughput. If the calculation instead factored out delays and JMeter's processing, it would be unclear what you could conclude from that number.

The following list briefly describes the items on the graph. Further details on the precise meaning of the statistical terms can be found on the web (e.g. Wikipedia) or in a book on statistics.

- Data - plot the actual data values

- Average - plot the Average

- Median - plot the Median (midway value)

- Deviation - plot the Standard Deviation (a measure of the variation)

- Throughput - plot the number of samples per unit of time

The individual figures at the bottom of the display are the current values. "Latest Sample" is the current elapsed sample time, shown on the graph as "Data".

The value displayed at the top left of the graph is the maximum of the 90th percentile of response times.
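The sketch below computes the figures described above, assuming each sample is a (start time, elapsed time) pair in milliseconds. Two further choices are assumptions drawn from the description rather than from JMeter's source. The deviation is treated as a population standard deviation. Throughput is measured from the first start to the last end, so delays between samples lower it. The function name is hypothetical.

```python
import statistics


def graph_stats(samples):
    """samples: list of (start_ms, elapsed_ms) pairs, in any order.

    Returns average, median and standard deviation of elapsed times (ms)
    and throughput in samples per minute over the whole wall-clock window.
    """
    if not samples:
        raise ValueError("no samples")
    elapsed = [e for _, e in samples]
    first_start = min(s for s, _ in samples)
    last_end = max(s + e for s, e in samples)
    window_min = (last_end - first_start) / 60_000  # milliseconds -> minutes
    return {
        "average": statistics.mean(elapsed),
        "median": statistics.median(elapsed),
        "deviation": statistics.pstdev(elapsed),
        "throughput_per_min": len(samples) / window_min if window_min > 0 else float("nan"),
    }


# Four samples spread over 30 seconds: 8 samples per minute, gaps included.
print(graph_stats([(0, 100), (10_000, 200), (20_000, 300), (29_600, 400)]))
```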

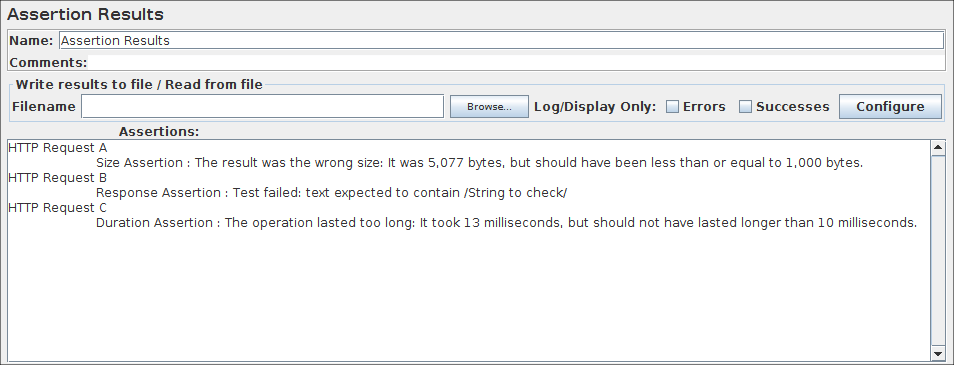

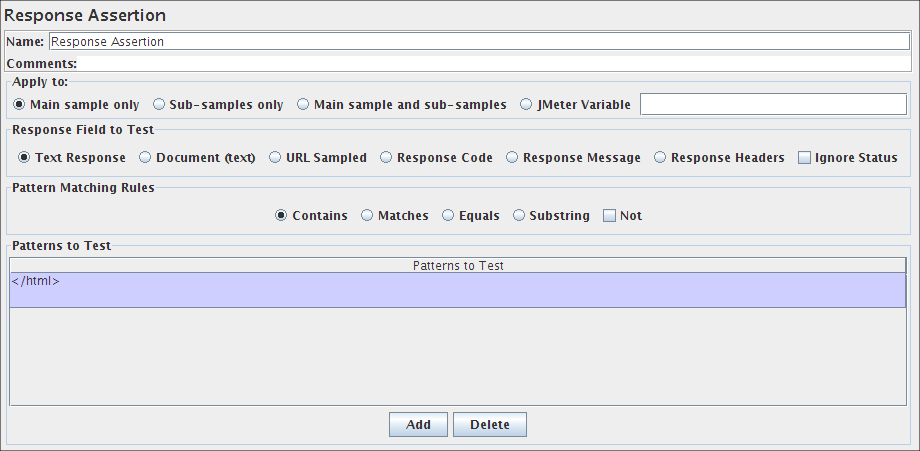

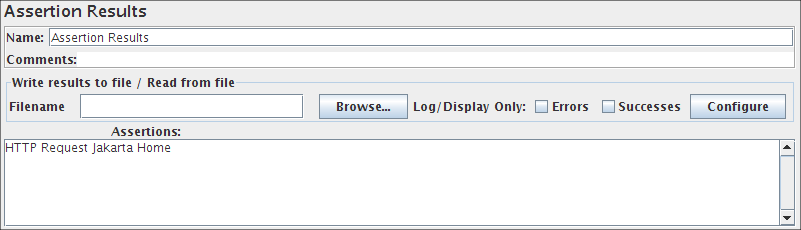

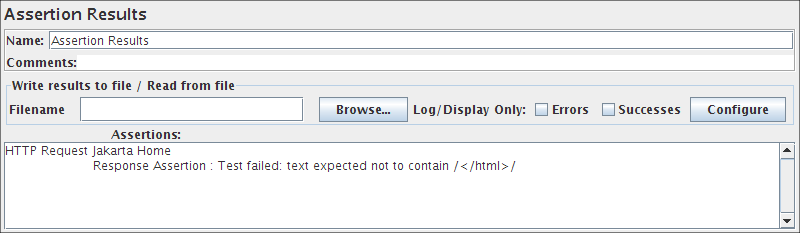

Assertion Results¶

The Assertion Results visualizer shows the Label of each sample taken. It also reports failures of any Assertions that are part of the test plan.

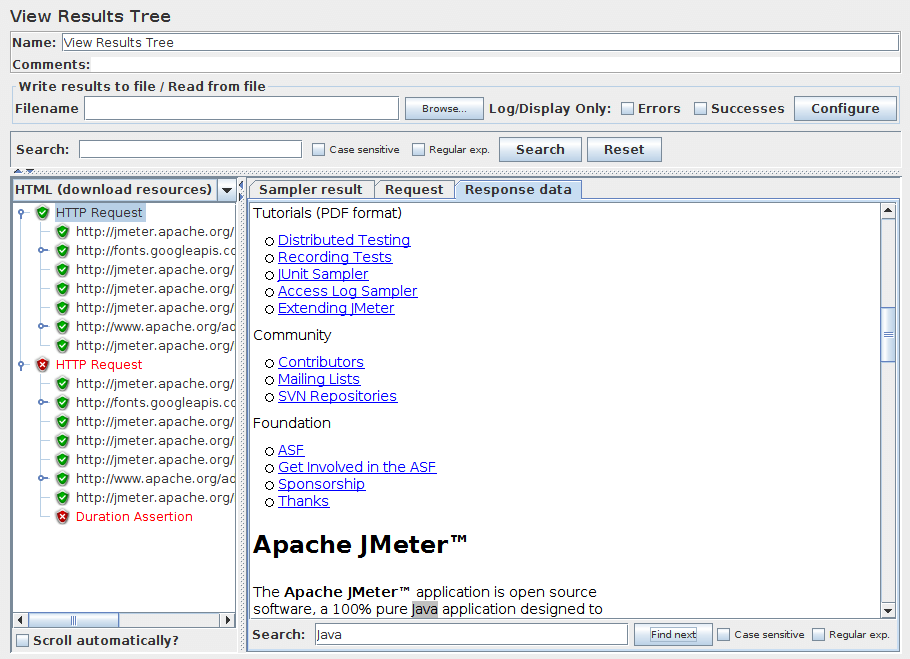

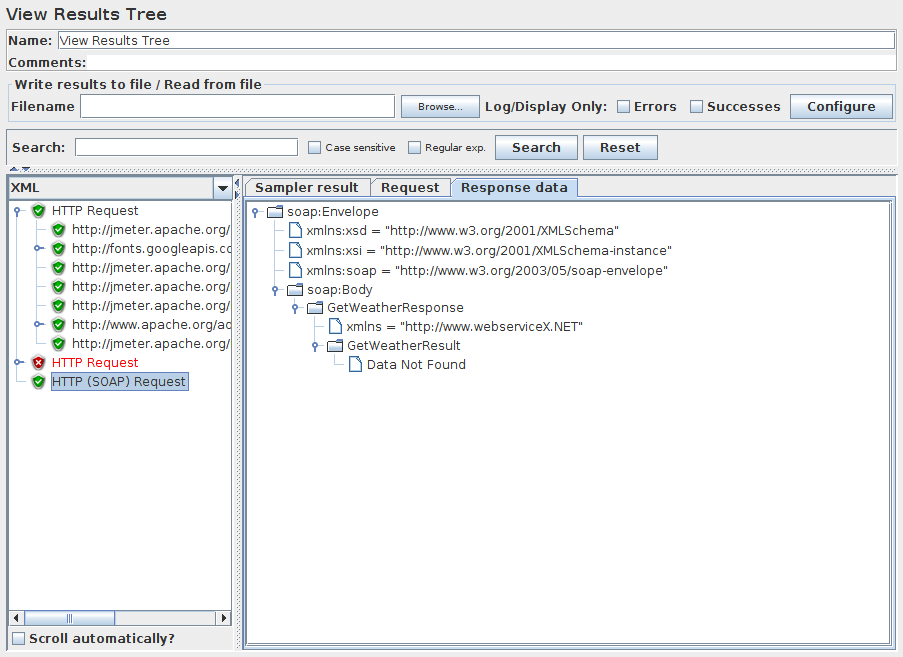

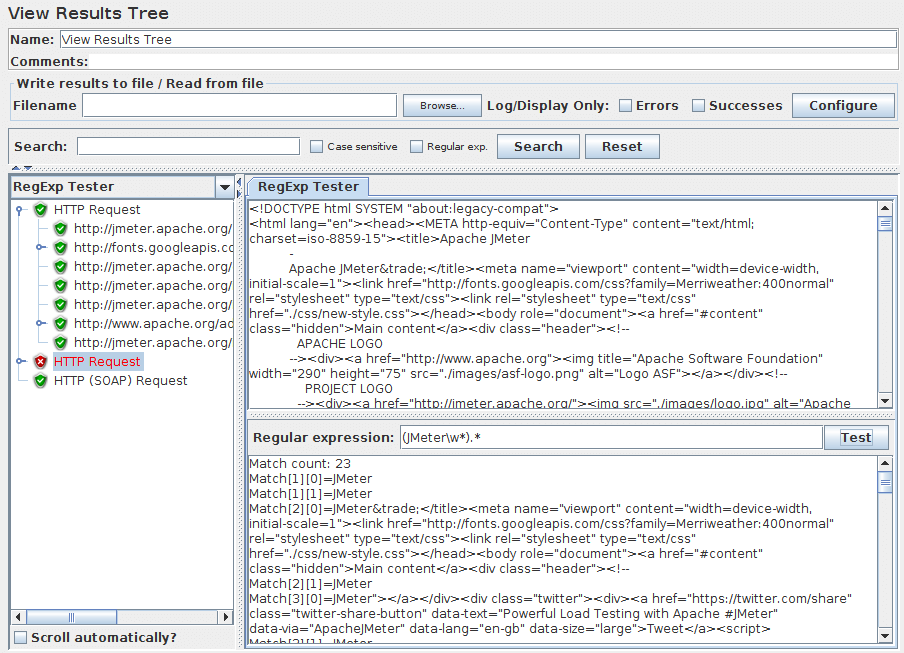

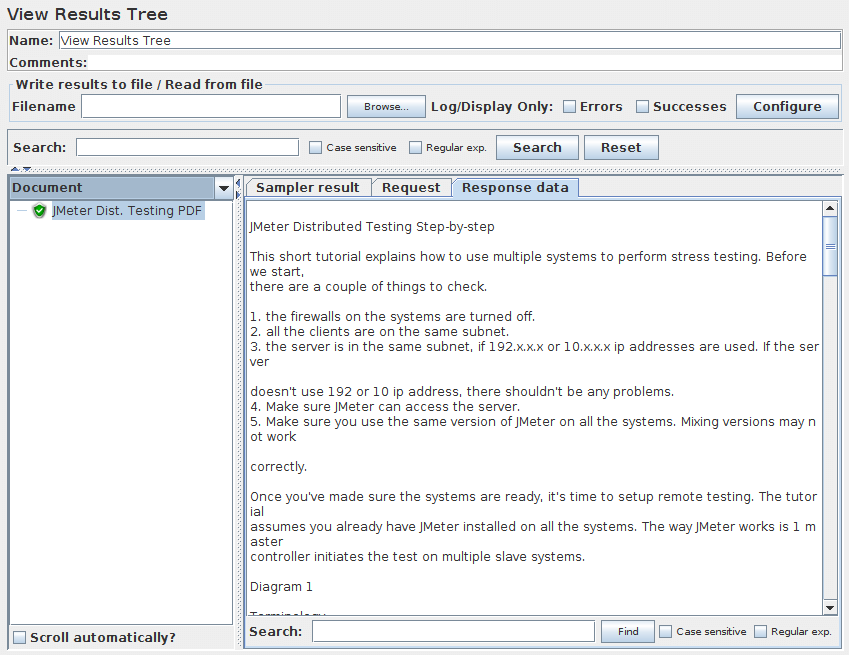

View Results Tree¶